🥇Top ML Papers of the Week

The top ML Papers of the Week (August 14 - August 20)

1). Self-Alignment with Instruction Backtranslation - presents an approach that automatically labels human-written text with corresponding instructions, which enables building a high-quality instruction-following language model; the steps are: 1) fine-tune an LLM on a small amount of seed data, 2) use it to generate instructions for each document in a web corpus, 3) curate high-quality examples via the LLM, and finally 4) fine-tune on the newly curated data; the self-alignment approach outperforms all other Llama-based models on the Alpaca leaderboard. (paper | tweet)
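The generate-then-curate loop in steps 2 and 3 can be sketched in a few lines. This is only an illustration of the data flow, not the paper's implementation: `generate_instruction`, `score_pair`, and the threshold of 4 are hypothetical stand-ins for calls to the fine-tuned LLM and its quality rating.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Example:
    instruction: str
    response: str


def backtranslate_and_curate(
    docs: List[str],
    generate_instruction: Callable[[str], str],  # hypothetical: LLM predicts an instruction for a doc
    score_pair: Callable[[Example], int],        # hypothetical: LLM rates the pair's quality
    threshold: int = 4,                          # assumed cut-off, not taken from the paper
) -> List[Example]:
    # Step 2: treat each web document as a response and predict its instruction.
    candidates = [Example(generate_instruction(doc), doc) for doc in docs]
    # Step 3: keep only the pairs the model itself rates highly.
    return [ex for ex in candidates if score_pair(ex) >= threshold]


# Toy stand-ins so the sketch runs without a model.
def toy_generate(doc: str) -> str:
    return "Write a short note about: " + doc.split()[0]


def toy_score(ex: Example) -> int:
    return 5 if len(ex.response.split()) >= 3 else 1


curated = backtranslate_and_curate(
    ["Gradient descent updates weights iteratively.", "Too short"],
    toy_generate,
    toy_score,
)
```

The curated pairs would then feed the final fine-tuning pass in step 4.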

2). Platypus - a family of fine-tuned and merged LLMs currently topping the Open LLM Leaderboard; it describes a process of efficiently fine-tuning and merging LoRA modules and also shows the benefits of collecting high-quality datasets for fine-tuning; specifically, it presents a small-scale, highly curated dataset, Open-Platypus, that enables strong performance with short, inexpensive fine-tuning: a 13B model can be trained on a single A100 GPU using 25K questions in 5 hours. (paper | tweet)
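For readers unfamiliar with what merging a LoRA module means, here is a minimal sketch of the standard LoRA merge, W' = W + (alpha / r) * B A, written with plain Python lists. It illustrates the general technique only; the function names are hypothetical and nothing here is taken from the Platypus code.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    # Plain nested-list matrix product: rows of x against columns of y.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w: Matrix, a: Matrix, b: Matrix, alpha: float, r: int) -> Matrix:
    # Fold the low-rank update B @ A, scaled by alpha / r, into the frozen weight W.
    scale = alpha / r
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


w = [[1.0, 0.0], [0.0, 1.0]]  # frozen base weight (2x2)
b = [[1.0], [2.0]]            # LoRA "B" factor (2x1), rank r = 1
a = [[3.0, 4.0]]              # LoRA "A" factor (1x2)
merged = merge_lora(w, a, b, alpha=2.0, r=1)
```

After merging, the adapter adds no inference cost because the update lives inside the weight matrix itself.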

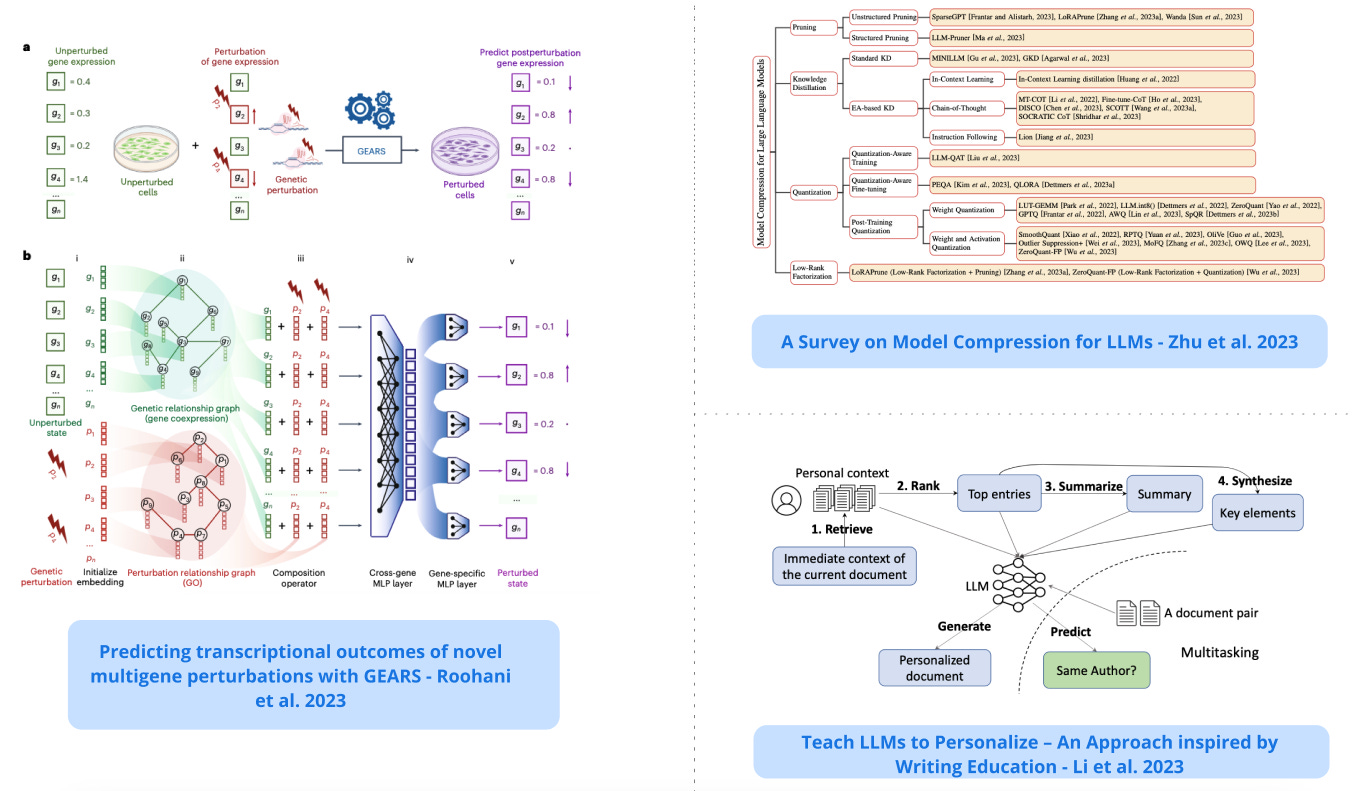

3). Model Compression for LLMs - a short survey on the recent model compression techniques for LLMs; provides a high-level overview of topics such as quantization, pruning, knowledge distillation, and more; it also provides an overview of benchmark strategies and evaluation metrics for measuring the effectiveness of compressed LLMs. (paper | tweet)
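As a concrete taste of one surveyed technique, the sketch below applies symmetric per-tensor int8 quantization to a small list of weights. It is a textbook illustration under simplifying assumptions (one scale for the whole tensor, round-to-nearest), not a method taken from the survey.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    # One scale for the whole tensor, chosen so the largest magnitude maps to 127.
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    # Recover approximate float weights from the stored integers.
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(orig - rec) for orig, rec in zip(weights, restored))
```

Each weight is stored in one byte instead of four, and the reconstruction error stays within about half a quantization step.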

4). GEARS - uses deep learning and a gene-relationship knowledge graph to help predict cellular responses to genetic perturbation; GEARS exhibited 40% higher precision than existing approaches in the task of predicting four distinct genetic interaction subtypes in a combinatorial perturbation screen. (paper | tweet)

5). Shepherd - introduces a language model (7B) specifically tuned to critique model responses and suggest refinements; this enables it to identify diverse errors and suggest remedies; its critiques are rated as either similar or preferred to those of ChatGPT. (paper | tweet)

6). Using GPT-4 Code Interpreter to Boost Mathematical Reasoning - proposes a zero-shot prompting technique for GPT-4 Code Interpreter that explicitly encourages the use of code for self-verification, which further boosts performance on math reasoning problems; initial experiments show that GPT4-Code achieved a zero-shot accuracy of 69.7% on the MATH dataset, an improvement of 27.5 percentage points over GPT-4’s performance (42.2%). Lots to explore here. (paper | tweet)
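The idea of verifying an answer with code can be shown on a toy problem. In the sketch below, the candidate list stands in for answers a model might propose and `check_answer` stands in for verification code it might write; both are hypothetical, and this is not the paper's prompt or pipeline.

```python
from typing import Callable, Iterable, Optional


def first_verified(
    candidates: Iterable[int], verify: Callable[[int], bool]
) -> Optional[int]:
    # Accept the first proposed answer that passes the programmatic check.
    for answer in candidates:
        if verify(answer):
            return answer
    return None  # nothing verified; a real system would ask the model to retry


# Toy problem: find the positive integer x with x^2 + 3x = 40.
def check_answer(x: int) -> bool:
    return x > 0 and x * x + 3 * x == 40


proposed = [4, -8, 5]  # hypothetical model outputs, in order of proposal
answer = first_verified(proposed, check_answer)
```

Here 4 fails the equation, -8 satisfies it but is not positive, and 5 passes, so the check filters out two plausible-looking wrong answers.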

7). Teach LLMs to Personalize - proposes a general approach based on multitask learning for personalized text generation using LLMs; the goal is to have an LLM generate personalized text without relying on predefined attributes. (paper | tweet)

8). OctoPack - presents 4 terabytes of Git commits across 350 languages used to instruction-tune code LLMs; achieves state-of-the-art performance on the HumanEval Python benchmark among models not trained on OpenAI outputs; the data is also used to extend the HumanEval benchmark to other tasks such as code explanation and code repair. (paper | tweet)

9). Efficient Guided Generation for LLMs - presents a library to help LLM developers guide text generation in a fast and reliable way; provides generation methods that guarantee that the output will match a regular expression, or follow a JSON schema. (paper | tweet)
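The core trick behind regex-guaranteed output can be sketched without any library: track a finite-state machine for the pattern and, at each step, only let the model pick from tokens the current state allows. The sketch below hand-codes the automaton for the regex `-?[0-9]+` over a character-level vocabulary; `toy_score` is a hypothetical stand-in for model logits, and none of this reflects the library's actual API.

```python
import re
from typing import Callable, List

DIGITS = "0123456789"
EOS = "<eos>"

# Hand-written automaton for the regex -?[0-9]+ (state -> token -> next state).
TRANSITIONS = {
    "start": {**{d: "int" for d in DIGITS}, "-": "sign"},
    "sign": {d: "int" for d in DIGITS},
    "int": {d: "int" for d in DIGITS},
}
ACCEPTING = {"int"}


def allowed_tokens(state: str) -> List[str]:
    # Tokens that keep the output on a path to a match; EOS only once a match is complete.
    allowed = list(TRANSITIONS[state])
    if state in ACCEPTING:
        allowed.append(EOS)
    return sorted(allowed)


def guided_decode(score: Callable[[List[str], str], float], max_len: int = 8) -> str:
    # Greedy decoding restricted to allowed tokens; assumes max_len >= 2.
    state, out = "start", []
    while len(out) < max_len:
        token = max(allowed_tokens(state), key=lambda t: score(out, t))
        if token == EOS:
            break
        out.append(token)
        state = TRANSITIONS[state][token]
    return "".join(out)


def toy_score(prefix: List[str], token: str) -> float:
    # Hypothetical "model" that wants to emit "-", "4", "2", then stop.
    wanted = ["-", "4", "2", EOS]
    target = wanted[len(prefix)] if len(prefix) < len(wanted) else EOS
    return 1.0 if token == target else 0.0


text = guided_decode(toy_score)
matches = re.fullmatch(r"-?[0-9]+", text) is not None
```

Because disallowed tokens are never candidates, the output matches the pattern even when the scoring function is uninformative.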

10). Bayesian Flow Networks - introduces a new class of generative models bringing together the power of Bayesian inference and deep learning; it differs from diffusion models in that it operates on the parameters of a data distribution rather than on a noisy version of the data; it’s adapted to continuous, discretized and discrete data with minimal changes to the training procedure. (paper | tweet)
