🥇Top ML Papers of the Week

The Top ML Papers of the Week (November 18 - 24)

1). AlphaQubit - a new AI-based decoder that sets a state-of-the-art benchmark for identifying errors in quantum computers; using a transformer architecture, AlphaQubit made 6% fewer errors than tensor network methods and 30% fewer errors than correlated matching when tested on the Sycamore data; it also shows promising results in simulations of larger systems of up to 241 qubits; while this represents significant progress in quantum error correction, the system still needs to become faster before it can correct errors in real time for practical quantum computing applications. (paper | tweet)

2). The Dawn of GUI Agent - explores the capabilities of Claude 3.5 Computer Use across different domains and software; the authors also provide an out-of-the-box agent framework for deploying API-based GUI automation models; Claude 3.5 Computer Use demonstrates unprecedented ability in end-to-end language-to-desktop actions. (paper | tweet)

Editor Message

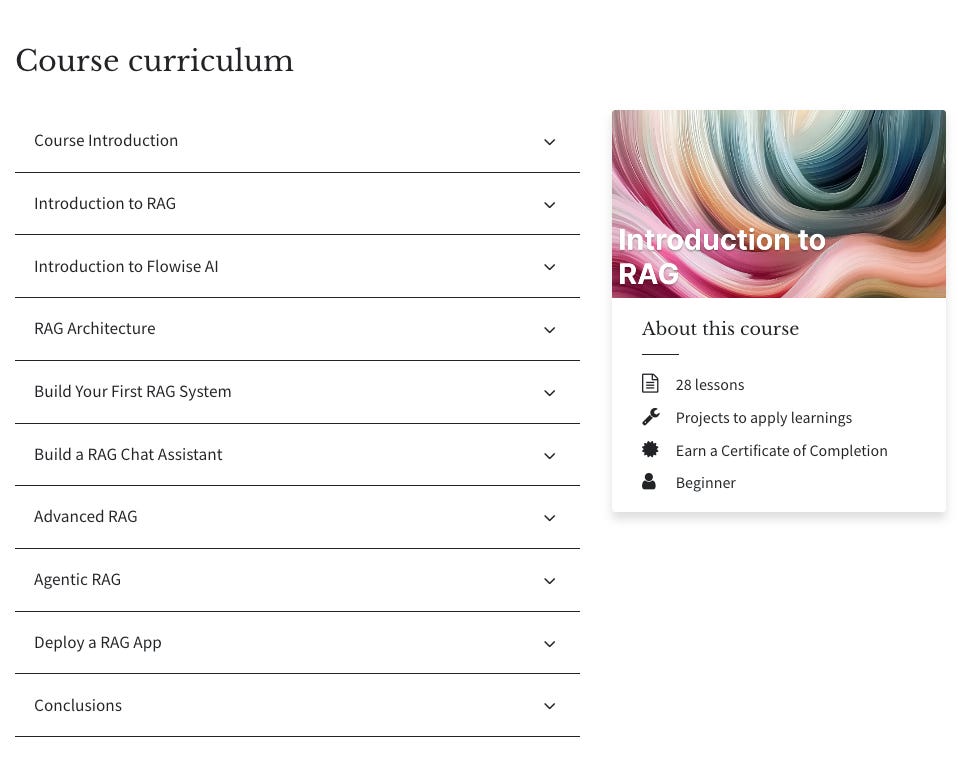

We’ve launched a new course Introduction to Retrieval Augmented Generation (RAG). It covers fundamentals, design patterns, and building advanced RAG systems ranging from chat assistants to Agentic RAG.

Use BLACKFRIDAY for a 35% discount. Offer ends 11/29.

Students and teams can reach out to training@dair.ai for special discounts.

3). A Statistical Approach to LLM Evaluation - proposes five key statistical recommendations for a more rigorous evaluation of LLM performance differences: 1) using the Central Limit Theorem to estimate the theoretical average across all possible questions rather than reporting only the observed average; 2) clustering standard errors when questions are related rather than independent; 3) reducing variance within questions through resampling or using next-token probabilities; 4) analyzing paired differences between models, since questions are shared across evaluations; and 5) using power analysis to determine appropriate sample sizes for detecting meaningful differences between models; the authors argue that these statistical approaches will help researchers better determine whether performance differences between models represent genuine capability gaps or are simply due to chance, leading to more precise and reliable model evaluations. (paper | tweet)
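
Recommendations 1, 2, and 4 can be illustrated with a few lines of standard-library Python. This is a minimal sketch, not the authors' code: the function names, the toy 0/1 scores, and the cluster assignment are all made up for illustration, and the clustered estimator omits any finite-sample correction.

```python
import math
from collections import defaultdict


def mean_and_sem(values):
    """Mean and CLT-based standard error of the mean (sample variance, n - 1)."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, math.sqrt(variance / n)


def paired_difference(scores_a, scores_b):
    """Mean per-question score difference (A - B) and its standard error."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    return mean_and_sem(diffs)


def clustered_sem(values, cluster_ids):
    """Cluster-robust standard error of the mean (no finite-sample correction).

    Deviations from the mean are summed within each cluster before squaring,
    so correlated questions no longer count as independent observations.
    """
    n = len(values)
    mean = sum(values) / n
    cluster_sums = defaultdict(float)
    for value, cluster in zip(values, cluster_ids):
        cluster_sums[cluster] += value - mean
    return math.sqrt(sum(s ** 2 for s in cluster_sums.values())) / n


# Toy data (hypothetical): per-question correctness for two models on the same
# eight questions, where consecutive pairs of questions share a source passage.
scores_a = [1, 1, 0, 0, 1, 1, 1, 1]
scores_b = [0, 0, 0, 0, 1, 1, 0, 0]
clusters = [0, 0, 1, 1, 2, 2, 3, 3]

mean_diff, sem_diff = paired_difference(scores_a, scores_b)
diffs = [a - b for a, b in zip(scores_a, scores_b)]
sem_clustered = clustered_sem(diffs, clusters)

# 95% confidence interval for the mean difference under the normal approximation.
ci_low = mean_diff - 1.96 * sem_clustered
ci_high = mean_diff + 1.96 * sem_clustered
print(f"difference = {mean_diff:.3f}, naive SE = {sem_diff:.3f}, "
      f"clustered SE = {sem_clustered:.3f}, 95% CI = [{ci_low:.3f}, {ci_high:.3f}]")
```

In this toy data the paired questions move together, so the clustered standard error is larger than the naive one; treating the questions as independent would overstate how certain the comparison is.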

4). Towards Open Reasoning Models for Open-Ended Solutions - proposes Marco-o1, a reasoning model built for open-ended solutions; Marco-o1 is powered by Chain-of-Thought (CoT) fine-tuning, Monte Carlo Tree Search (MCTS), reflection mechanisms, and other recent reasoning strategies; it achieves accuracy improvements of +6.17% on the MGSM (English) dataset and +5.60% on the MGSM (Chinese) dataset. (paper | tweet)

5). LLM-based Agents for Automated Bug Fixing - analyzes seven leading LLM-based bug fixing systems on the SWE-bench Lite benchmark, finding that MarsCode Agent (developed by ByteDance) achieved the highest success rate at 39.33%; reveals that, for error localization, line-level fault localization accuracy is more critical than file-level accuracy, and that bug reproduction capabilities significantly impact fixing success; shows that 24 of the 168 resolved issues could only be solved using reproduction techniques, though reproduction sometimes misled LLMs when issue descriptions were already clear; concludes that improvements are needed in both LLM reasoning capabilities and agent workflow design to make automated bug fixing more effective. (paper | tweet)

6). Cut Your Losses in Large-Vocabulary Language Models - introduces Cut Cross-Entropy (CCE), a novel method to significantly reduce memory usage during LLM training by optimizing how the cross-entropy loss is computed; currently, the cross-entropy layer in LLM training consumes a disproportionate amount of memory (up to 90% in some models) because it stores logits for all possible vocabulary tokens; CCE addresses this by computing the logit only for the correct token and evaluating the log-sum-exp over all logits on the fly in flash memory, so the full logit matrix is never stored; the authors show that the approach reduces the memory footprint of the loss computation for Gemma 2 (2B) from 24GB to just 1MB; the method also leverages the inherent sparsity of softmax to skip elements that contribute negligibly to the gradients; finally, the authors demonstrate that CCE achieves this dramatic memory reduction without sacrificing training speed or convergence, enabling larger batch sizes and potentially more efficient scaling of LLM training. (paper)
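
The core identity behind this, loss = log-sum-exp(all logits) - logit(correct token), can be sketched in plain Python. This is only an illustration of the arithmetic for a single token on the CPU, under the assumption that the vocabulary is processed in small chunks; it is not the authors' GPU kernel, it does not include the gradient-sparsity filtering, and the function names, `chunk_size` parameter, and toy vectors are hypothetical.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def chunked_cross_entropy(embedding, classifier, target, chunk_size=2):
    """Cross-entropy for one token without storing all vocabulary logits.

    embedding:  hidden-state vector for the token
    classifier: one weight vector per vocabulary entry
    target:     index of the correct token
    """
    # Logit of the correct token only: a single dot product.
    target_logit = dot(embedding, classifier[target])

    # Streaming log-sum-exp: keep a running max and a rescaled running sum,
    # so only one chunk of logits exists at any time.
    running_max = -math.inf
    running_sum = 0.0
    for start in range(0, len(classifier), chunk_size):
        chunk = classifier[start:start + chunk_size]
        chunk_logits = [dot(embedding, weights) for weights in chunk]
        new_max = max(running_max, max(chunk_logits))
        running_sum = (running_sum * math.exp(running_max - new_max)
                       + sum(math.exp(z - new_max) for z in chunk_logits))
        running_max = new_max

    log_sum_exp = running_max + math.log(running_sum)
    return log_sum_exp - target_logit


def naive_cross_entropy(embedding, classifier, target):
    """Reference version that materializes every logit at once."""
    logits = [dot(embedding, weights) for weights in classifier]
    top = max(logits)
    return top + math.log(sum(math.exp(z - top) for z in logits)) - logits[target]


# Toy example (made-up numbers): 3-dimensional hidden state, 5-token vocabulary.
embedding = [0.5, -1.0, 2.0]
classifier = [
    [0.1, 0.2, 0.3],
    [-0.4, 0.0, 0.5],
    [1.0, -1.0, 0.2],
    [0.3, 0.3, 0.9],
    [-0.2, 0.8, -0.1],
]
target = 3

chunked_loss = chunked_cross_entropy(embedding, classifier, target)
naive_loss = naive_cross_entropy(embedding, classifier, target)
print(chunked_loss, naive_loss)
```

Both functions return the same loss up to floating-point rounding; the difference is that the chunked version holds at most `chunk_size` logits at a time, which is the memory saving the summary describes.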

7). BABY-AIGS - a multi-agent system for automated scientific discovery that emphasizes falsification through automated ablation studies; the system was tested on three ML tasks (data engineering, self-instruct alignment, and language modeling), demonstrating the ability to produce meaningful scientific discoveries; however, its performance is still below that of experienced human researchers. (paper | tweet)

8). Does Prompt Formatting Impact LLM Performance - examines how different prompt formats (plain text, Markdown, JSON, and YAML) affect GPT model performance across various tasks; finds that GPT-3.5-turbo's performance can vary by up to 40% depending on the prompt format, while larger models like GPT-4 are more robust to format changes; argues that there is no universally optimal format across models or tasks - for instance, GPT-3.5-turbo generally performed better with JSON formats while GPT-4 preferred Markdown; models from the same family showed similar format preferences, but these preferences didn't transfer well between different model families; suggests that prompt formatting significantly impacts model performance and should be carefully considered in prompt engineering, model evaluation, and LLM-based applications. (paper)
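
To make the comparison concrete, here is a minimal sketch of rendering one and the same prompt in the four formats so each variant can be sent to a model and scored. The field names (`persona`, `instructions`, `question`), the example text, and the `render_prompt` helper are hypothetical and are not taken from the paper; the YAML branch is hand-rolled and only handles flat string fields.

```python
import json

FORMATS = ("plain", "markdown", "json", "yaml")


def render_prompt(task, format_name):
    """Render the same flat dict of string fields in one of four prompt formats."""
    if format_name == "plain":
        return "\n".join(f"{key.capitalize()}: {value}" for key, value in task.items())
    if format_name == "markdown":
        return "\n\n".join(f"## {key.capitalize()}\n{value}" for key, value in task.items())
    if format_name == "json":
        return json.dumps(task, indent=2)
    if format_name == "yaml":
        # A JSON string literal is also a valid double-quoted YAML scalar,
        # so json.dumps handles quoting and escaping of each value.
        return "\n".join(f"{key}: {json.dumps(value)}" for key, value in task.items())
    raise ValueError(f"unknown format: {format_name}")


# Hypothetical task content used only for illustration.
task = {
    "persona": "You are a careful Python reviewer.",
    "instructions": "Answer with a single word: yes or no.",
    "question": "Does list.sort() return the sorted list?",
}

prompts = {name: render_prompt(task, name) for name in FORMATS}
for name, prompt in prompts.items():
    print(f"--- {name} ---\n{prompt}\n")
```

Because the content is identical across variants, any difference in model accuracy between them can be attributed to the format rather than to the wording.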

9). FinRobot - an AI agent framework for equity research that uses multi-agent Chain-of-Thought prompting, combining data analysis with human-like reasoning to produce professional investment reports comparable to those of major brokerages; it leverages three agents: a Data-CoT Agent that aggregates diverse data sources for robust financial integration; a Concept-CoT Agent that mimics an analyst’s reasoning to generate actionable insights; and a Thesis-CoT Agent that synthesizes these insights into a coherent investment thesis and report. (paper)

10). Bi-Mamba - a scalable 1-bit Mamba architecture designed for more efficient LLMs, available in three sizes: 780M, 1.3B, and 2.7B parameters; Bi-Mamba achieves performance comparable to its full-precision counterparts (e.g., FP16 or BF16); it significantly reduces the memory footprint while achieving better accuracy than post-training binarization Mamba baselines. (paper | tweet)