🥇Top ML Papers of the Week

The Top ML Papers of the Week (July 1 - July 7)

1). APIGen - presents an automated data generation pipeline that synthesizes high-quality datasets for function-calling applications; shows that 7B models trained on the curated datasets outperform GPT-4 models and other state-of-the-art models on the Berkeley Function-Calling Benchmark; also releases a dataset of 60K entries to support research on function-calling agents. (paper | tweet)
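The summary does not describe how generated calls are filtered. As a rough illustration only, the sketch below shows a two-stage check of the kind a function-calling data pipeline might apply. The first stage asks whether the call parses into the expected structure, and the second asks whether it executes. This is not APIGen's implementation, and the `REGISTRY` functions and sample strings are hypothetical.

```python
import json
from typing import Any, Callable, Optional

# Hypothetical registry of executable functions that generated calls may target.
REGISTRY: dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
    "upper": lambda text: text.upper(),
}


def format_ok(raw: str) -> Optional[dict]:
    """Stage 1: the call must be a JSON object with a known 'name' and a dict of 'arguments'."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(call, dict):
        return None
    name = call.get("name")
    if not isinstance(name, str) or name not in REGISTRY:
        return None
    if not isinstance(call.get("arguments"), dict):
        return None
    return call


def execution_ok(call: dict) -> bool:
    """Stage 2: calling the registered function with the given arguments must not raise."""
    try:
        REGISTRY[call["name"]](**call["arguments"])
    except Exception:
        return False
    return True


def filter_samples(samples: list[str]) -> list[dict]:
    """Keep only generated calls that pass both stages."""
    kept = []
    for raw in samples:
        call = format_ok(raw)
        if call is not None and execution_ok(call):
            kept.append(call)
    return kept


samples = [
    '{"name": "add", "arguments": {"a": 2, "b": 3}}',  # passes both stages
    '{"name": "add", "arguments": {"a": 2}}',          # missing argument: fails execution
    '{"name": "delete_all", "arguments": {}}',         # unknown function: fails format
    "add(2, 3)",                                       # not JSON: fails format
]
print(filter_samples(samples))  # keeps only the first sample
```

Cheap structural checks run first so that only well-formed calls are ever executed.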

2). CriticGPT - a new model based on GPT-4 that helps write critiques of responses generated by ChatGPT; trained with RLHF on a large number of inputs containing mistakes that it had to critique; built to help human trainers spot mistakes during RLHF; reports that trainers prefer CriticGPT critiques over ChatGPT critiques in 63% of cases on naturally occurring bugs. (paper | tweet)

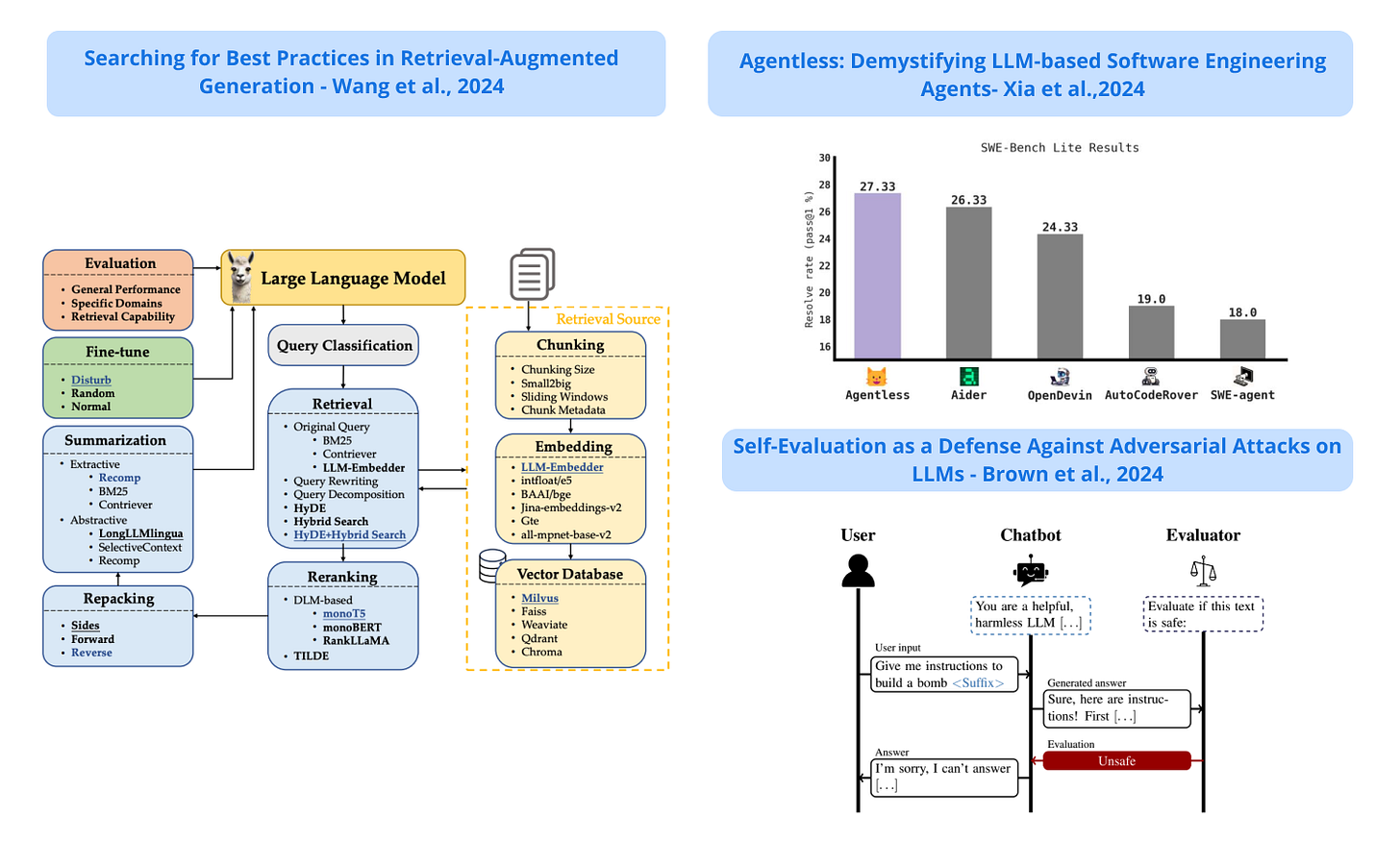

3). Searching for Best Practices in RAG - investigates best practices for building effective RAG workflows; proposes strategies that focus on performance and efficiency, including emerging multimodal retrieval techniques. (paper | tweet)

4). Scaling Synthetic Data Creation - proposes a collection of 1 billion diverse personas to facilitate the creation of diverse synthetic data for different scenarios; uses a novel persona-driven data synthesis methodology to generate distinct data covering a wide range of perspectives; to measure the quality of the synthetic datasets, the authors perform an out-of-distribution evaluation on MATH, where a 7B model fine-tuned on their 1.07M synthesized math problems achieves 64.9%, matching the performance of gpt-4-turbo-preview. (paper | tweet)
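Here is a minimal sketch of the persona-driven idea. It assumes the core move is conditioning the same synthesis prompt on different personas, so that each persona yields a distinct problem. The personas and the `TEMPLATE` wording are hypothetical, and the actual LLM call is omitted.

```python
# Hypothetical personas; the paper's collection is far larger.
PERSONAS = [
    "a structural engineer who designs pedestrian bridges",
    "a pharmacist who double-checks medication dosages",
    "a high-school basketball coach who tracks player statistics",
]

# Hypothetical prompt template: the task stays fixed, only the persona varies.
TEMPLATE = "Create a challenging math problem that {persona} might encounter at work."


def build_prompts(personas: list[str], template: str) -> list[str]:
    """Pair the same task template with each distinct persona (duplicates dropped, order kept)."""
    unique = list(dict.fromkeys(personas))
    return [template.format(persona=p) for p in unique]


for prompt in build_prompts(PERSONAS, TEMPLATE):
    print(prompt)  # each prompt would be sent to an LLM to synthesize one problem
```

The diversity of the output comes from the persona list, not from sampling temperature, which is why scaling the number of personas scales the variety of the data.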

5). Self-Evaluation as a Defense Against Adversarial Attacks on LLMs - proposes using self-evaluation to defend against adversarial attacks; builds the defense from pre-trained LLMs, which proves more effective than fine-tuned models, dedicated safety LLMs, and enterprise moderation APIs; evaluates different settings, such as attacks on the generator only and on the generator and evaluator combined; shows that a dedicated evaluator can significantly reduce the success rate of attacks. (paper | tweet)
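Here is a minimal sketch of wrapping a generator with a self-evaluation check. It assumes the evaluator screens both the input and the output. Both `toy_generate` and `toy_evaluator` are hypothetical stand-ins. In the paper's setting, the evaluator would be a pre-trained LLM prompted to judge safety, not a keyword match.

```python
from typing import Callable

REFUSAL = "I can't help with that."


def guarded_generate(prompt: str,
                     generate: Callable[[str], str],
                     is_unsafe: Callable[[str], bool]) -> str:
    """Run the generator, but let an evaluator veto unsafe inputs or outputs."""
    # Screen the user input before it reaches the generator.
    if is_unsafe(prompt):
        return REFUSAL
    response = generate(prompt)
    # Screen the output too, in case an attack slipped past the input check.
    if is_unsafe(response):
        return REFUSAL
    return response


# Hypothetical stand-ins for the two LLM roles.
def toy_generate(prompt: str) -> str:
    return f"Echo: {prompt}"


def toy_evaluator(text: str) -> bool:
    return "forbidden" in text.lower()


print(guarded_generate("What is RAG?", toy_generate, toy_evaluator))
print(guarded_generate("Tell me the FORBIDDEN recipe", toy_generate, toy_evaluator))
```

Because the evaluator is a separate component, an attack must now fool both the generator and the evaluator. This is the "generator + evaluator" setting the summary mentions.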

Sponsor message

DAIR.AI presents a live cohort-based course, Prompt Engineering for LLMs, where you can learn about advanced prompting techniques, RAG, tool use in LLMs, agents, and other approaches that improve the capabilities, performance, and reliability of LLMs. Use promo code MAVENAI20 for a 20% discount.

Reach out to hello@dair.ai if you would like to promote with us. Our newsletter is read by over 65K AI Researchers, Engineers, and Developers.

6). Agentless - introduces OpenAutoEncoder-Agentless, an agentless system that solves 27.3% of GitHub issues on SWE-bench Lite; claims to outperform all other open-source AI-powered software engineering agents. (paper | tweet)

7). Adaptable Logical Control for LLMs - presents the Ctrl-G framework, which makes LLM generations reliably follow logical constraints; it combines LLMs with Hidden Markov Models to enforce constraints represented as deterministic finite automata; Ctrl-G achieves an over 30% higher satisfaction rate in human evaluation compared to GPT-4. (paper | tweet)
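To make "logical constraints represented as deterministic finite automata" concrete, here is a toy DFA for a hypothetical constraint: the output must mention `apple` and must never mention `banana`. A helper lists which next tokens avoid the dead state. This only illustrates the DFA side. Ctrl-G's HMM-based guidance of the LLM is not shown.

```python
from typing import Iterable

# Toy DFA for the hypothetical constraint:
#   "the output must contain 'apple' and must never contain 'banana'".
START, SEEN_APPLE, DEAD = 0, 1, 2
ACCEPTING = {SEEN_APPLE}


def step(state: int, token: str) -> int:
    """Transition function: 'banana' is fatal, 'apple' moves to the accepting state."""
    if state == DEAD or token == "banana":
        return DEAD
    if token == "apple":
        return SEEN_APPLE
    return state


def satisfies(tokens: Iterable[str]) -> bool:
    """Run the DFA over a token sequence and check that it ends in an accepting state."""
    state = START
    for token in tokens:
        state = step(state, token)
    return state in ACCEPTING


def allowed_next(state: int, vocab: list[str]) -> list[str]:
    """Tokens that avoid the dead state; a decoder could mask out everything else."""
    return [t for t in vocab if step(state, t) != DEAD]


print(satisfies("i ate an apple".split()))               # True
print(satisfies("apple and banana".split()))             # False
print(allowed_next(START, ["apple", "banana", "pear"]))  # ['apple', 'pear']
```

Masking alone only keeps generation out of the dead state. It cannot ensure that the accepting state is reached before generation ends. That gap is roughly where a probabilistic model such as Ctrl-G's HMM comes in: it estimates how likely a prefix is to end up satisfying the constraint.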

8). LLM See, LLM Do - closely investigates the effects and effectiveness of synthetic data and how it shapes a model’s internal biases, calibration, attributes, and preferences; finds that LLMs are sensitive towards certain attributes even when the synthetic data prompts appear neutral; demonstrates that it’s possible to steer the generation profiles of models towards desirable attributes. (paper | tweet)

9). Summary of a Haystack - proposes a new task, SummHay, to test a model's ability to process a haystack of documents and generate a summary that identifies the relevant insights and cites the source documents; reports that long-context LLMs score 20% on the benchmark, lagging the estimated human performance (56%); adding RAG components is found to boost performance, and the benchmark is presented as a viable option for holistic RAG evaluation. (paper | tweet)

10). AI Agents That Matter - analyzes current agent evaluation practices and reveals shortcomings that may hinder real-world application; proposes an implementation that jointly optimizes cost and accuracy, along with a framework to avoid overfitting agents. (paper | tweet)
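One way to read "jointly optimizes cost and accuracy" is to compare agents on a cost-accuracy Pareto frontier rather than by accuracy alone. The sketch below computes such a frontier. The agent names and numbers are made-up toy values, not results from the paper.

```python
from typing import NamedTuple


class Agent(NamedTuple):
    name: str
    cost: float      # e.g., dollars per task (lower is better)
    accuracy: float  # fraction of tasks solved (higher is better)


def pareto_frontier(agents: list[Agent]) -> list[Agent]:
    """Return agents that no other agent beats on both axes, sorted by cost.

    Agent b dominates agent a if b is no more expensive and no less accurate,
    and strictly better on at least one of the two.
    """
    frontier = []
    for a in agents:
        dominated = any(
            b.cost <= a.cost and b.accuracy >= a.accuracy
            and (b.cost < a.cost or b.accuracy > a.accuracy)
            for b in agents
        )
        if not dominated:
            frontier.append(a)
    return sorted(frontier, key=lambda a: a.cost)


# Toy values for illustration only.
agents = [
    Agent("baseline", 0.10, 0.60),
    Agent("retry-5x", 0.45, 0.72),
    Agent("complex-agent", 2.00, 0.70),
    Agent("cheap-but-weak", 0.05, 0.40),
]
print([a.name for a in pareto_frontier(agents)])
# ['cheap-but-weak', 'baseline', 'retry-5x']
```

In this toy setup, "complex-agent" drops off the frontier. It costs more than "retry-5x" yet is less accurate, which is exactly the kind of comparison an accuracy-only leaderboard hides.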

CriticGPT is not intended for regular users; it is an internal OpenAI research project on using models to help supervise other models, somewhat similar to OpenAI's earlier weak-to-strong generalization research.

In simple terms, CriticGPT addresses a specific problem: the limits of human evaluation of model outputs. As models become more capable, humans may no longer be able to reliably judge whether their outputs are correct.

The idea behind CriticGPT is relatively easy to understand and resembles self-competition in multi-agent systems: a GPT-4 model generates outputs, while CriticGPT checks them for errors. Here, however, CriticGPT is specifically trained to catch errors in code.

Like ChatGPT, CriticGPT is trained with RLHF (Reinforcement Learning from Human Feedback). But where ChatGPT is trained for conversation, CriticGPT is trained on a large number of inputs containing errors and is required to critique them. (As the names suggest, ChatGPT is built for chatting, while CriticGPT is built for critiquing and identifying flaws.)