🥇Top ML Papers of the Week

The Top ML Papers of the Week (April 15 - April 21)

1). Llama 3 - a family of LLMs that includes 8B and 70B pretrained and instruction-tuned models; Llama 3 8B outperforms Gemma 7B and Mistral 7B Instruct; Llama 3 70B broadly outperforms Gemini Pro 1.5 and Claude 3 Sonnet. (paper | tweet)

2). Mixtral 8x22B - a new open-source sparse mixture-of-experts model; the release reports that, compared to other community models, it delivers the best performance/cost ratio on MMLU; it also shows strong performance on reasoning, knowledge retrieval, maths, and coding. (paper | tweet)

3). Chinchilla Scaling: A replication attempt - attempts to replicate the third estimation procedure of the compute-optimal scaling law proposed in Hoffmann et al. (2022) (i.e., Chinchilla scaling); finds that “the reported estimates are inconsistent with their first two estimation methods, fail at fitting the extracted data, and report implausibly narrow confidence intervals.” (paper | tweet)

4). How Faithful are RAG Models? - aims to quantify the tug-of-war between RAG and LLMs' internal prior; it focuses on GPT-4 and other LLMs on question answering for the analysis; finds that providing correct retrieved information fixes most of the model mistakes (94% accuracy); when the documents contain more incorrect values and the LLM's internal prior is weak, the LLM is more likely to recite incorrect information; the LLMs are found to be more resistant when they have a stronger prior. (paper | tweet)

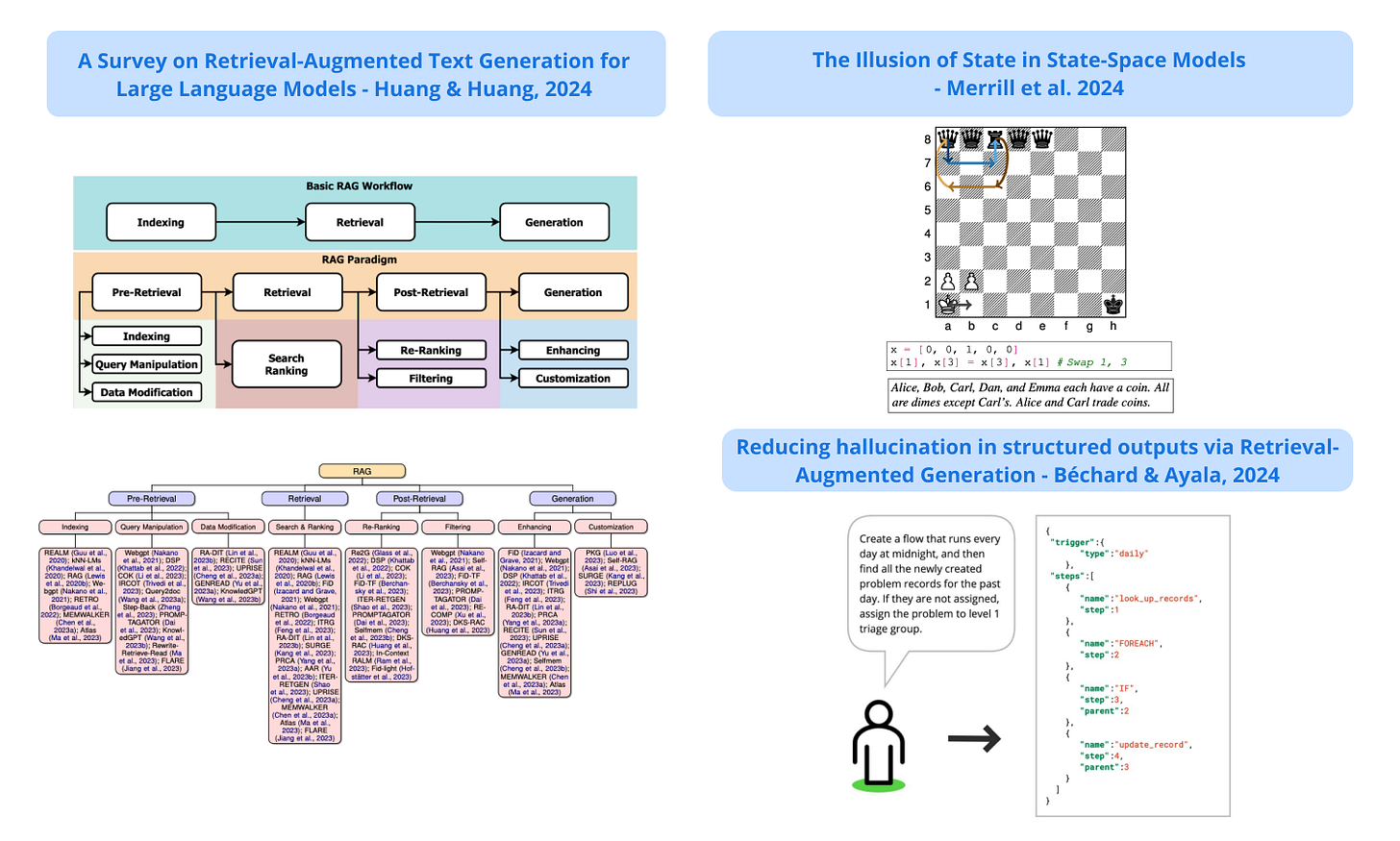

5). A Survey on Retrieval-Augmented Text Generation for LLMs - presents a comprehensive overview of the RAG domain, its evolution, and challenges; it includes a detailed discussion of four important aspects of RAG systems: pre-retrieval, retrieval, post-retrieval, and generation. (paper | tweet)

6). The Illusion of State in State-Space Models - investigates the expressive power of state space models (SSMs) and reveals that it is limited in much the same way as that of transformers: SSMs cannot express computation outside the complexity class TC^0; finds that SSMs cannot solve state-tracking problems like permutation composition, nor related tasks such as evaluating code or tracking entities in a long narrative. (paper | tweet)

7). Reducing Hallucination in Structured Outputs via RAG - discusses how to deploy an efficient RAG system for structured output tasks; the RAG system combines a small language model with a very small retriever; it shows that RAG can enable deploying powerful LLM-powered systems in limited-resource settings while mitigating issues like hallucination and increasing the reliability of outputs. (paper | tweet)

8). Emerging AI Agent Architectures - presents a concise summary of emerging AI agent architectures; it focuses the discussion on capabilities like reasoning, planning, and tool calling which are all needed to build complex AI-powered agentic workflows and systems; the report includes current capabilities, limitations, insights, and ideas for future development of AI agent design. (paper | tweet)

9). LLM In-Context Recall is Prompt Dependent - analyzes the in-context recall performance of different LLMs using several needle-in-a-haystack tests; shows that LLMs differ in how well they recall facts at different context lengths and placement depths; finds that a model's recall performance is significantly affected by small changes in the prompt; the interplay between prompt content and training data can degrade the response quality; the recall ability of a model can be improved by increasing its size, enhancing the attention mechanism, trying different training strategies, and applying fine-tuning. (paper | tweet)

10). A Survey on State Space Models - a survey paper on state space models (SSMs) with experimental comparison and analysis; it reviews current SSMs, improvements compared to alternatives, challenges, and their applications. (paper | tweet)
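For readers unfamiliar with the permutation composition task mentioned in item 6, here is a minimal sketch of what "state tracking" means in that setting. It is an illustration only, not code from the paper: the function names `compose` and `track_state`, the tuple encoding of permutations, and the choice of five elements are all assumptions made for this example.

```python
def compose(p, q):
    """Return the permutation 'apply p first, then q'.

    Permutations are tuples where index i maps to p[i] (hypothetical encoding).
    """
    return tuple(q[p[i]] for i in range(len(p)))


def track_state(perms):
    """Fold a sequence of permutations into their running composition."""
    state = tuple(range(len(perms[0])))  # start from the identity permutation
    for p in perms:
        state = compose(state, p)  # the state must be updated at every step
    return state


swap01 = (1, 0, 2, 3, 4)  # swaps elements 0 and 1
cycle = (1, 2, 3, 4, 0)   # shifts every element by one position
result = track_state([swap01, cycle, swap01])
print(result)  # (2, 0, 3, 4, 1)
```

The sequential loop is trivial for a program that carries explicit state from step to step; the item above is about whether a model architecture can express that same step-by-step update over long inputs.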
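The needle-in-a-haystack setup from item 9 can be sketched roughly as follows. This is a hedged illustration of the general test shape, not the paper's harness: `build_haystack_prompt`, `recalled`, the filler text, and the prompt template are hypothetical, and no model is called here.

```python
def build_haystack_prompt(filler_sentences, needle, depth, question):
    """Place `needle` at a relative `depth` (0.0 = start, 1.0 = end) of the filler text."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    position = round(depth * len(filler_sentences))
    sentences = filler_sentences[:position] + [needle] + filler_sentences[position:]
    context = " ".join(sentences)
    # Hypothetical template; item 9 notes that small prompt changes can shift recall.
    return f"{context}\n\nQuestion: {question}\nAnswer:"


def recalled(response, expected):
    """Crude recall check: does the response contain the expected fact?"""
    return expected.lower() in response.lower()


filler = [f"Filler sentence number {i}." for i in range(10)]
needle = "The secret code is 7421."
prompt = build_haystack_prompt(filler, needle, 0.5, "What is the secret code?")
```

Sweeping `depth` and the number of filler sentences, then scoring each model response with a check like `recalled`, gives the kind of length-by-depth recall grid such tests typically report.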
