🥇Top ML Papers of the Week

The top ML Papers of the Week (Jan 30 - Feb 5)

In this issue, we cover the top ML Papers of the Week (Jan 30 - Feb 5).

1). REPLUG - a retrieval-augmented LM framework that adapts a retriever to a large-scale, black-box LM like GPT-3. (Paper)

2). Extracting Training Data from Diffusion Models - shows that diffusion-based generative models can memorize images from the training data and emit them at generation time. (Paper)

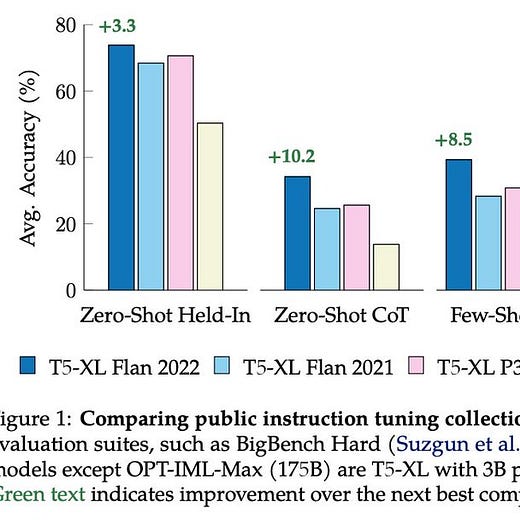

3). The FLAN Collection - releases a more extensive, publicly available collection of tasks, templates, and methods for advancing instruction-tuned models. (Paper)

4). Multimodal Chain-of-Thought Reasoning - incorporates vision features to elicit chain-of-thought reasoning in a multimodal setting, enabling the model to generate effective rationales that contribute to answer inference. (Paper)

5). Dreamix - a diffusion model that performs text-based motion and appearance editing of general videos. (Paper)

6). Benchmarking LLMs for news summarization. (Paper)

7). Mathematical Capabilities of ChatGPT - investigates the mathematical capabilities of ChatGPT on a new holistic benchmark called GHOSTS. (Paper)

8). Training ‘Blind’ Agents - trains an AI agent to navigate purely by feeling its way around, without vision, audio, or any other sensing, much as some animals do. (Paper)

9). SceneDreamer - a generative model that synthesizes large-scale 3D landscapes from random noise. (Paper)

10). LLMs and irrelevant context - finds that many prompting techniques fail on arithmetic reasoning problems when irrelevant context is included in the problem description. (Paper)
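REPLUG (item 1) is the most mechanism-heavy entry above. As we understand the paper, it prepends each of the top-k retrieved documents to the input separately, queries the frozen black-box LM once per document, and mixes the resulting output distributions with weights given by a softmax over retriever similarity scores. To adapt the retriever to the LM ("LM-supervised retrieval"), it trains the retriever so that its distribution over documents matches how much each document helps the LM predict the ground-truth continuation. The sketch below is a minimal, stdlib-only illustration under those assumptions; the function names, the list-based "distributions", and the temperatures `gamma` and `beta` are hypothetical stand-ins, not the authors' code.

```python
import math

def softmax(xs, temperature=1.0):
    # Numerically stable softmax with an optional temperature.
    m = max(x / temperature for x in xs)
    exps = [math.exp(x / temperature - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def replug_ensemble(sim_scores, doc_conditioned_probs):
    """Mix next-token distributions from k retrieved documents (hypothetical helper).

    sim_scores: retriever similarity s(d, x) for each top-k document.
    doc_conditioned_probs: for each document d, the black-box LM's
        distribution over a toy vocabulary given d prepended to x.
    Returns p(y | x) = sum_d lambda(d, x) * p(y | d + x),
    where lambda is a softmax over the similarity scores.
    """
    weights = softmax(sim_scores)
    vocab_size = len(doc_conditioned_probs[0])
    return [
        sum(w * probs[v] for w, probs in zip(weights, doc_conditioned_probs))
        for v in range(vocab_size)
    ]

def lsr_loss(sim_scores, lm_scores, gamma=1.0, beta=1.0):
    """Sketch of an LM-supervised retrieval loss, KL(P_R || Q_LM), for one query.

    P_R:  retrieval likelihood, softmax(s(d, x) / gamma).
    Q_LM: softmax(lm_score(d) / beta), where lm_score(d) is assumed to be the
          LM's score for the ground-truth continuation y given d and x.
    Only the retriever would be updated with this loss; the LM stays frozen.
    """
    p_r = softmax(sim_scores, gamma)
    q_lm = softmax(lm_scores, beta)
    return sum(p * math.log(p / q) for p, q in zip(p_r, q_lm))
```

For example, with similarity scores `[2.0, 0.0]` the first document receives roughly 88% of the weight, so `replug_ensemble` returns a distribution that stays close to that document's prediction, and `lsr_loss` is zero when the retriever's preferences already match the LM's.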

See you next week for another round of awesome ML papers!