🥇Top ML Papers of the Week

The top ML Papers of the Week (Feb 6 - Feb 12)

In this issue, we cover the top ML Papers of the Week (Feb 6 - Feb 12).

1). Toolformer - introduces language models that teach themselves to use external tools via simple API calls. (paper)

2). Describe, Explain, Plan, and Select - proposes using language models for open-world game playing. (paper)

3). A Categorical Archive of ChatGPT Failures - a comprehensive analysis of ChatGPT failures across categories such as reasoning, factual errors, maths, and coding. (paper)

4). Hard Prompts Made Easy - proposes learning hard text prompts through efficient gradient-based optimization. (paper)

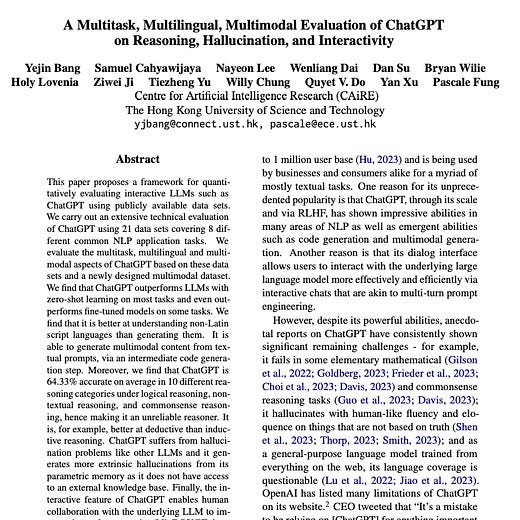

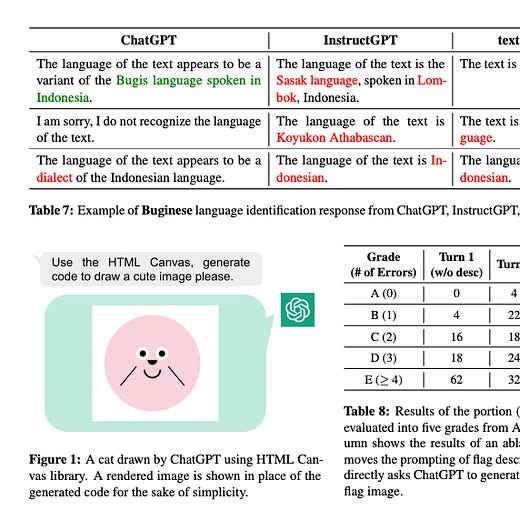

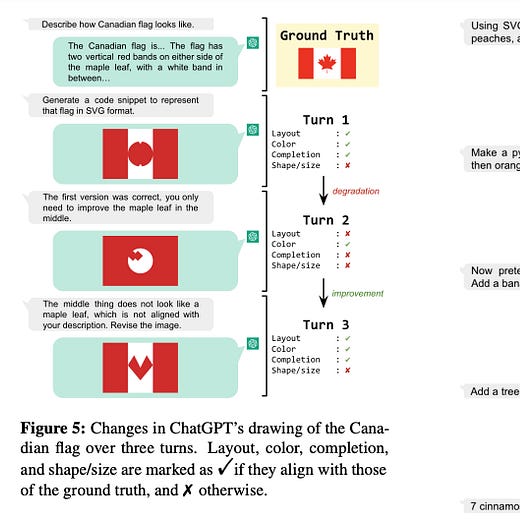

5). Data Selection for LMs - proposes a cheap and scalable data selection framework based on an importance resampling algorithm to improve the downstream performance of LMs. (paper)

6). Gen-1 - proposes an approach for structure and content-guided video synthesis with diffusion models. (paper)

7). Multitask, Multilingual, Multimodal Evaluation of ChatGPT - performs a more rigorous evaluation of ChatGPT on reasoning, hallucination, and interactivity. (paper)

8). Noise2Music - proposes diffusion models to generate high-quality 30-second music clips via text prompts. (paper)

9). Offsite-Tuning - introduces an efficient, privacy-preserving transfer learning framework to adapt foundation models to downstream data without access to the full model. (paper)

10). pix2pix-zero - proposes a model for zero-shot image-to-image translation. (paper)
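
For readers curious what the importance resampling in item 5 might look like in practice, here is a minimal sketch of the general idea, not the paper's implementation. It assumes simple add-one smoothed unigram models for the raw and target text and uses Gumbel top-k sampling to draw examples without replacement. All function names (`unigram_log_probs`, `log_importance_weight`, `importance_resample`) are hypothetical and chosen for illustration only.

```python
import math
import random
from collections import Counter


def unigram_log_probs(docs, vocab):
    """Add-one smoothed unigram log-probabilities over a fixed vocabulary."""
    counts = Counter(tok for doc in docs for tok in doc.split())
    total = sum(counts[t] for t in vocab) + len(vocab)
    return {t: math.log((counts[t] + 1) / total) for t in vocab}


def log_importance_weight(doc, target_lp, raw_lp):
    """log p_target(doc) - log p_raw(doc) under the two unigram models."""
    return sum(target_lp[t] - raw_lp[t] for t in doc.split())


def importance_resample(raw_docs, target_docs, k, seed=0):
    """Pick k raw docs without replacement, favouring high importance weight.

    Assumption: unigram features stand in for whatever features a real
    data selection pipeline would use.
    """
    rng = random.Random(seed)
    vocab = {t for d in list(raw_docs) + list(target_docs) for t in d.split()}
    target_lp = unigram_log_probs(target_docs, vocab)
    raw_lp = unigram_log_probs(raw_docs, vocab)

    # Gumbel top-k: perturb each log-weight with Gumbel noise and keep the
    # k largest, which samples without replacement in proportion to weight.
    keyed = []
    for doc in raw_docs:
        u = max(rng.random(), 1e-12)  # guard against log(0)
        gumbel = -math.log(-math.log(u))
        score = log_importance_weight(doc, target_lp, raw_lp) + gumbel
        keyed.append((score, doc))
    keyed.sort(reverse=True)
    return [doc for _, doc in keyed[:k]]


if __name__ == "__main__":
    raw = [
        "the cat sat",
        "gradient descent converges",
        "stock prices fell",
        "neural network training loss",
    ]
    target = ["neural network gradient", "training loss converges"]
    print(importance_resample(raw, target, k=2))
```

Raw documents that look more like the target text receive higher weights and are more likely to be selected, while the added noise keeps the selection stochastic rather than a hard top-k cut.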

See you next week for another round of awesome ML papers!