🥇Top ML Papers of the Week

The top ML Papers of the Week (Oct 30 - Nov 5)

1). MetNet-3 - a state-of-the-art neural weather model that extends both the lead time range and the variables that an observation-based model can predict well; learns from both dense and sparse data sensors and makes predictions up to 24 hours ahead for precipitation, wind, temperature, and dew point. (paper | tweet)

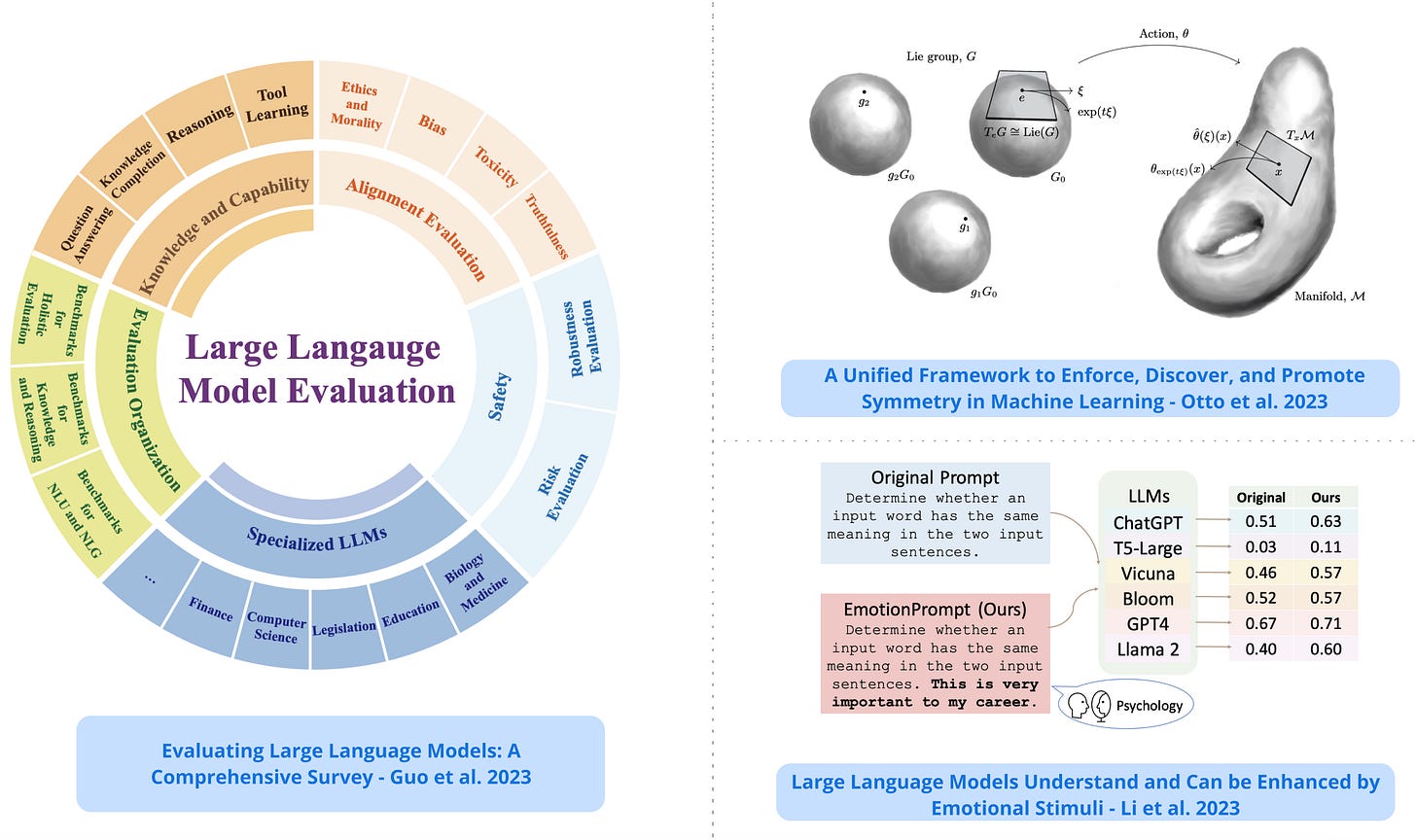

2). Evaluating LLMs - a comprehensive survey (100+ pages) on evaluating LLMs, including discussions about the different types of evaluations, datasets, techniques, and more. (paper | tweet)

3). Battle of the Backbones - a large benchmarking framework for a diverse suite of computer vision tasks; finds that while vision transformers (ViTs) and self-supervised learning (SSL) are increasingly popular, convolutional neural networks pretrained in a supervised fashion on large training sets still perform best on most tasks. (paper | tweet)

4). LLMs for Chip Design - proposes using LLMs for industrial chip design by leveraging domain adaptation techniques; evaluates different applications for chip design such as an assistant chatbot, electronic design automation, and bug summarization; domain adaptation significantly improves performance over general-purpose models on a variety of design tasks; using a domain-adapted LLM for RAG further improves answer quality. (paper | tweet)

5). Efficient Context Window Extension of LLMs - proposes a compute-efficient method for extending the context window of LLMs beyond the length they were pretrained on; extrapolates beyond the limited context of a fine-tuning dataset, and models have been reproduced up to 128K context length. (paper | tweet)

6). Open DAC 2023 - introduces a dataset consisting of more than 38M density functional theory (DFT) calculations on more than 8,800 metal-organic framework (MOF) materials containing adsorbed CO2 and/or H2O; properties relevant to direct air capture (DAC) are identified directly in the dataset; also trains state-of-the-art ML models on the dataset to approximate calculations at the DFT level; can serve as an important baseline for future efforts to identify MOFs for a wide range of applications, including DAC. (paper | tweet)

7). Symmetry in Machine Learning - presents a unified and methodological framework to enforce, discover, and promote symmetry in machine learning; also discusses how these ideas can be applied to ML models such as multilayer perceptrons and basis function regression. (paper | tweet)

8). Next Generation AlphaFold - reports progress on a new iteration of AlphaFold that greatly expands its range of applicability; shows capabilities of joint structure prediction of complexes including proteins, nucleic acids, small molecules, ions, and modified residues; demonstrates greater accuracy on protein-nucleic acid interactions than specialist predictors. (paper | tweet)

9). Enhancing LLMs by Emotion Stimuli - explores the ability of LLMs to understand emotional stimuli; conducts automatic experiments on 45 tasks using various LLMs, including Flan-T5-Large, Vicuna, Llama 2, BLOOM, ChatGPT, and GPT-4; the tasks span deterministic and generative applications that represent comprehensive evaluation scenarios; experimental results show that LLMs have a grasp of emotional intelligence. (paper | tweet)

10). FP8-LM - finds that when training LLMs in FP8, most variables, such as gradients and optimizer states, can use low-precision data formats without compromising model accuracy and without requiring changes to hyperparameters. (paper | tweet)