🥇Top ML Papers of the Week

The top ML Papers of the Week (August 21 - August 27)

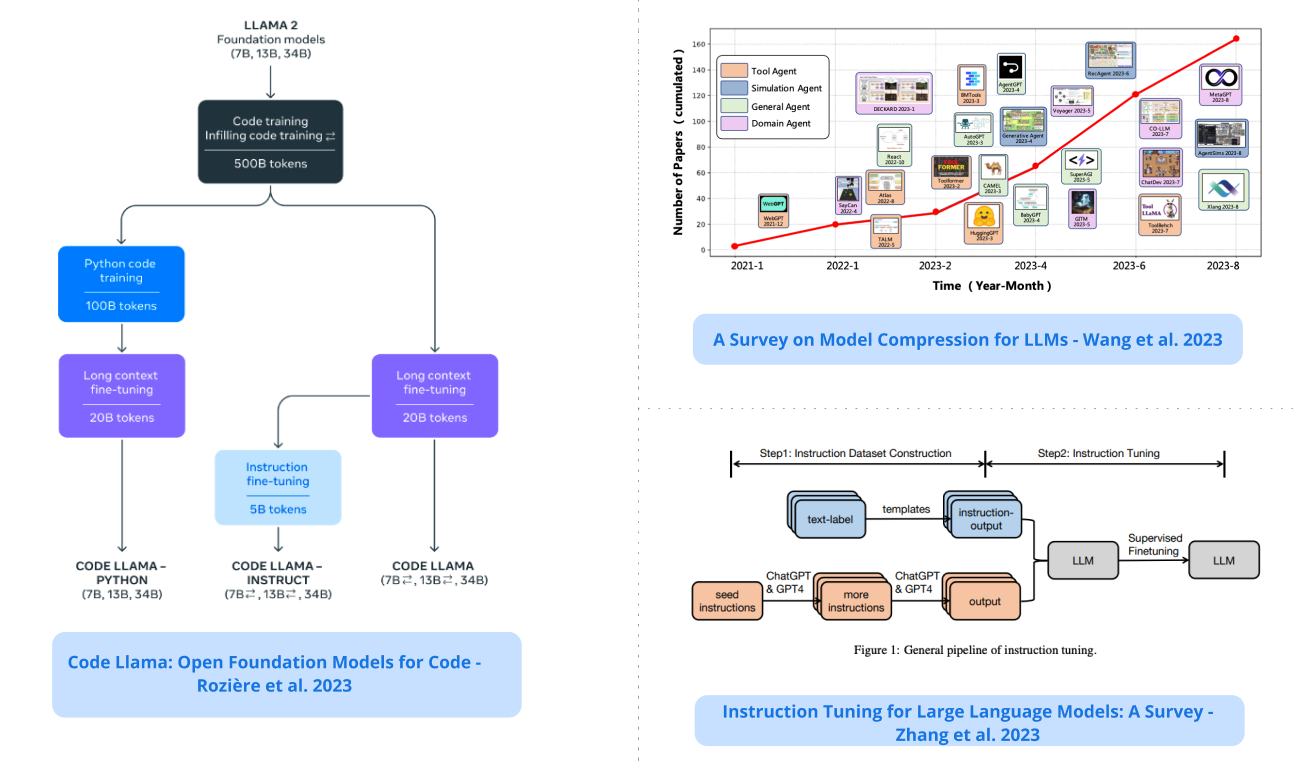

1). Code Llama - a family of LLMs for code based on Llama 2; the release includes foundation models (Code Llama), Python specializations (Code Llama - Python), and instruction-following models (Code Llama - Instruct), each in 7B, 13B, and 34B sizes. (paper | tweet)

2). Survey on Instruction Tuning for LLMs - a new survey of instruction tuning for LLMs, covering a systematic review of the literature, methodologies, dataset construction, model training, applications, and more. (paper | tweet)

3). SeamlessM4T - a unified multilingual and multimodal machine translation system that supports ASR, text-to-text translation, speech-to-text translation, text-to-speech translation, and speech-to-speech translation. (paper | tweet)

4). Use of LLMs for Illicit Purposes - provides an overview of existing efforts to identify and mitigate threats and vulnerabilities arising from LLMs; serves as a guide to building more reliable and robust LLM-powered systems. (paper | tweet)

5). Giraffe - a new family of models fine-tuned from base Llama and Llama 2 that extend the context length to 4K, 16K, and 32K; the paper explores techniques for expanding context length in LLMs and includes insights useful for practitioners and researchers. (paper | tweet)


6). IT3D - presents a strategy that leverages explicitly synthesized multi-view images to improve text-to-3D generation; integrates a discriminator along with a Diffusion-GAN dual training strategy to guide the training of the 3D models. (paper)

7). A Survey on LLM-based Autonomous Agents - presents a comprehensive survey of LLM-based autonomous agents; delivers a systematic review of the field and a summary of various applications of LLM-based AI agents in domains like social science and engineering. (paper | tweet)

8). Prompt2Model - a new framework that takes a natural-language prompt describing a task and uses it to train a small, special-purpose model suitable for deployment; the pipeline automatically collects and synthesizes knowledge through three channels: dataset retrieval, dataset generation, and model retrieval. (paper | tweet)

9). LegalBench - a collaboratively constructed benchmark for measuring legal reasoning in LLMs; it consists of 162 tasks covering 6 different types of legal reasoning. (paper | tweet)

10). Language to Rewards for Robotic Skill Synthesis - proposes a new language-to-reward system that utilizes LLMs to define optimizable reward parameters to achieve a variety of robotic tasks; the method is evaluated on a real robot arm where complex manipulation skills such as non-prehensile pushing emerge. (paper | tweet)
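The core idea in item 10 is a split of responsibilities: the LLM writes down reward parameters, and a separate optimizer finds actions that maximize the resulting reward. The sketch below illustrates that split in a toy 2-D pushing setting. Everything in it is a hypothetical stand-in chosen for illustration: `RewardParams` and its fields, the one-step dynamics in `simulate`, and the grid search in `optimize_action`. In the actual system the parameters come from an LLM and a real low-level controller does the optimization.

```python
import math
from dataclasses import dataclass


@dataclass
class RewardParams:
    """Hypothetical reward parameters an LLM might emit for an instruction
    like "push the cube to (0.5, 0.2)". Field names are illustrative only."""
    target_x: float
    target_y: float
    distance_weight: float = 1.0
    effort_weight: float = 0.1


def reward(params: RewardParams, obj_pos, action) -> float:
    """Negative weighted squared distance to the target, minus an effort penalty."""
    dx = obj_pos[0] - params.target_x
    dy = obj_pos[1] - params.target_y
    effort = action[0] ** 2 + action[1] ** 2
    return -params.distance_weight * (dx * dx + dy * dy) - params.effort_weight * effort


def simulate(obj_pos, action):
    """Toy dynamics (assumption): a push moves the object by the action vector."""
    return (obj_pos[0] + action[0], obj_pos[1] + action[1])


def optimize_action(params: RewardParams, obj_pos, step: float = 0.05, max_push: float = 1.0):
    """Exhaustive grid search over 2-D pushes; a stand-in for a real optimizer."""
    n = int(round(max_push / step))
    best_action, best_r = (0.0, 0.0), -math.inf
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            a = (i * step, j * step)
            r = reward(params, simulate(obj_pos, a), a)
            if r > best_r:  # keep the highest-reward push seen so far
                best_action, best_r = a, r
    return best_action, best_r
```

For example, `optimize_action(RewardParams(0.5, 0.2), (0.0, 0.0))` selects a push of roughly (0.45, 0.2): the effort penalty pulls the x component slightly short of the target. Changing only the parameters, not the optimizer, changes the behavior, which is the property the language-to-reward approach relies on.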
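Item 5 (Giraffe) concerns extending a model's context length beyond what it was trained on. The paper compares several ways of modifying the positional encoding; the sketch below shows only one widely used baseline, linear position interpolation applied to rotary position embeddings (RoPE), and is not presented as Giraffe's own method. The function names, the adjacent-pair rotation layout, and the `scale` parameter are assumptions for illustration.

```python
import math


def rope_angles(position: int, dim: int, base: float = 10000.0, scale: float = 1.0):
    """Rotation angle for each of the dim/2 channel pairs at one position.
    scale > 1 applies linear position interpolation: position is divided by scale,
    so a longer sequence is squeezed back into the trained position range."""
    pos = position / scale
    return [pos / (base ** (2 * i / dim)) for i in range(dim // 2)]


def apply_rope(vec, position: int, base: float = 10000.0, scale: float = 1.0):
    """Rotate each adjacent pair (vec[2i], vec[2i+1]) by its RoPE angle."""
    dim = len(vec)
    if dim % 2 != 0:
        raise ValueError("RoPE needs an even embedding dimension")
    out = []
    for i, theta in enumerate(rope_angles(position, dim, base, scale)):
        x0, x1 = vec[2 * i], vec[2 * i + 1]
        c, s = math.cos(theta), math.sin(theta)
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out
```

For instance, a model trained on 4K positions and stretched to 16K would use `scale=4.0`, so position 16000 receives the same angles as position 4000 did during training. Because each pair is only rotated, vector norms are preserved; some fine-tuning at the new length is typically still needed.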

Reach out to hello@dair.ai if you would like to sponsor the next issue of the newsletter. We can help promote your AI tool, research, or company to ~10K AI researchers and practitioners.