🥇Top ML Papers of the Week

The top ML Papers of the Week (May 29 - Jun 4)

1). Let’s Verify Step by Step - achieves state-of-the-art mathematical problem solving by rewarding each correct step of reasoning in a chain-of-thought instead of rewarding the final answer; the model solves 78% of problems from a representative subset of the MATH test set. (paper | tweet)

2). No Positional Encodings - shows that explicit position embeddings are not essential for decoder-only Transformers, and that other positional encoding methods such as ALiBi and Rotary are not well suited to length generalization. (paper | tweet)

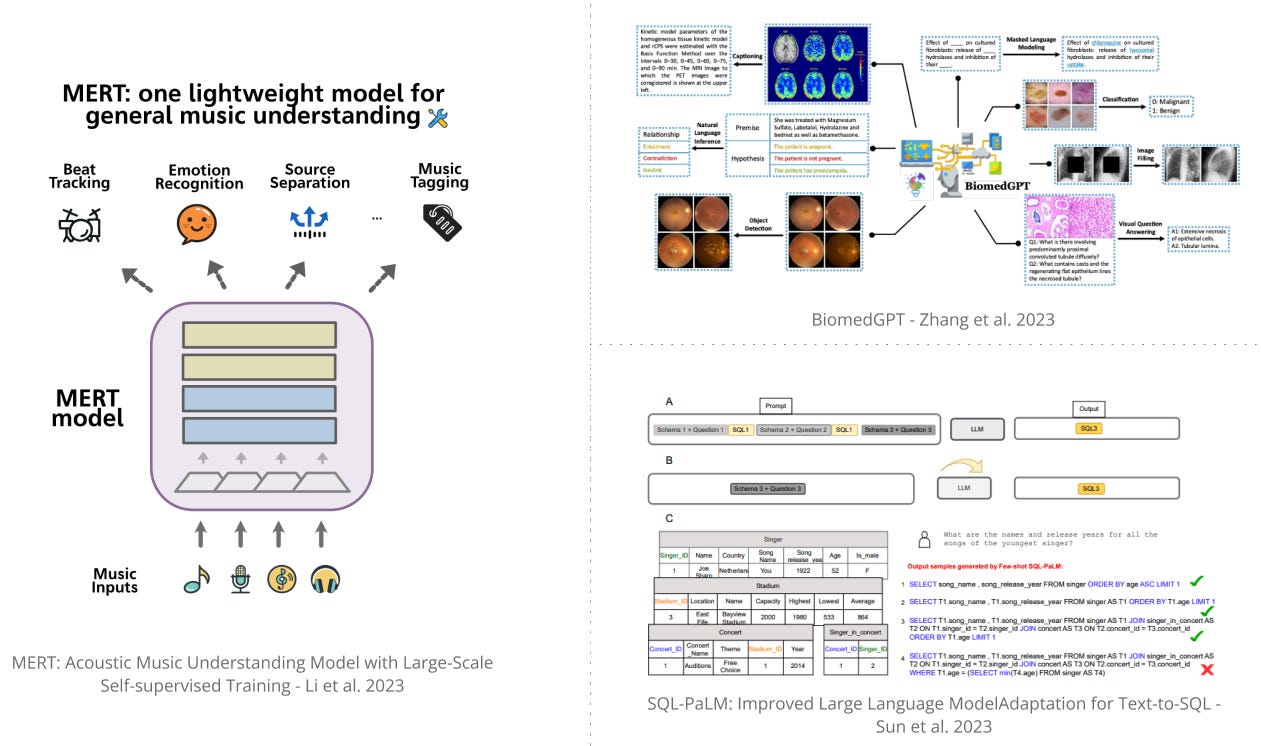

3). BiomedGPT - a unified biomedical generative pretrained transformer model for vision, language, and multimodal tasks. Achieves state-of-the-art performance across 5 distinct tasks with 20 public datasets spanning over 15 unique biomedical modalities. (paper | tweet)

4). Thought Cloning - introduces an imitation learning framework to learn to think while acting; the idea is not only to clone the behaviors of human demonstrators but also the thoughts humans have when performing behaviors. (paper | tweet)

5). Fine-Tuning Language Models with Just Forward Passes - proposes a memory-efficient zeroth-order optimizer and a corresponding SGD algorithm to finetune large LMs with the same memory footprint as inference. (paper | tweet)

6). MERT - an acoustic music understanding model with large-scale self-supervised training; it incorporates a superior combination of teacher models to outperform conventional speech and audio approaches. (paper | tweet)

7). Bytes Are All You Need - investigates performing classification directly on file bytes, without needing to decode files at inference time; achieves ImageNet Top-1 accuracy of 77.33% using a transformer backbone; achieves 95.42% accuracy when operating on WAV files from the Speech Commands v2 dataset. (paper | tweet)

8). Direct Preference Optimization - while helpful to train safe and useful LLMs, the RLHF process can be complex and often unstable; this work proposes an approach to finetune LMs by solving a classification problem on the human preferences data, with no RL required. (paper | tweet)

9). SQL-PaLM - an LLM-based Text-to-SQL model adapted from PaLM-2; achieves SoTA in both in-context learning and fine-tuning settings; the few-shot model outperforms the previous fine-tuned SoTA by 3.8% on the Spider benchmark; few-shot SQL-PaLM also outperforms few-shot GPT-4 by 9.9%, using a simple prompting approach. (paper | tweet)

10). CodeTF - an open-source Transformer library for state-of-the-art code LLMs; supports pretrained code LLMs and popular code benchmarks, including standard methods to train and serve code LLMs efficiently. (paper | tweet)

CodeTF has really piqued my interest.
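For readers curious how item 8 (Direct Preference Optimization) turns preference tuning into a classification problem, here is a minimal sketch of the per-pair loss as it is commonly written. It is an illustration and not the authors' code: the function name `dpo_loss`, its argument names, and the default `beta=0.1` are assumptions made for this example. The inputs are summed log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses under the model being tuned and under a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO-style loss (illustrative sketch, names are hypothetical).

    Each argument is the summed log-probability of a full response.
    beta scales how strongly the policy is pushed away from the reference.
    """
    # How much more the policy favors each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp

    # Binary-classification logit: positive when the policy ranks the
    # chosen response above the rejected one, relative to the reference.
    logit = beta * (chosen_margin - rejected_margin)

    # Logistic loss -log(sigmoid(logit)), written in a numerically stable form.
    if logit >= 0:
        return math.log1p(math.exp(-logit))
    return -logit + math.log1p(math.exp(logit))


if __name__ == "__main__":
    # Policy agrees with the human preference more than the reference does.
    print(dpo_loss(-5.0, -9.0, -6.0, -8.0))
    # Policy disagrees with the human preference.
    print(dpo_loss(-9.0, -5.0, -8.0, -6.0))
```

The loss is an ordinary logistic loss over preference pairs, which is why no reward model or RL loop appears in the sketch; in practice it would be averaged over a batch and minimized with a standard optimizer.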