🥇Top ML Papers of the Week

The Top ML Papers of the Week (January 6 - 12)

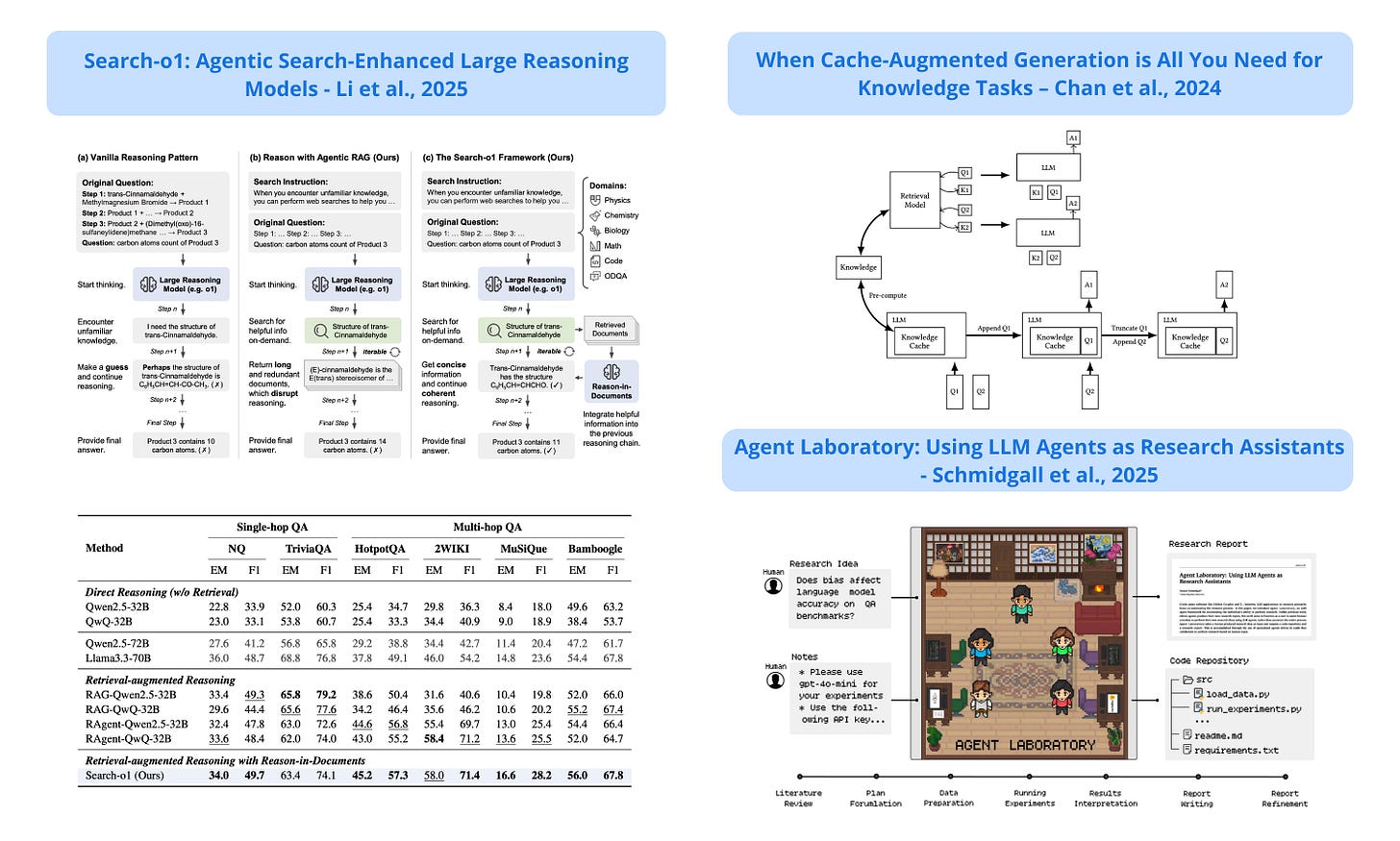

1). Cache-Augmented Generation (CAG) - an approach that aims to leverage the capabilities of long-context LLMs by preloading the LLM with all relevant docs in advance and precomputing the key-value (KV) cache; the preloaded context helps the model to provide contextually accurate answers without the need for additional retrieval during runtime; the authors suggest that CAG is a useful alternative to RAG for cases where the documents/knowledge for retrieval are of limited, manageable size. (paper | tweet)
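To make the "precompute the KV cache once, skip retrieval at query time" idea concrete, here is a minimal toy sketch. It is not the authors' implementation: the single-layer attention, the `embed` function, and the `PreloadedContext` class are all hypothetical stand-ins chosen so the example runs without any ML library. It only illustrates that keys and values for the preloaded documents can be computed once and reused for every query, giving the same result as re-encoding the documents each time.

```python
import math

def embed(token, dim=4):
    # Deterministic toy embedding (an assumption for illustration only).
    seed = sum(ord(c) for c in token)
    return [math.sin((i + 1) * seed) for i in range(dim)]

def project(tokens):
    # Toy key/value projections: keys are the embeddings, values are scaled copies.
    keys = [embed(t) for t in tokens]
    values = [[2.0 * x for x in k] for k in keys]
    return keys, values

def attend(query_vec, keys, values):
    # Single-head softmax attention of one query vector over all keys.
    scores = [sum(q * k for q, k in zip(query_vec, key)) for key in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]

class PreloadedContext:
    """Hypothetical helper: holds the document KV cache computed up front."""

    def __init__(self, doc_tokens):
        # Done once, before any query arrives.
        self.keys, self.values = project(doc_tokens)

    def answer_vector(self, query_tokens):
        # Only the query tokens are projected at query time.
        q_keys, q_values = project(query_tokens)
        keys = self.keys + q_keys
        values = self.values + q_values
        return attend(embed(query_tokens[-1]), keys, values)

def answer_without_cache(doc_tokens, query_tokens):
    # Baseline: re-encode documents and query together on every call.
    keys, values = project(doc_tokens + query_tokens)
    return attend(embed(query_tokens[-1]), keys, values)

docs = ["cag", "preloads", "all", "relevant", "documents"]
context = PreloadedContext(docs)          # one-time preload
cached = context.answer_vector(["what", "is", "cag"])
fresh = answer_without_cache(docs, ["what", "is", "cag"])
print(all(math.isclose(a, b) for a, b in zip(cached, fresh)))
```

In a real long-context LLM the cache holds per-layer key and value tensors, and the saving is the cost of re-running the model over the documents for each query; the toy above keeps only that reuse pattern.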

2). Agent Laboratory - an approach that leverages LLM agents capable of completing the entire research process; the main findings are: 1) agents driven by o1-preview resulted in the best research outcomes, 2) generated machine learning code can achieve state-of-the-art performance compared to existing methods, 3) human feedback further improves the quality of research, and 4) Agent Laboratory significantly reduces research expenses. (paper | tweet)

Editor Message

Learn how to build with AI agents for various use cases in our new AI Agents course. Use code AGENT20 for a 20% discount.

3). Long Context vs. RAG for LLMs - performs a comprehensive evaluation of long context (LC) LLMs compared to RAG systems; the three main findings are: 1) LC generally outperforms RAG in question-answering benchmarks, 2) summarization-based retrieval performs comparably to LC, while chunk-based retrieval lags behind, and 3) RAG has advantages in dialogue-based and general question queries. (paper | tweet)

4). Search-o1 - a framework that combines large reasoning models (LRMs) with agentic search and document refinement capabilities to tackle knowledge insufficiency; the framework enables autonomous knowledge retrieval during reasoning and demonstrates strong performance across complex tasks, outperforming both baseline models and human experts. (paper | tweet)

5). Towards System 2 Reasoning - proposes Meta Chain-of-Thought (Meta-CoT), which extends traditional Chain-of-Thought (CoT) by modeling the underlying reasoning required to arrive at a particular CoT; the main argument is that CoT is naive and Meta-CoT gets closer to the cognitive process required for advanced problem-solving. (paper | tweet)

6). rStar-Math - a new approach with three core components to enhance math reasoning: 1) a code-augmented CoT data synthesis method that uses Monte Carlo Tree Search (MCTS) to generate step-by-step verified reasoning trajectories, which are used to train the policy small language model (SLM), 2) an SLM-based process reward model, the process preference model (PPM), that reliably predicts a reward label for each math reasoning step, and 3) a self-evolution recipe where the policy SLM and PPM are iteratively evolved to improve math reasoning; on the MATH benchmark, rStar-Math improves Qwen2.5-Math-7B from 58.8% to 90.0% and Phi3-mini-3.8B from 41.4% to 86.4%, surpassing o1-preview by 4.5 and 0.9 percentage points, respectively. (paper | tweet)
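The reported rStar-Math numbers can be sanity-checked with a little arithmetic. The sketch below uses only the figures quoted above and assumes the margins over o1-preview are absolute percentage points; the `summarize` helper and the dictionary layout are illustrative names, not anything from the paper.

```python
# MATH benchmark accuracies (%) as quoted in the summary above.
results = {
    "Qwen2.5-Math-7B": {"before": 58.8, "after": 90.0, "margin_over_o1_preview": 4.5},
    "Phi3-mini-3.8B": {"before": 41.4, "after": 86.4, "margin_over_o1_preview": 0.9},
}

def summarize(results):
    """Return the absolute gain and the o1-preview score implied by each margin."""
    summary = {}
    for name, r in results.items():
        summary[name] = {
            # Improvement in percentage points from the base model.
            "gain_points": round(r["after"] - r["before"], 1),
            # Assumption: the margin is an absolute difference in accuracy.
            "implied_o1_preview": round(r["after"] - r["margin_over_o1_preview"], 1),
        }
    return summary

for name, row in summarize(results).items():
    print(name, row)
```

Under that assumption both models imply the same o1-preview baseline of 85.5%, so the two margins are mutually consistent, and the gains work out to 31.2 and 45.0 points respectively.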

7). Cosmos World Foundation Model - a framework for training Physical AI systems in digital environments before real-world deployment; the platform includes pre-trained world foundation models that act as digital twins of the physical world, allowing AI systems to safely learn and interact without risking damage to physical hardware; these models can be fine-tuned for specific applications like camera control, robotic manipulation, and autonomous driving. (paper | tweet)

8). Process Reinforcement through Implicit Rewards (PRIME) - a framework for online reinforcement learning that uses process rewards to improve language model reasoning; the proposed algorithm combines online prompt filtering, RLOO return/advantage estimation, a PPO loss, and online updates of the implicit process reward model; the resulting model, Eurus-2-7B-PRIME, achieves 26.7% pass@1 on AIME 2024, surpassing GPT-4 and other models, while using only 1/10 of the training data of similar models. (paper | tweet)
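Of the ingredients listed above, RLOO (REINFORCE leave-one-out) advantage estimation is the easiest to show in a few lines. The sketch below is the generic leave-one-out baseline, not the authors' training code: for several sampled responses to the same prompt, each response's advantage is its reward minus the mean reward of the other samples. The function name and the example rewards are made up for illustration.

```python
def rloo_advantages(rewards):
    """Leave-one-out advantages for k sampled responses to a single prompt.

    Each sample's baseline is the mean reward of the other k - 1 samples,
    so no separate value network is needed.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("RLOO needs at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]

# Hypothetical rewards for four sampled answers (1.0 = correct, 0.0 = wrong).
advantages = rloo_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)
```

Correct answers receive positive advantages and incorrect ones negative, and the advantages sum to zero within a prompt, which keeps the gradient signal centered without a learned baseline.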

9). Can LLMs Design Good Questions? - systematically evaluates the quality of questions generated with LLMs; the main findings are: 1) both LLaMA and GPT models show a strong preference for asking about specific facts and figures, 2) question lengths tend to be around 20 words, though different LLMs exhibit distinct length preferences, 3) LLM-generated questions typically require significantly longer answers, and 4) human-generated questions tend to concentrate on the beginning of the context, while LLM-generated questions exhibit a more balanced distribution, with a slight decrease in focus at both ends. (paper | tweet)

10). A Survey on LLMs - a new survey on LLMs including some insights on capabilities and limitations. (paper | tweet)