🥇Top AI Papers of the Week: o3-mini, Qwen2.5-1M, TensorLLM, TokenVerse, Diverse Preference Optimization

The Top AI Papers of the Week (January 27 - February 2)

1). o3-mini

OpenAI has launched o3-mini, its newest cost-efficient reasoning model, available in ChatGPT and the API. The model excels at STEM tasks, particularly science, math, and coding, while maintaining the low cost and reduced latency of its predecessor, o1-mini. It introduces key developer features such as function calling, Structured Outputs, and developer messages, making it production-ready from launch.

o3-mini includes different reasoning effort levels (low, medium, and high) and improves performance across a wide range of tasks. It delivered responses 24% faster than o1-mini and achieved notable results in competition math, PhD-level science questions, and software engineering tasks.
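As a rough illustration of the reasoning effort levels and developer messages mentioned above, the sketch below only builds a request payload and does not call any API. The helper name `build_o3_mini_request` and the default instruction text are hypothetical; the `reasoning_effort` field and the `developer` role follow the Chat Completions API as commonly documented, but treat the exact shape as an assumption and check the current API reference before relying on it.

```python
VALID_EFFORTS = ("low", "medium", "high")


def build_o3_mini_request(question, effort="medium",
                          instructions="You are a concise STEM tutor."):
    """Build a Chat Completions style payload for o3-mini (hypothetical helper)."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        # Assumed parameter name for selecting the reasoning effort level.
        "reasoning_effort": effort,
        "messages": [
            # Developer messages carry instructions for the model.
            {"role": "developer", "content": instructions},
            {"role": "user", "content": question},
        ],
    }


payload = build_o3_mini_request("Prove that the sum of two odd numbers is even.",
                                effort="high")
print(payload["model"], payload["reasoning_effort"])

# With the official `openai` package installed and an API key configured,
# the payload could presumably be sent like this (not executed here):
#   from openai import OpenAI
#   response = OpenAI().chat.completions.create(**payload)
```

Higher effort levels are expected to trade latency and cost for more thorough reasoning, so a lower setting is probably the sensible default for simple queries.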

System Card | Blog | Tweet

2). Qwen2.5-1M

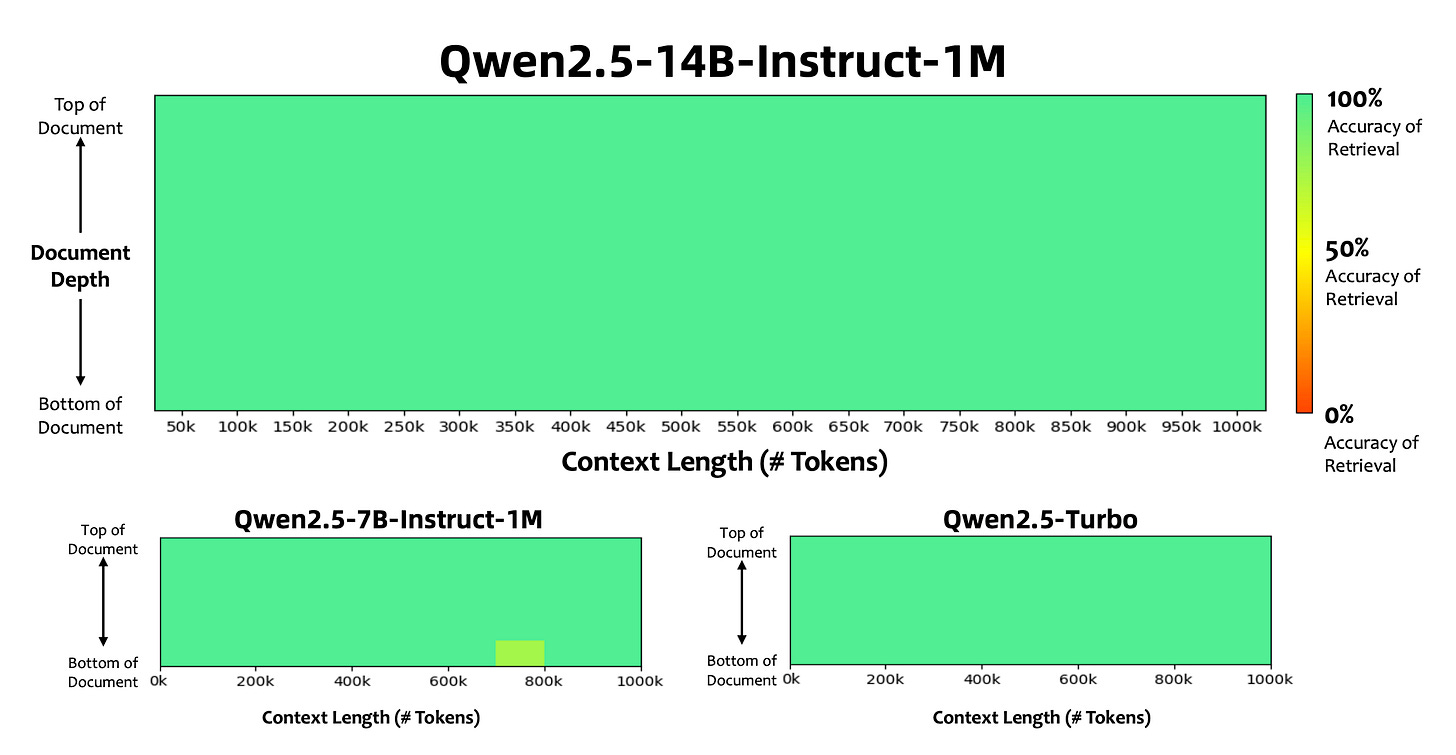

Qwen releases two open-source LLMs, Qwen2.5-7B-Instruct-1M and Qwen2.5-14B-Instruct-1M, that can handle context lengths of up to 1 million tokens.

The models are built on a progressive training approach, starting with 4K tokens and gradually increasing to 256K tokens, then using length extrapolation techniques to reach 1M tokens. They've also released an inference framework based on vLLM that processes long inputs 3-7x faster through sparse attention methods.

The models show strong performance on both long-context and short-text tasks. The 14B model outperforms GPT-4o-mini across multiple long-context datasets while maintaining similar performance on shorter tasks.

Paper | Models | Qwen Chat App | Tweet

3). Janus-Pro

An enhanced version of the previous Janus model for multimodal understanding and generation. The model incorporates three key improvements: optimized training strategies with longer initial training and focused fine-tuning, expanded training data including 90 million new samples for understanding and 72 million synthetic aesthetic samples for generation, and scaling to larger model sizes up to 7B parameters.

Janus-Pro achieves significant improvements in both multimodal understanding and text-to-image generation capabilities. The model outperforms existing solutions on various benchmarks, scoring 79.2 on MMBench for understanding tasks and achieving 80% accuracy on GenEval for text-to-image generation. The improvements also enhance image generation stability and quality, particularly for short prompts and fine details, though the current 384x384 resolution remains a limitation for certain tasks.

4). On the Underthinking of o1-like LLMs

This work looks more closely at the "thinking" patterns of o1-like LLMs. Several recent papers have pointed out problems with overthinking; this one identifies the opposite phenomenon, underthinking.

The authors find that o1-like LLMs frequently switch between different reasoning thoughts without sufficiently exploring promising paths to reach a correct solution.

5). Diverse Preference Optimization

Introduces Diverse Preference Optimization (DivPO), a novel training method that aims to address the lack of diversity in language model outputs while maintaining response quality. The key challenge is that current preference optimization techniques like RLHF tend to sharpen the output probability distribution, causing models to generate very similar responses. This is particularly problematic for creative tasks where varied outputs are desired.

DivPO works by modifying how training pairs are selected during preference optimization. Rather than simply choosing the highest and lowest rewarded responses, DivPO selects the most diverse response that meets a quality threshold and contrasts it with the least diverse response below a threshold. The method introduces a diversity criterion that can be measured in different ways, including model probability, word frequency, or using an LLM as a judge. Experiments on persona generation and creative writing tasks show that DivPO achieves up to 45.6% more diverse outputs in structured tasks and an 81% increase in story diversity, while maintaining similar quality levels compared to baseline methods.
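The pair selection described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Candidate` class, the `select_divpo_pair` and `frequency_diversity` helpers, and the choice of inverse response frequency as the diversity criterion are all hypothetical, and a single threshold is used for both sides of the pair.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    reward: float
    diversity: float = 0.0


def frequency_diversity(candidates):
    """Score each response by inverse frequency in the pool (assumed criterion)."""
    counts = Counter(c.text for c in candidates)
    for c in candidates:
        c.diversity = 1.0 / counts[c.text]
    return candidates


def select_divpo_pair(candidates, quality_threshold):
    """Return (chosen, rejected) or None if either side of the threshold is empty."""
    above = [c for c in candidates if c.reward >= quality_threshold]
    below = [c for c in candidates if c.reward < quality_threshold]
    if not above or not below:
        return None
    chosen = max(above, key=lambda c: c.diversity)    # most diverse, good enough
    rejected = min(below, key=lambda c: c.diversity)  # least diverse, low quality
    return chosen, rejected


pool = frequency_diversity([
    Candidate("Alice", 0.90),
    Candidate("Alice", 0.80),
    Candidate("Zuri", 0.85),
    Candidate("Alice", 0.30),
    Candidate("Bob", 0.20),
])
chosen, rejected = select_divpo_pair(pool, quality_threshold=0.5)
print(chosen.text, rejected.text)
```

In this toy pool the rare but acceptable response becomes the preferred example, while the common low-reward response becomes the rejected one, which is the contrast that pushes the model away from collapsing onto a single answer.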

6). Usage Recommendation for DeepSeek-R1

This work provides a set of recommendations for how to prompt the DeepSeek-R1 model. Below are the key guidelines:

Prompt Engineering:

- Use clear, structured prompts with explicit instructions
- Avoid few-shot prompting; use zero-shot instead

Output Formatting:

- Specify the desired format (JSON, tables, markdown)
- Request step-by-step explanations for reasoning tasks

Language:

- Explicitly specify input/output language to prevent mixing

The paper also summarizes when to use the different model variants, when to fine-tune, and other safety considerations.
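The guidelines above can be folded into a small prompt template. The sketch below is one possible reading of them, assuming a hypothetical helper `build_r1_prompt`; the exact wording of each instruction is illustrative and does not come from the paper.

```python
def build_r1_prompt(task, output_format="JSON", language="English",
                    step_by_step=True):
    """Assemble a zero-shot prompt with explicit format and language instructions."""
    lines = [
        f"Task: {task.strip()}",
        # State the language explicitly to reduce the chance of mixed-language output.
        f"Respond in {language} only.",
        # Name the desired output format up front.
        f"Format the final answer as {output_format}.",
    ]
    if step_by_step:
        lines.append("Explain your reasoning step by step before giving the final answer.")
    # No worked examples are included: the prompt stays zero-shot on purpose.
    return "\n".join(lines)


print(build_r1_prompt("List the prime numbers below 20.", output_format="a markdown table"))
```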

7). Docling

Docling is an open-source toolkit that can parse several types of popular document formats into a unified, richly structured representation.

8). Improving RAG through Multi-Agent RL

This work treats RAG as a multi-agent cooperative task to improve answer generation quality. It models RAG components like query rewriting, document selection, and answer generation as reinforcement learning agents working together toward generating accurate answers. It applies Multi-Agent Proximal Policy Optimization (MAPPO) to jointly optimize all agents with a shared reward based on answer quality.
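To make the shared-reward idea concrete, the sketch below scores a final answer once and hands the same reward to every agent in the pipeline. It is only an illustration: token-level F1 is assumed here as the answer-quality measure, the agent names are hypothetical labels, and the MAPPO policy update itself is omitted.

```python
from collections import Counter

# Hypothetical labels for the three RAG components treated as agents.
AGENTS = ("query_rewriter", "document_selector", "answer_generator")


def token_f1(prediction, reference):
    """Token-level F1 between a predicted and a reference answer."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        return 0.0
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def shared_rewards(prediction, reference):
    """Give every agent the same reward, derived from the final answer only."""
    reward = token_f1(prediction, reference)
    return {agent: reward for agent in AGENTS}


print(shared_rewards("the capital is Paris", "Paris"))
```

Because every agent receives the same signal, an upstream component such as the query rewriter is only rewarded when its output helps the final answer, which is the cooperative framing the paper describes.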

Besides improvements on popular benchmarks, the framework shows strong generalization capabilities in out-of-domain scenarios and maintains effectiveness across different RAG system configurations.

9). TensorLLM

Proposes a framework that compresses multi-head attention (MHA) weights through a multi-head tensorisation process followed by Tucker decomposition. It achieves a compression rate of up to ~250x in the MHA weights without requiring any additional data, training, or fine-tuning.
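For readers unfamiliar with Tucker decomposition, the sketch below applies a truncated higher-order SVD (one standard way to compute a Tucker approximation) to a small 3D tensor using NumPy. It is not the paper's procedure: the tensor layout `(d_model, d_head, num_heads)`, the chosen ranks, and the helper names are assumptions made purely for illustration.

```python
import numpy as np


def unfold(tensor, mode):
    """Matricize a tensor along one mode: shape (I_mode, product of the rest)."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def mode_product(tensor, matrix, mode):
    """Multiply a tensor by a matrix along the given mode."""
    moved = np.moveaxis(tensor, mode, 0)
    result = np.tensordot(matrix, moved, axes=(1, 0))
    return np.moveaxis(result, 0, mode)


def truncated_hosvd(tensor, ranks):
    """Return a Tucker core and one factor matrix per mode."""
    factors = []
    for mode, rank in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u[:, :rank])  # keep the leading left singular vectors
    core = tensor
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)  # project onto each factor basis
    return core, factors


def reconstruct(core, factors):
    """Expand a Tucker core back to the full tensor."""
    out = core
    for mode, u in enumerate(factors):
        out = mode_product(out, u, mode)
    return out


rng = np.random.default_rng(0)
weights = rng.standard_normal((8, 6, 4))  # assumed (d_model, d_head, num_heads)
core, factors = truncated_hosvd(weights, ranks=(4, 3, 2))
stored = core.size + sum(f.size for f in factors)
print("compression ratio:", weights.size / stored)
print("relative error:",
      np.linalg.norm(weights - reconstruct(core, factors)) / np.linalg.norm(weights))
```

On random weights the approximation error is large; the compression is only useful when the real weights have low-rank structure along these modes.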

10). TokenVerse

Proposes a technique for generating new images that combine learned concepts in a desired configuration. Developed by Google DeepMind and collaborators, TokenVerse enables multi-concept personalization by leveraging a pre-trained text-to-image diffusion model to disentangle and extract complex visual concepts from multiple images.

It operates in the modulation space of diffusion transformers (DiTs), learning a personalized modulation vector for each text token in an input caption. This allows flexible and localized control over distinct concepts such as objects, materials, lighting, and poses. The learned token modulations can then be combined in novel ways to generate new images that integrate multiple personalized concepts without requiring additional segmentation masks.