🥇Top AI Papers of the Week

The Top AI Papers of the Week (April 28 - May 4)

1. Phi-4-Mini-Reasoning

Microsoft released Phi-4-Mini-Reasoning to explore small reasoning language models for math. Highlights:

Phi-4-Mini-Reasoning: The paper introduces Phi-4-Mini-Reasoning, a 3.8B parameter small language model (SLM) that achieves state-of-the-art mathematical reasoning performance, rivaling or outperforming models nearly twice its size.

Unlocking Reasoning: They use a systematic, multi-stage training pipeline to unlock strong reasoning capabilities in compact models, addressing the challenges posed by their limited capacity. The pipeline combines large-scale distillation, preference learning, and RL with verifiable rewards.

Four-Stage Training Pipeline: The model is trained using (1) mid-training with large-scale long CoT data, (2) supervised fine-tuning on high-quality CoT data, (3) rollout-based Direct Preference Optimization (DPO), and (4) RL using verifiable reward signals.

Math Performance: On MATH-500, Phi-4-Mini-Reasoning reaches 94.6%, surpassing DeepSeek-R1-Distill-Qwen-7B (91.4%) and DeepSeek-R1-Distill-Llama-8B (86.9%), despite being smaller.

Verifiable Reward Reinforcement Learning: The final RL stage, tailored for small models, includes prompt filtering, oversampling for balanced training signals, and temperature annealing. This improves training stability and aligns exploration with evaluation conditions.
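The three small-model adjustments can be sketched in Python. This is a minimal illustration under our own assumptions, not Microsoft's implementation. The reward is a binary exact-match check on a final `\boxed{...}` answer (an assumed format). Prompt filtering drops prompts whose rollouts are all correct or all wrong, since those give no contrastive signal. Oversampling is modeled as drawing extra rollouts and keeping equal numbers of correct and incorrect ones. Temperature is annealed linearly. All function names and the 1.0 to 0.6 schedule are hypothetical.

```python
from __future__ import annotations

import re
from typing import Optional

def extract_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} span (an assumed answer format)."""
    matches = re.findall(r"\\boxed\{([^}]*)\}", completion)
    return matches[-1].strip() if matches else None

def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted answer matches the reference exactly."""
    answer = extract_answer(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0

def filter_prompts(rewards_per_prompt: dict[str, list[float]]) -> list[str]:
    """Keep prompts with mixed outcomes; all-correct or all-wrong groups carry no contrast."""
    return [p for p, rs in rewards_per_prompt.items() if 0.0 < sum(rs) / len(rs) < 1.0]

def balanced_subset(rollouts: list[tuple[str, float]], per_class: int) -> list[tuple[str, float]]:
    """From an oversampled pool, keep up to `per_class` correct and incorrect rollouts each."""
    correct = [r for r in rollouts if r[1] == 1.0][:per_class]
    wrong = [r for r in rollouts if r[1] == 0.0][:per_class]
    return correct + wrong

def annealed_temperature(step: int, total_steps: int, start: float = 1.0, end: float = 0.6) -> float:
    """Linearly anneal the sampling temperature from `start` to `end` over training."""
    frac = min(step / max(total_steps, 1), 1.0)
    return start + (end - start) * frac
```

Ending at a lower temperature is one way to read "aligns exploration with evaluation conditions": late in training, rollouts look more like the near-greedy samples used at test time.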

Massive Synthetic Data Generation: The model is mid-trained on 10M CoT rollouts generated by DeepSeek-R1, filtered for correctness using math verifiers and GPT-4o-mini, and categorized by domain and difficulty to ensure broad generalization.

Ablation Study: Each phase of the pipeline shows clear gains. Notably, fine-tuning and RL each deliver ~5–7 point improvements after mid-training and DPO, showing the value of the full pipeline over isolated techniques.

2. Building Production-Ready AI Agents with Scalable Long-Term Memory

This paper proposes a memory-centric architecture that lets LLM agents maintain coherence across long conversations and multiple sessions, addressing the limitation of fixed context windows. Main highlights:

The solution introduces two systems: Mem0, a dense, language-based memory system, and Mem0g, an enhanced version with graph-based memory to model complex relationships. Both aim to efficiently extract, consolidate, and retrieve salient facts over time.

Mem0: Uses a two-stage architecture (extraction and update) to maintain salient conversational memories. It detects redundant or conflicting information and manages updates through tool calls, resulting in a lightweight, highly responsive memory store (about 7K tokens per conversation).
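The update stage can be sketched as a small state machine. This is a toy stand-in, not the Mem0 codebase: we assume four update operations (ADD, UPDATE, DELETE, NOOP) that an LLM would normally choose through a tool call. Here a hypothetical rule keyed on a shared `key` (for example, a user attribute) replaces that LLM decision, and `MemoryStore` and `decide_operation` are illustrative names.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

class Op(Enum):
    ADD = "ADD"
    UPDATE = "UPDATE"
    DELETE = "DELETE"
    NOOP = "NOOP"

@dataclass
class MemoryStore:
    # key -> fact text; a real system would index dense embeddings instead
    facts: dict = field(default_factory=dict)

    def decide_operation(self, key: str, value: Optional[str]) -> Op:
        """Stand-in for the LLM tool call that classifies a candidate fact."""
        if value is None:  # the conversation retracted this fact
            return Op.DELETE if key in self.facts else Op.NOOP
        if key not in self.facts:
            return Op.ADD
        if self.facts[key] == value:
            return Op.NOOP  # redundant: already stored
        return Op.UPDATE  # conflicting: the newer fact wins

    def apply(self, key: str, value: Optional[str]) -> Op:
        op = self.decide_operation(key, value)
        if op in (Op.ADD, Op.UPDATE):
            self.facts[key] = value
        elif op is Op.DELETE:
            del self.facts[key]
        return op
```

The extraction stage (turning raw dialogue into `(key, value)` candidates) is omitted; in a system like Mem0 it would typically be handled by an LLM.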

Mem0g: By structuring memory as a knowledge graph of entities and relationships, Mem0g improves performance in tasks needing temporal and relational reasoning (e.g., event ordering, preference tracking) while maintaining reasonable latency and memory cost (14K tokens per conversation).
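Relational memory of this kind can be approximated as timestamped (subject, relation, object) triples. The sketch below is a hypothetical illustration, not Mem0g's actual schema or storage backend: each edge carries a `timestamp`, which is enough to order events or return the most recent preference.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass(frozen=True)
class Edge:
    subject: str
    relation: str
    obj: str
    timestamp: int  # e.g. session index or Unix time

class GraphMemory:
    def __init__(self) -> None:
        self.edges: list[Edge] = []

    def add(self, subject: str, relation: str, obj: str, timestamp: int) -> None:
        self.edges.append(Edge(subject, relation, obj, timestamp))

    def events_in_order(self, subject: str) -> list[Edge]:
        """Temporal reasoning: all facts about `subject`, oldest first."""
        return sorted((e for e in self.edges if e.subject == subject), key=lambda e: e.timestamp)

    def latest(self, subject: str, relation: str) -> str | None:
        """Preference tracking: the most recent object for a (subject, relation) pair."""
        matches = [e for e in self.edges if e.subject == subject and e.relation == relation]
        return max(matches, key=lambda e: e.timestamp).obj if matches else None
```

A dense store would hold "likes tea" and "likes coffee" as two similar sentences; keeping the relation and timestamp explicit is what makes "what does Alice like now?" a direct lookup.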

Benchmarking on LOCOMO: Both systems were evaluated against six memory system baselines (e.g., A-Mem, OpenAI, Zep, LangMem, RAG). Mem0g achieves the best overall LLM-as-a-Judge (J) score of 68.44%, outperforming all RAG and memory baselines by 7–28% in J and reducing p95 latency by 91% over full-context methods.

Latency and Efficiency: Mem0 achieves the lowest search and total latencies (p95 = 1.44s), and Mem0g still outperforms other graph-based or RAG systems by large margins in speed and efficiency. This makes both well suited to real-time deployments.

Use-case strengths:

Mem0 excels at single-hop and multi-hop questions using dense, fast memories.

Mem0g outperforms all other systems on temporal and open-domain questions, thanks to its relational modeling.

Mem0 and Mem0g offer a scalable memory architecture for long-term LLM agents that improves factual recall, reasoning depth, and efficiency.


3. UniversalRAG

UniversalRAG is a framework that overcomes the limitations of existing RAG systems confined to single modalities or corpora. It supports retrieval across modalities (text, image, video) and at multiple granularities (e.g., paragraph vs. document, clip vs. video). Contributions from the paper:

Modality-aware routing: To counter modality bias in unified embedding spaces (where queries often retrieve same-modality results regardless of relevance), UniversalRAG introduces a router that dynamically selects the appropriate modality (e.g., image vs. text) for each query.

Granularity-aware retrieval: Each modality is broken into granularity levels (e.g., paragraphs vs. documents for text, clips vs. full-length videos). This allows queries to retrieve content that matches their complexity: factual queries use short segments, while queries requiring complex reasoning access long-form data.
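Routing over both modality and granularity amounts to choosing one of several corpora per query and searching only that one. In the sketch below, a hypothetical keyword heuristic stands in for the paper's GPT-4o or T5-Large router; the route labels, trigger words, and word-overlap scoring are our own assumptions.

```python
from enum import Enum

class Route(Enum):
    PARAGRAPH = "text/paragraph"
    DOCUMENT = "text/document"
    IMAGE = "image"
    CLIP = "video/clip"
    VIDEO = "video/full"

def route_query(query: str) -> Route:
    """Toy router: pick a modality first, then a granularity within it."""
    q = query.lower()
    if any(w in q for w in ("video", "scene", "clip", "footage")):
        # Questions about a whole video's storyline need the long-form corpus
        return Route.VIDEO if any(w in q for w in ("entire", "overall", "whole")) else Route.CLIP
    if any(w in q for w in ("look like", "color", "photo", "picture")):
        return Route.IMAGE
    # Multi-hop comparisons need full documents; simple facts need paragraphs
    if any(w in q for w in ("compare", "both", "and also", "which of")):
        return Route.DOCUMENT
    return Route.PARAGRAPH

def retrieve(query: str, corpora: dict, k: int = 1) -> list:
    """Search only the routed corpus, ranking items by naive word overlap."""
    words = set(query.lower().split())
    candidates = corpora.get(route_query(query), [])
    ranked = sorted(candidates, key=lambda doc: len(words & set(doc.lower().split())), reverse=True)
    return ranked[:k]
```

Because each corpus is searched separately, a text query can no longer be pulled toward text results simply because they share an embedding space; that is the modality bias the router is meant to remove.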

Flexible routing: It supports both training-free (zero-shot GPT-4o prompting) and trained (T5-Large) routers. Trained routers perform better on in-domain data, while GPT-4o generalizes better to out-of-domain tasks. An ensemble router combines both for robust performance.
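The summary does not say how the ensemble combines the two routers. One plausible rule, shown purely as an assumption, is to trust the trained router when its confidence clears a threshold and otherwise defer to the zero-shot router. `trained_router`, `zero_shot_router`, and the 0.7 threshold are hypothetical placeholders.

```python
from typing import Callable, Tuple

# A trained router returns (label, confidence); a zero-shot router returns a label only.
TrainedRouter = Callable[[str], Tuple[str, float]]
ZeroShotRouter = Callable[[str], str]

def ensemble_route(
    query: str,
    trained_router: TrainedRouter,
    zero_shot_router: ZeroShotRouter,
    threshold: float = 0.7,
) -> str:
    label, confidence = trained_router(query)
    if confidence >= threshold:
        return label  # confident: likely in-domain, where the trained router is stronger
    return zero_shot_router(query)  # uncertain: likely out-of-domain, defer to the generalist
```

This matches the trade-off described above: the cheap trained router handles familiar queries, and the expensive zero-shot call is only paid when the query looks unfamiliar.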

Performance: UniversalRAG outperforms modality-specific and unified RAG baselines across 8 benchmarks spanning text (e.g., MMLU, SQuAD), image (WebQA), and video (LVBench, VideoRAG). With T5-Large, it achieves the highest average score across modalities.

Case study: In WebQA, UniversalRAG correctly routes a visual query to the image corpus (retrieving an actual photo of the event), while TextRAG and VideoRAG fail. Similarly, on HotpotQA and LVBench, it chooses the right granularity, retrieving documents or short clips. Overall, this is a great paper showing the importance of considering modality and granularity in a RAG system.

4. DeepSeek-Prover-V2

DeepSeek-Prover-V2 is a 671B-parameter LLM that significantly advances formal theorem proving in Lean 4. The model is built through a novel cold-start training pipeline that combines informal chain-of-thought reasoning with formal subgoal decomposition, enhanced through reinforcement learning. It surpasses the prior state of the art on multiple theorem-proving benchmarks. Key highlights:

Cold-start data via recursive decomposition: The authors prompt DeepSeek-V3 to generate natural-language proof sketches, decompose them into subgoals, and formalize these steps in Lean with `sorry` placeholders. A 7B prover model then recursively fills in the subgoal proofs, enabling efficient construction of complete formal proofs and training data.

Curriculum learning + RL: A subgoal-based curriculum trains the model on increasingly complex problems. Reinforcement learning with a consistency reward enforces alignment between proof structure and CoT decomposition, improving performance on complex tasks.
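For readers unfamiliar with Lean's `sorry`, here is a toy illustration of the decomposition format (our own example, not one from DeepSeek-Prover-V2's data; it assumes Mathlib is available). The top-level proof is assembled from subgoals stated with `have`, each initially left as `sorry`. Lean accepts the skeleton with warnings, so the decomposition can be checked before any subproof exists.

```lean
import Mathlib

-- Sketch stage: the decomposition is fixed, the subgoal proofs are placeholders.
theorem sum_sq_nonneg_sketch (a b : ℝ) : 0 ≤ a ^ 2 + b ^ 2 := by
  have h1 : 0 ≤ a ^ 2 := by sorry
  have h2 : 0 ≤ b ^ 2 := by sorry
  exact add_nonneg h1 h2

-- Filled stage: each `sorry` is replaced by a proof found for that subgoal.
theorem sum_sq_nonneg_filled (a b : ℝ) : 0 ≤ a ^ 2 + b ^ 2 := by
  have h1 : 0 ≤ a ^ 2 := sq_nonneg a
  have h2 : 0 ≤ b ^ 2 := sq_nonneg b
  exact add_nonneg h1 h2
```

Each `have` is a self-contained lemma, which is what lets a smaller prover attack the subgoals independently.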

Dual proof generation modes: The model is trained in two modes, non-CoT (efficient, minimal proofs) and CoT (high-precision, interpretable). The CoT mode yields significantly better performance, particularly on hard problems.

Benchmark results:

MiniF2F-test: Achieves 88.9% pass@8192, outperforming prior models like Kimina-Prover and STP.

PutnamBench: Solves 49/658 problems, with an additional 13 solved uniquely by the 7B variant.

ProofNet-test: Reaches 37.1% pass@1024, showing strong generalization from high school to undergraduate-level problems.

ProverBench (new): Solves 6/15 formalized AIME 24&25 problems and achieves 59.1% overall pass rate on the full 325-problem set, narrowing the gap with DeepSeek-V3’s informal reasoning on the same tasks.
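Metrics such as pass@8192 count a problem as solved if at least one of k sampled proofs verifies. When n ≥ k samples are drawn and c of them pass, a common estimate is the unbiased estimator pass@k = 1 - C(n - c, k) / C(n, k), sketched below. Whether DeepSeek-Prover-V2 computes it this way or simply draws exactly k samples is not stated in the summary above.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, of which c passed verification."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    # Probability that a random size-k subset contains no passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)
```

Python's arbitrary-precision integers keep `comb` exact even for budgets in the thousands, and the ratio is at most 1, so the final division does not overflow.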

5. Kimi-Audio

Kimi-Audio is a new open-source audio foundation model built for universal audio understanding, generation, and speech conversation. The model architecture uses a hybrid of discrete semantic audio tokens and continuous Whisper-derived acoustic features.

It is initialized from a pre-trained LLM and trained on 13M+ hours of audio, spanning speech, sound, and music. It also supports a streaming detokenizer with chunk-wise decoding and a novel look-ahead mechanism for smoother audio generation. Extensive benchmarking shows that Kimi-Audio outperforms other audio LLMs across multiple modalities and tasks.

Key highlights:

Architecture: Kimi-Audio uses a 12.5Hz semantic tokenizer and an LLM with dual heads (text + audio), processing hybrid input (discrete + continuous). The audio detokenizer employs a flow-matching upsampler with a BigVGAN vocoder for real-time speech synthesis.
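Two small pieces of arithmetic make the audio pipeline concrete. At 12.5 Hz, each second of audio becomes 12.5 semantic tokens, so a 10-second utterance is 125 tokens. The chunk-wise streaming detokenizer can be pictured as below: each chunk is decoded together with a few look-ahead tokens from the next chunk to smooth the boundary. The chunk size and look-ahead length are hypothetical, and this is our reading of the idea rather than Kimi-Audio's code.

```python
from __future__ import annotations

import math

TOKEN_RATE_HZ = 12.5  # semantic tokens per second of audio, per the description above

def num_tokens(seconds: float) -> int:
    """Semantic tokens needed to cover `seconds` of audio (rounded up)."""
    return math.ceil(seconds * TOKEN_RATE_HZ)

def chunks_with_lookahead(tokens: list[int], chunk_size: int, lookahead: int):
    """Yield (chunk, context) pairs, where context = chunk plus up to `lookahead` future tokens.

    A detokenizer would synthesize audio from `context` but only emit the part
    belonging to `chunk`, so each boundary is decoded with a glimpse of what follows.
    """
    for start in range(0, len(tokens), chunk_size):
        chunk = tokens[start:start + chunk_size]
        context = tokens[start:start + chunk_size + lookahead]
        yield chunk, context
```

The look-ahead adds latency equal to `lookahead / 12.5` seconds per chunk (0.16 s for two tokens), which is the price paid for avoiding audible seams between chunks.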

Massive Training Corpus: Pretrained on 13M+ hours of multilingual, multimodal audio. A rigorous preprocessing pipeline adds speech enhancement, diarization, and transcription using Whisper and Paraformer-Zh. Fine-tuning uses 300K+ hours from 30+ open datasets.

Multitask Training: Training spans audio-only, text-only, ASR, TTS, and three audio-text interleaving strategies. Fine-tuning is instruction-based and uses both audio and text instructions, with the audio versions generated via zero-shot TTS.

Evaluation: On ASR (e.g., LibriSpeech test-clean: 1.28 WER), audio understanding (CochlScene: 80.99), and audio-to-text chat (OpenAudioBench avg: 69.8), Kimi-Audio sets new SOTA results, beating Qwen2.5-Omni and Baichuan-Audio across the board.
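For reference, word error rate is the word-level edit distance (substitutions + deletions + insertions) divided by the number of reference words. A LibriSpeech WER of 1.28 is presumably reported as a percentage, meaning roughly 1.3 errors per 100 reference words. A minimal implementation:

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate via Levenshtein distance over whitespace-split words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j] at the start of row i
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / len(ref)
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.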

6. MiMo-7B

Xiaomi released MiMo-7B, a language model designed explicitly for advanced reasoning in math and code. Highlights:

MiMo-7B: MiMo-7B narrows the capability gap with larger 32B-class models through careful pretraining and post-training. MiMo-7B-Base is trained from scratch on 25T tokens using a 3-stage data mixture skewed toward mathematics and code (70% in stage 2).

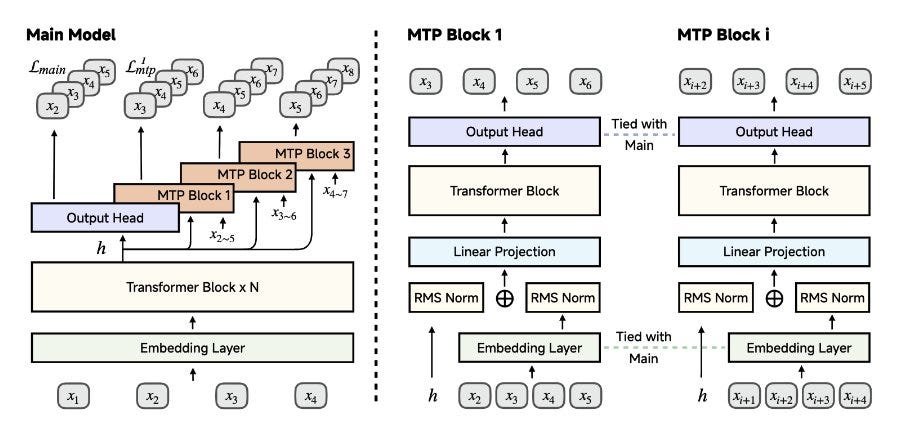

Pre-Training: The team improves HTML and PDF extraction to better preserve STEM data, leverages LLMs to generate diverse synthetic reasoning content, and adds a Multi-Token Prediction (MTP) objective that boosts both quality and inference speed.

Base Performance: MiMo-7B-Base outperforms other 7B–9B models like Qwen2.5, Gemma-2, and Llama-3.1 across BBH (+5 pts), AIME24 (+22.8 pts), and LiveCodeBench (+27.9 pts). On BBH and LiveCodeBench, it even beats larger models on reasoning-heavy tasks.

RL: MiMo-7B-RL is trained with a test difficulty–driven reward function and easy-data resampling to tackle sparse-reward issues and instabilities. In some cases, it surpasses o1-mini on math & code. RL from the SFT model reaches higher ceilings than RL-Zero from the base.
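A test-difficulty-driven reward for code can be sketched as partial credit weighted by how hard each test case is, so a policy that passes only some hard tests still receives a nonzero signal. The weighting below (difficulty = 1 minus a reference pass rate, floored at 0.1) and the 10% easy-pool resampling probability are assumptions for illustration; MiMo's exact formulation may differ.

```python
from __future__ import annotations

import random
from typing import Optional

def difficulty_weights(pass_rates: list[float]) -> list[float]:
    """Harder tests (lower reference pass rate) weigh more; the floor keeps easy tests nonzero."""
    return [max(1.0 - p, 0.1) for p in pass_rates]

def difficulty_weighted_reward(passed: list[bool], pass_rates: list[float]) -> float:
    """Weighted fraction of test cases passed, in [0, 1]."""
    weights = difficulty_weights(pass_rates)
    return sum(w for w, ok in zip(weights, passed) if ok) / sum(weights)

def sample_batch(hard_pool: list[str], easy_pool: list[str], size: int,
                 easy_prob: float = 0.1, rng: Optional[random.Random] = None) -> list[str]:
    """Easy-data resampling: mostly draw unsolved problems, occasionally re-mix solved easy ones."""
    rng = rng or random.Random()
    return [rng.choice(easy_pool if easy_pool and rng.random() < easy_prob else hard_pool)
            for _ in range(size)]
```

Compared with an all-or-nothing reward, partial credit turns a problem where the policy fails one hard test from a zero-reward sample into a useful gradient signal, which is the sparse-reward issue the paragraph above describes.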

Efficient infrastructure: A Seamless Rollout Engine accelerates RL by 2.29× and validation by 1.96× using continuous rollout, async reward computation, and early termination. MTP layers enable fast speculative decoding, with 90%+ acceptance rates in inference.

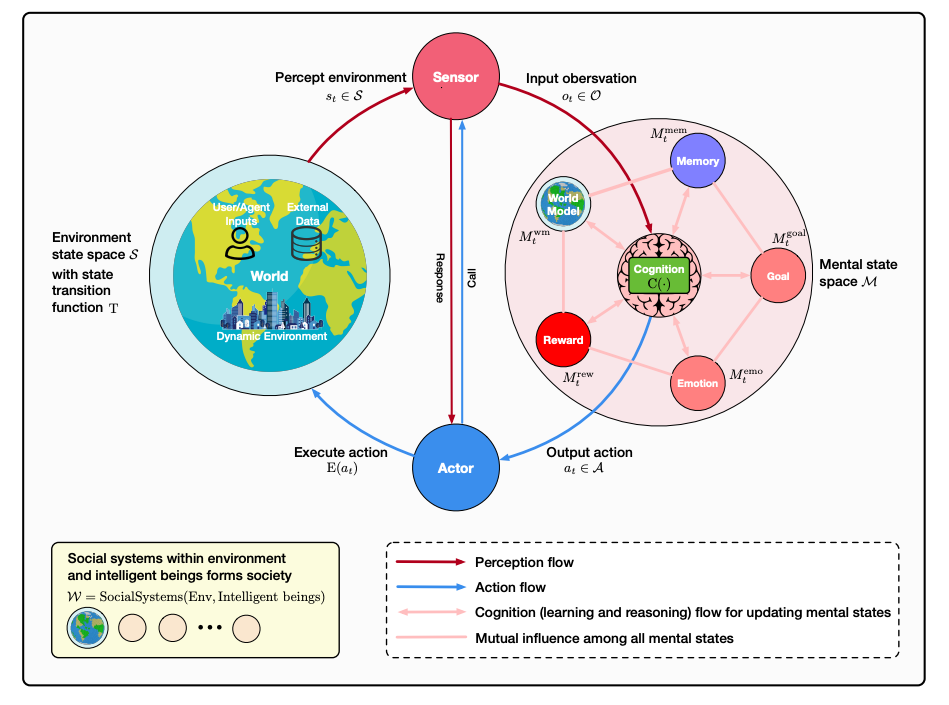
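The inference speedup from MTP layers comes from speculative decoding: a cheap head drafts several tokens, and the main model verifies them. Below is a toy greedy version in which `draft_next` stands in for the MTP layers and `target_next` for the main model; both are hypothetical callables. Under greedy decoding a draft is accepted only while it matches what the main model would emit, so the output equals plain greedy decoding.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]  # maps a prefix to its greedy next token

def speculative_step(prefix: List[int], draft_next: NextToken,
                     target_next: NextToken, k: int) -> Tuple[List[int], int]:
    """Draft k tokens, keep the longest run the target agrees with.

    Returns (tokens appended this step, number of drafted tokens accepted).
    """
    draft: List[int] = []
    ctx = list(prefix)
    for _ in range(k):
        tok = draft_next(ctx)
        draft.append(tok)
        ctx.append(tok)
    accepted: List[int] = []
    ctx = list(prefix)
    for tok in draft:
        expected = target_next(ctx)  # in practice all k checks share one batched forward pass
        if tok != expected:
            accepted.append(expected)  # the target's correction still yields one token
            return accepted, len(accepted) - 1
        accepted.append(tok)
        ctx.append(tok)
    return accepted, len(accepted)
```

The acceptance rate is accepted drafts divided by drafted tokens; at 90%+ acceptance, most verification passes emit several tokens instead of one.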

7. Advances and Challenges in Foundation Agents

A new survey frames intelligent agents with a modular, brain-inspired architecture that integrates ideas from cognitive science, neuroscience, and computational research. Key topics covered:

Human Brain and LLM Agents: Helps clarify what differentiates LLM agents from human cognition, and what inspiration we can draw from the way humans learn and operate.

Definitions: Provides a nice, detailed, and formal definition of what makes up an AI agent.

Reasoning: It has a detailed section on the core components of intelligent agents. There is a deep dive into reasoning, which is one of the key development areas of AI agents and what unlocks things like planning, multi-turn tooling, backtracking, and much more.

Memory: Agent memory is a challenging area of building agentic systems, but there is already a lot of good literature out there from which to get inspiration.

Action Systems: You can already build very complex agentic systems today, but the next frontier is agents that take actions and make decisions in the real world. We need better tooling, better training algorithms, and robust operation in different action spaces.

Self-Evolving Agents: For now, building effective agentic systems requires human effort and careful optimization tricks. However, one of the bigger opportunities in the field is to build AI that can itself build powerful and self-improving AI systems.

8. MAGI

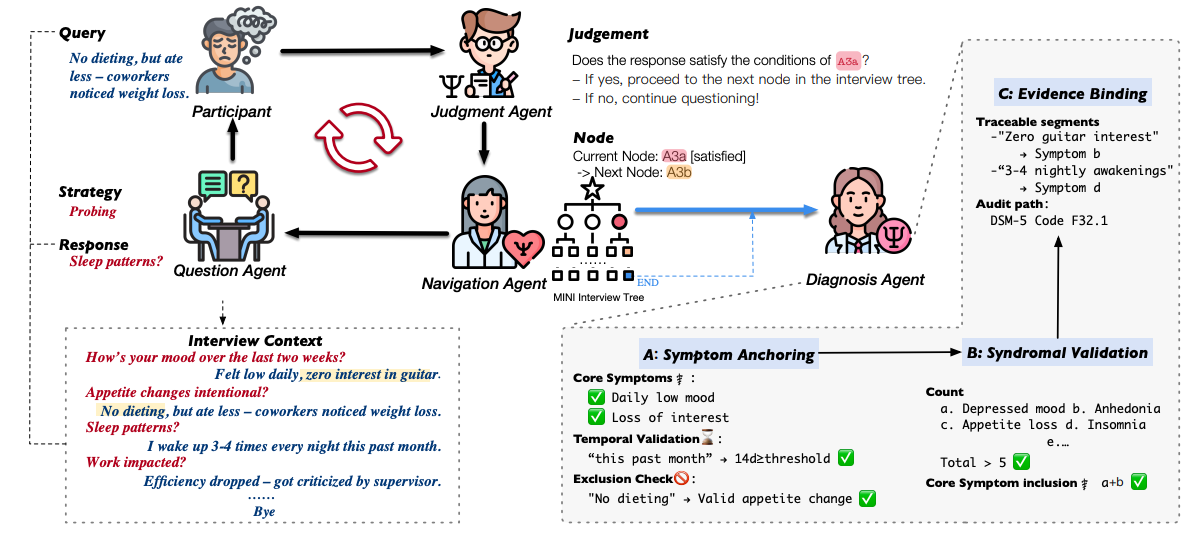

MAGI is a multi-agent system designed to automate structured psychiatric interviews by operationalizing the MINI (Mini International Neuropsychiatric Interview) protocol. It involves 4 specialized agents: navigation, question generation, judgment, and diagnosis. Other highlights:

Multi-Agent Clinical Workflow: MAGI is built with a navigation agent (interview flow control), a question agent (dynamic, empathetic probing), a judgment agent (response validation), and a diagnosis agent using Psychometric CoT to trace diagnoses explicitly to MINI/DSM-5 criteria.

Explainable Reasoning (PsyCoT): Instead of treating diagnoses as opaque outputs, PsyCoT decomposes psychiatric reasoning into symptom anchoring, syndromal validation, and evidence binding. This helps with auditability for each diagnostic conclusion. CoT put to great use.

Results: Evaluated on 1,002 real-world interviews, MAGI outperforms baselines (Direct prompting, Role-play, Knowledge-enhanced, and MINI-simulated LLMs) across relevance, accuracy, completeness, and guidance.

Strong Clinical Agreement: Diagnostic evaluations show PsyCoT consistently improves F1 scores, accuracy, and Cohen’s κ across disorders like depression, generalized anxiety, social anxiety, and suicide risk, reaching clinical-grade reliability (κ > 0.8) in high-risk tasks.
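Cohen's κ corrects raw agreement for agreement expected by chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected if both raters labeled independently at their own base rates. Values above 0.8 are conventionally read as near-perfect agreement. A small implementation:

```python
from collections import Counter
from typing import Hashable, Sequence

def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters independently pick the same label
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)
```

For example, two raters who agree on 3 of 4 binary labels (p_o = 0.75) with p_e = 0.5 get κ = 0.5, well below the 0.8 bar, which shows why κ is a stricter measure than raw accuracy.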

9. A Survey of Efficient LLM Inference Serving

This survey reviews recent advancements in optimizing LLM inference, addressing memory and computational bottlenecks. It covers instance-level techniques (like model placement and request scheduling), cluster-level strategies (like GPU deployment and load balancing), and emerging scenario-specific solutions, concluding with future research directions.

10. LLM for Engineering

This work finds that, when trained with RL, a 7B-parameter model outperforms both SoTA foundation models and human experts at high-powered rocketry design.
