🥇Top AI Papers of the Week

The Top AI Papers of the Week (April 7 - April 13)

1). The AI Scientist V2

The AI Scientist-v2 refines and extends its predecessor to achieve a new milestone: autonomously generating a workshop-accepted research manuscript. The system removes dependencies on human-authored code templates, incorporates agentic tree-search methods for deeper exploration, uses Vision-Language Models to refine figures, and produced a manuscript that passed peer review at a workshop.

Enhanced Autonomy – Eliminates reliance on human-crafted code templates, enabling out-of-the-box deployment across diverse ML domains.

Agentic Tree Search – Systematically searches and refines hypotheses through a branching exploration, managed by a new experiment manager agent.

VLM Feedback Loop – Integrates Vision-Language Models in the reviewing process to critique and improve experimental figures and paper aesthetics.

Workshop Acceptance – Generated three fully autonomous manuscripts for an ICLR workshop; one was accepted, showcasing the feasibility of AI-driven end-to-end scientific discovery.

2). Benchmarking Browsing Agents

OpenAI introduces BrowseComp, a benchmark with 1,266 questions that require AI agents to locate hard-to-find, entangled information on the web. Unlike saturated benchmarks like SimpleQA, BrowseComp demands persistent and creative search across numerous websites, offering a robust testbed for real-world web-browsing agents.

Key insights:

Extremely difficult questions: Each question was verified to be unsolvable by humans in under 10 minutes, and unsolvable by GPT-4o (with and without browsing), OpenAI o1, and earlier Deep Research models.

Human performance is low: Only 29.2% of problems were solved by humans (even with 2-hour limits). 70.8% were abandoned.

Model performance:

GPT-4o: 0.6%

GPT-4o + browsing: 1.9%

OpenAI o1: 9.9%

Deep Research: 51.5%

Test-time scaling matters: Accuracy improves with more browsing attempts. With 64 parallel samples and best-of-N aggregation, Deep Research significantly boosts its performance (15–25% gain over a single attempt).

Reasoning > browsing: OpenAI o1 (no browsing but better reasoning) outperforms GPT-4o with browsing (9.9% vs. 1.9%), showing that tool use alone isn't enough: strategic reasoning is key.

Calibration struggles: Models with browsing access often exhibit overconfidence in incorrect answers, revealing current limits in uncertainty estimation.

Dataset diversity: Includes a wide topical spread: TV/movies, science, art, sports, politics, geography, etc.
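The best-of-N aggregation mentioned under test-time scaling can be sketched roughly as follows. This is a minimal illustration, assuming each parallel attempt returns an answer plus a self-reported confidence score; the `(answer, confidence)` tuple format and both function names are hypothetical, and the majority vote is included only as a point of comparison.

```python
from collections import Counter

def best_of_n(samples):
    """Return the answer from the single most confident attempt.

    `samples` is a list of (answer, confidence) pairs; this format is
    an assumption made for illustration.
    """
    answer, _ = max(samples, key=lambda s: s[1])
    return answer

def majority_vote(samples):
    """Return the most frequent answer, ignoring confidence (for comparison)."""
    counts = Counter(answer for answer, _ in samples)
    return counts.most_common(1)[0][0]

attempts = [("Paris", 0.4), ("Lyon", 0.9), ("Paris", 0.5)]
print(best_of_n(attempts))      # most confident single attempt
print(majority_vote(attempts))  # most common answer
```

Note that best-of-N only helps when confidence is informative, which ties back to the calibration issue listed above.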

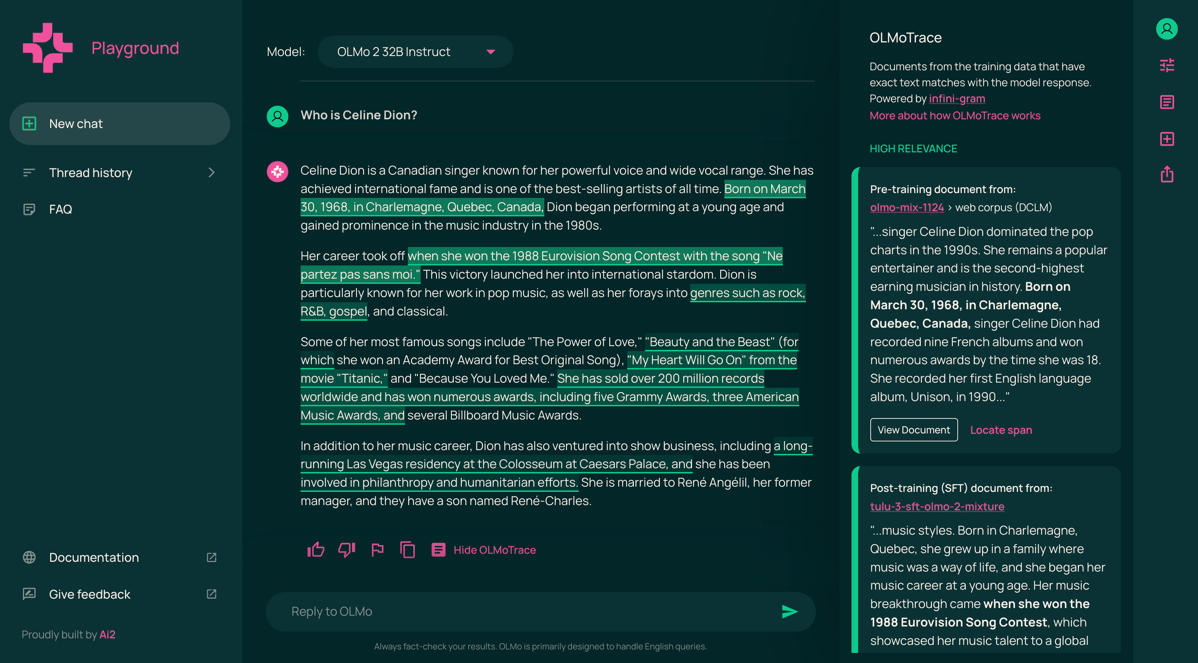

3). OLMOTrace

Allen Institute for AI & University of Washington present OLMOTRACE, a real-time system that traces LLM-generated text back to its verbatim sources in the original training data, even across multi-trillion-token corpora.

What it does: For a given LM output, OLMOTRACE highlights exact matches with training data segments and lets users inspect full documents for those matches. Think "reverse-engineering" a model’s response via lexical lookup.

How it works:

Built on an extended version of infini-gram, a text search engine indexing training data via suffix arrays stored on SSDs.

Computes maximal verbatim spans with a novel parallel algorithm that runs a single find query per suffix (instead of brute-force searching all spans).

Prioritizes long and unique spans using unigram probability, filters overlaps, then reranks documents with BM25.

Achieves sub-5 second latency (avg 4.46s on 458-token outputs) on 4.6T-token corpora using 64 vCPUs and high-IOPS SSDs.
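To make the "one query per suffix" idea concrete, here is a toy sketch. It is not the OLMoTrace implementation: the real system queries suffix arrays through infini-gram, whereas this version uses a naive linear scan over a tiny in-memory corpus. The function names and the `min_len` parameter are hypothetical. For each start position in the output, it finds the longest match in the corpus, then keeps only spans that are not contained in an earlier, longer span.

```python
def occurs(span, corpus):
    """Naive check: does the token list `span` appear contiguously in `corpus`?"""
    n = len(span)
    return any(corpus[j:j + n] == span for j in range(len(corpus) - n + 1))

def longest_match_len(tokens, start, corpus):
    """Length of the longest prefix of tokens[start:] found in the corpus."""
    length = 0
    while (start + length < len(tokens)
           and occurs(tokens[start:start + length + 1], corpus)):
        length += 1
    return length

def maximal_spans(tokens, corpus, min_len=2):
    """Return (start, end) spans that match verbatim and are not nested.

    Match ends never decrease as `start` grows, so a span is maximal
    exactly when its end exceeds every earlier end.
    """
    spans, prev_end = [], 0
    for start in range(len(tokens)):
        end = start + longest_match_len(tokens, start, corpus)
        if end > prev_end and end - start >= min_len:
            spans.append((start, end))
        prev_end = max(prev_end, end)
    return spans

corpus = "the cat sat on the mat".split()
output = "a cat sat on my mat".split()
print(maximal_spans(output, corpus))  # [(1, 4)] -> "cat sat on"
```

The naive scan is quadratic or worse; the point of the suffix-array index is to answer each longest-match lookup quickly on a multi-trillion-token corpus.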

Supported models: Works with OLMo models (e.g., OLMo-2-32B-Instruct) and their full pre/mid/post-training datasets, totaling 4.6T tokens.

Use cases:

Fact-checking: See if factual outputs match trustworthy sources

Creativity audit: Check if original outputs were memorized

Math trace: Confirm if equations are copied from the train data

Benchmarked:

Relevance of top results scored via GPT-4o LLM-as-a-Judge and humans. Final setting: avg score 1.82/3 for 1st doc, 1.50/3 for top-5.

BM25 reranking, span uniqueness filtering, and improved caching boosted both quality and speed.

Not RAG: It retrieves after generation, without changing output, unlike retrieval-augmented generation.

4). Concise Reasoning via RL

This paper proposes a training strategy that promotes concise and accurate reasoning in LLMs using RL. It challenges the belief that long responses improve accuracy, offering both theoretical and empirical evidence that conciseness often correlates with better performance.

Long ≠ better reasoning – The authors mathematically show that RL with PPO tends to generate unnecessarily long responses, especially when answers are wrong. Surprisingly, shorter outputs correlate more with correct answers, across reasoning and non-reasoning models.

Two-phase RL for reasoning + conciseness – They introduce a two-phase RL strategy: (1) train on hard problems to build reasoning ability (length may increase), then (2) fine-tune on occasionally solvable tasks to enforce concise CoT without hurting accuracy. The second phase alone cuts token usage by more than 50% with no loss in accuracy.

Works with tiny data – Their method succeeds with as few as 4–8 training examples, showing large gains in both math and STEM benchmarks (MATH, AIME24, MMLU-STEM). For instance, on MMLU-STEM, they improved accuracy by +12.5% while cutting response length by over 2×.

Better under low sampling – Post-trained models remain robust even when the temperature is reduced to 0. At temperature=0, the fine-tuned model outperformed the baseline by 10–30%, showing enhanced deterministic performance.

Practical implications – Besides improving model output, their method reduces latency, cost, and token usage, making LLMs more deployable. The authors also recommend setting λ < 1 during PPO to avoid instability and encourage correct response shaping.
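The λ in that recommendation presumably refers to the λ of generalized advantage estimation (GAE), which PPO implementations commonly use; that reading is an assumption here. The sketch below is a generic GAE computation, not the authors' code, and shows how λ < 1 changes the per-token advantages for a response that only receives a reward at its final token. The function name and the example values are illustrative.

```python
def gae_advantages(rewards, values, gamma=1.0, lam=0.95):
    """Generalized advantage estimation over one response.

    `values` must have len(rewards) + 1 entries; the last one is the
    bootstrap value after the final token (0.0 for a finished response).
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # One-step TD error at token t.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Exponentially weighted sum of future TD errors.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages

rewards = [0.0, 0.0, 1.0]      # reward only at the last token
values = [0.0, 0.0, 0.0, 0.0]  # untrained value head, for illustration

print(gae_advantages(rewards, values, lam=1.0))  # [1.0, 1.0, 1.0]
print(gae_advantages(rewards, values, lam=0.5))  # [0.25, 0.5, 1.0]
```

With λ = 1 every token receives the full terminal signal; with λ < 1 the signal decays for tokens further from the reward, so the effective per-token credit depends on response length.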

5). Rethinking Reflection in Pre-Training

Reflection — the ability of LLMs to identify and correct their own reasoning — has often been attributed to reinforcement learning or fine-tuning. This paper argues otherwise: reflection emerges during pre-training. The authors introduce adversarial reasoning tasks to show that self-reflection and correction capabilities steadily improve as compute increases, even in the absence of supervised post-training.

Key contributions:

Propose two kinds of reflection:

Situational-reflection: The model corrects errors in others’ reasoning.

Self-reflection: The model corrects its own prior reasoning.

Build six adversarial datasets (derived from GSM8K, TriviaQA, CruxEval, and BBH) to test reflection across math, coding, logic, and knowledge domains. On GSM8K-Platinum, explicit reflection rates grow from ~10% to >60% with increasing pre-training tokens.

Demonstrate that simple triggers like “Wait,” reliably induce reflection.

Evaluate 40 OLMo-2 and Qwen2.5 checkpoints, finding a strong correlation between pre-training compute and both accuracy and reflection rate.

Why it matters:

Reflection is a precursor to reasoning and can develop before RLHF or test-time decoding strategies.

Implication: We can instill advanced reasoning traits with better pre-training data and scale, rather than relying entirely on post-training tricks.

They also show a trade-off: more training compute reduces the need for expensive test-time compute like long CoT traces.

6). Efficient KG Reasoning for Small LLMs

LightPROF is a lightweight framework that enables small-scale language models to perform complex reasoning over knowledge graphs (KGs) using structured prompts. Key highlights:

Retrieve-Embed-Reason pipeline – LightPROF introduces a three-stage architecture:

Retrieve: Uses semantic-aware anchor entities and relation paths to stably extract compact reasoning graphs from large KGs.

Embed: A novel Knowledge Adapter encodes both the textual and structural information from the reasoning graph into LLM-friendly embeddings.

Reason: These embeddings are mapped into soft prompts, which are injected into chat-style hard prompts to guide LLM inference without updating the LLM itself.

Plug-and-play & parameter-efficient – LightPROF trains only the adapter and projection modules, allowing seamless integration with any open-source LLM (e.g., LLaMa2-7B, LLaMa3-8B) without expensive fine-tuning.

Outperforms larger models – Despite using small LLMs, LightPROF beats baselines like StructGPT (ChatGPT) and ToG (LLaMa2-70B) on KGQA tasks: 83.8% (vs. 72.6%) on WebQSP and 59.3% (vs. 57.6%) on CWQ.

Extreme efficiency – Compared to StructGPT, LightPROF reduces token input by 98% and runtime by 30%, while maintaining accuracy and stable output even in complex multi-hop questions.

Ablation insights – Removing structural signals or training steps severely degrades performance, confirming the critical role of the Knowledge Adapter and retrieval strategy.
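The Reason step of the Retrieve-Embed-Reason pipeline, where adapter outputs become soft prompts inside a hard prompt, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not LightPROF's code: embeddings are plain Python lists, the projection is a single linear map, and the names `project`, `build_inputs`, and `insert_at` are hypothetical. The LLM itself is assumed frozen, so only the projection weights (and the adapter producing `graph_embeddings`) would be trained.

```python
def project(vec, weight):
    """Linear projection; `weight` is a list of rows (out_dim x in_dim)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]

def build_inputs(graph_embeddings, projection, prompt_embeddings, insert_at):
    """Map graph embeddings into the LLM's embedding space and splice
    them into the hard prompt's token embeddings at `insert_at`."""
    soft_prompt = [project(g, projection) for g in graph_embeddings]
    return (prompt_embeddings[:insert_at]
            + soft_prompt
            + prompt_embeddings[insert_at:])

# Toy sizes: adapter outputs 2-d vectors, the LLM uses 3-d token embeddings.
graph_embeddings = [[1.0, 2.0]]
projection = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
prompt_embeddings = [[0.0, 0.0, 0.0], [9.0, 9.0, 9.0]]

inputs = build_inputs(graph_embeddings, projection, prompt_embeddings, insert_at=1)
print(inputs)  # [[0.0, 0.0, 0.0], [1.0, 2.0, 3.0], [9.0, 9.0, 9.0]]
```

Because the knowledge graph enters as a handful of embedding vectors rather than serialized text, the input stays short, which is consistent with the reported reduction in input tokens.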

7). Computer Agent Arena

Computer Agent Arena is a new open platform for benchmarking LLM and VLM-based agents on real-world computer-use tasks, like coding, editing, and web navigation, using a virtual desktop environment. Initial results show that OpenAI and Anthropic are leading with modest success rates, while the platform aims to grow through crowdsourced tasks, agent submissions, and open-sourcing of its infrastructure.

8). Agentic Knowledgeable Self-awareness

KnowSelf is a new framework that introduces agentic knowledgeable self-awareness, enabling LLM agents to dynamically decide when to reflect or seek knowledge based on situational complexity, mimicking human cognition. Using special tokens for "fast," "slow," and "knowledgeable" thinking, KnowSelf reduces inference costs and achieves state-of-the-art performance on ALFWorld and WebShop tasks with minimal external knowledge.

9). One-Minute Video Generation with Test-Time Training

One-Minute Video Generation with Test-Time Training introduces TTT layers, a novel sequence modeling component whose hidden states are themselves neural networks, updated via a self-supervised loss at test time. By integrating these layers into a pre-trained diffusion model, the authors enable single-shot generation of one-minute, multi-scene videos from storyboards, scoring 34 Elo points higher than strong baselines like Mamba 2 and DeltaNet in human evaluations.
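For a sense of scale, a 34-point Elo gap can be converted into an expected head-to-head preference rate. The sketch below assumes the standard Elo formula with a 400-point scale; whether the paper's evaluation uses exactly this parameterization is an assumption.

```python
def elo_expected_score(rating_gap):
    """Expected win rate for the higher-rated side under standard Elo."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400))

print(round(elo_expected_score(34), 3))  # 0.549
```

Under that assumption, a 34-point lead means human raters would prefer the TTT output in roughly 55% of pairwise comparisons.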

10). NoProp

NoProp is a novel gradient-free learning method where each neural network layer independently learns to denoise a noisy version of the target, inspired by diffusion and flow matching. Unlike backpropagation, it avoids hierarchical representation learning and achieves competitive performance and efficiency on image classification benchmarks like MNIST and CIFAR.