🥇Top AI Papers of the Week

The Top AI Papers of the Week (February 9-15)

1. ALMA

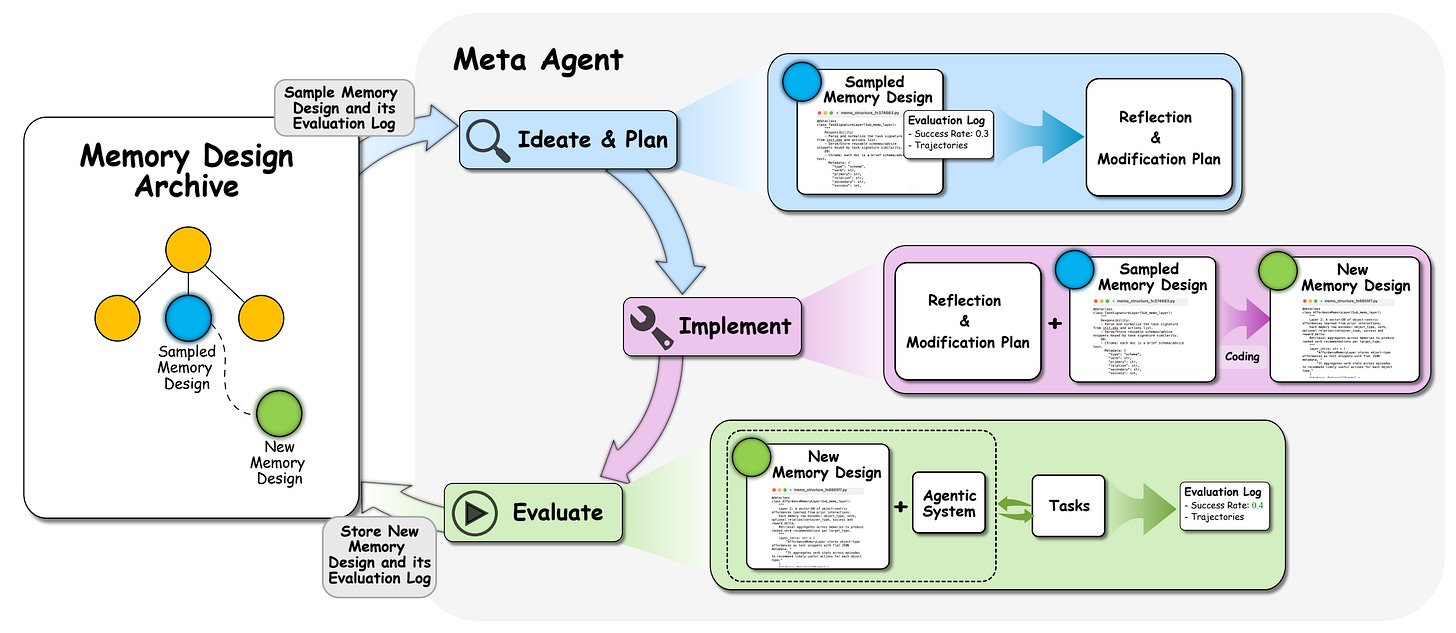

ALMA (Automated meta-Learning of Memory designs for Agentic systems) from Jeff Clune’s group introduces a Meta Agent that automatically discovers memory designs for agentic systems through open-ended exploration in code space. Instead of relying on hand-engineered memory modules, ALMA searches over database schemas, retrieval mechanisms, and update strategies expressed as executable code, consistently outperforming all human-designed memory baselines across four sequential decision-making benchmarks.

Open-ended code search: A Meta Agent samples previously explored memory designs from an archive, reflects on their code and evaluation logs, proposes new designs, and implements them as executable code. This gives ALMA the theoretical potential to discover arbitrary memory architectures, from graph databases to strategy libraries, unconstrained by human design intuitions.

Domain-adaptive memory discovery: ALMA discovers fundamentally different memory structures for different domains: affordance graphs for ALFWorld, task signature databases for TextWorld, strategy libraries with rule prediction for Baba Is AI, and risk-interaction schemas for MiniHack. This specialization emerges automatically from the search process.

Consistent gains over human baselines: Learned memory designs achieve 12.3% average success rate with GPT-5-nano (vs 8.6% for the best human baseline) and 53.9% with GPT-5-mini (vs 48.6%). The designs also scale better with more collected experience and transfer robustly across different foundation models.

Toward self-improving agentic systems: ALMA represents a step toward AI systems that learn to be continual learners. The progressive discovery process shows that moderate-performing designs serve as stepping stones toward optimal solutions, with the archive enabling cumulative innovation across exploration iterations.
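
To make the search loop described above concrete, here is a minimal Python sketch of an archive-based exploration loop. It is not ALMA's implementation: `propose` and `evaluate` are hypothetical stand-ins for the Meta Agent's LLM call and the benchmark run, the scores are random placeholders, and uniform sampling from the archive is an assumption made for brevity.

```python
import random
from dataclasses import dataclass


@dataclass
class MemoryDesign:
    code: str     # source of the memory module (a placeholder string here)
    score: float  # evaluation result for this design


def propose(parent: MemoryDesign, rng: random.Random) -> str:
    # Hypothetical stand-in for the Meta Agent reflecting on a parent
    # design and writing a new one.
    return parent.code + f"+edit{rng.randint(0, 999)}"


def evaluate(code: str, rng: random.Random) -> float:
    # Hypothetical stand-in for running an agent with this memory design.
    return rng.random()


def search(iterations: int, seed: int = 0) -> list:
    rng = random.Random(seed)
    archive = [MemoryDesign("baseline", evaluate("baseline", rng))]
    for _ in range(iterations):
        # Sample any archived design, not only the best one, so that
        # moderate performers can still act as stepping stones.
        parent = rng.choice(archive)
        child_code = propose(parent, rng)
        archive.append(MemoryDesign(child_code, evaluate(child_code, rng)))
    return archive


archive = search(iterations=20)
best = max(archive, key=lambda d: d.score)
```

Every design stays in the archive, including weak ones, which is what allows later iterations to build on them.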

2. LLaDA 2.1

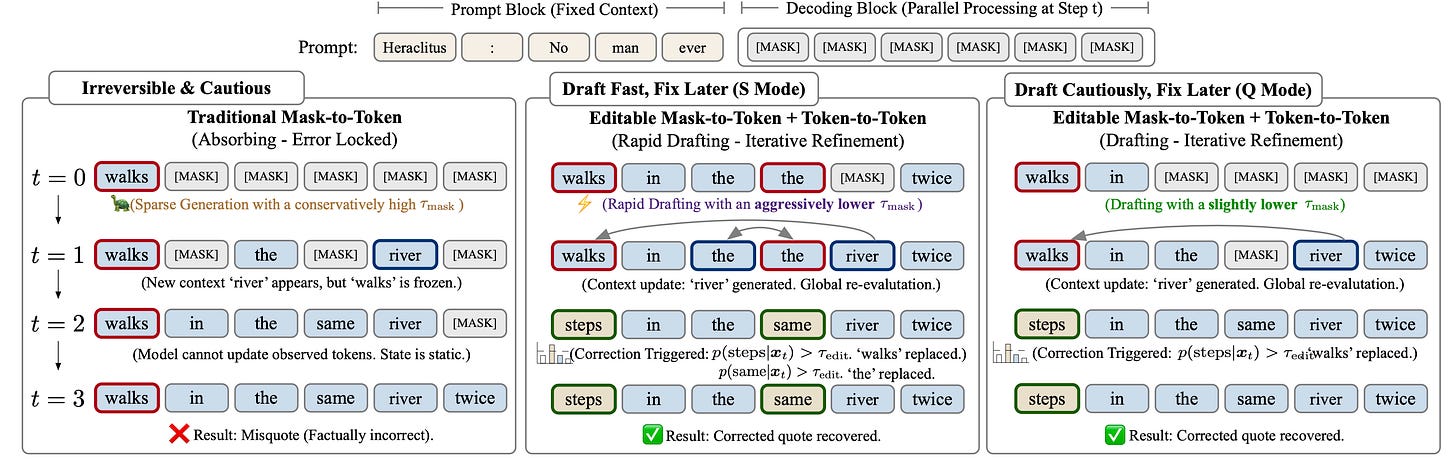

Ant Group releases LLaDA 2.1, a major upgrade to discrete diffusion language models that breaks the speed-quality trade-off through Token-to-Token (T2T) editing. By weaving token editing into the conventional Mask-to-Token decoding scheme, LLaDA 2.1 introduces two configurable modes: Speedy Mode for aggressive throughput and Quality Mode for benchmark-leading accuracy. The release also includes the first large-scale RL framework for diffusion LLMs.

Editable state evolution: Unlike standard diffusion models that only unmask tokens, LLaDA 2.1 can also edit already-generated tokens. This dual action space (unmasking + correction) lets the model aggressively draft with low-confidence thresholds and then refine errors in subsequent passes, fundamentally changing the speed-quality trade-off.

Two operating modes: Speedy Mode lowers the mask-to-token threshold for maximum throughput, relying on T2T passes to fix errors. Quality Mode uses conservative thresholds for superior benchmark scores. This gives practitioners a configurable knob between speed and accuracy without swapping models.

Extreme decoding speed: LLaDA 2.1-Flash (100B) hits 892 tokens per second on HumanEval+ and 801 TPS on BigCodeBench. The Mini variant (16B) reaches a peak of 1,587 TPS. These speeds dramatically outpace autoregressive models of comparable quality.

First RL for diffusion LLMs: The paper introduces EBPO (Evidence-Based Policy Optimization), an RL framework that uses block-causal masking and parallel likelihood estimation to enable stable policy optimization at scale for diffusion models. RL training sharpens reasoning and instruction-following across 33 benchmarks.
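
The interaction between the two thresholds can be illustrated with a toy decoding pass. This is a sketch under stated assumptions, not LLaDA 2.1's decoder: the `(token, confidence)` proposals would come from a model forward pass, and the threshold values and the function name `decode_step` are hypothetical.

```python
MASK = "<mask>"


def decode_step(tokens, proposals, unmask_threshold, edit_threshold):
    """One pass that fills masks (mask-to-token) and edits tokens (token-to-token).

    proposals[i] is a (token, confidence) pair for position i.
    """
    out = list(tokens)
    for i, (candidate, conf) in enumerate(proposals):
        if out[i] == MASK:
            # Mask-to-token: commit the candidate if confident enough.
            if conf >= unmask_threshold:
                out[i] = candidate
        elif candidate != out[i] and conf >= edit_threshold:
            # Token-to-token: overwrite an already committed token.
            out[i] = candidate
    return out


tokens = [MASK, "cat", MASK]
proposals = [("the", 0.9), ("dog", 0.95), ("sat", 0.4)]

# A low unmask threshold drafts aggressively (a "speedy" setting).
speedy = decode_step(tokens, proposals, unmask_threshold=0.3, edit_threshold=0.9)
# A high unmask threshold leaves uncertain positions masked (a "quality" setting).
quality = decode_step(tokens, proposals, unmask_threshold=0.8, edit_threshold=0.9)

print(speedy)   # ['the', 'dog', 'sat']
print(quality)  # ['the', 'dog', '<mask>']
```

In both settings the committed token "cat" is corrected to "dog", which is the editing step that a pure unmasking decoder lacks.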

3. SkillRL

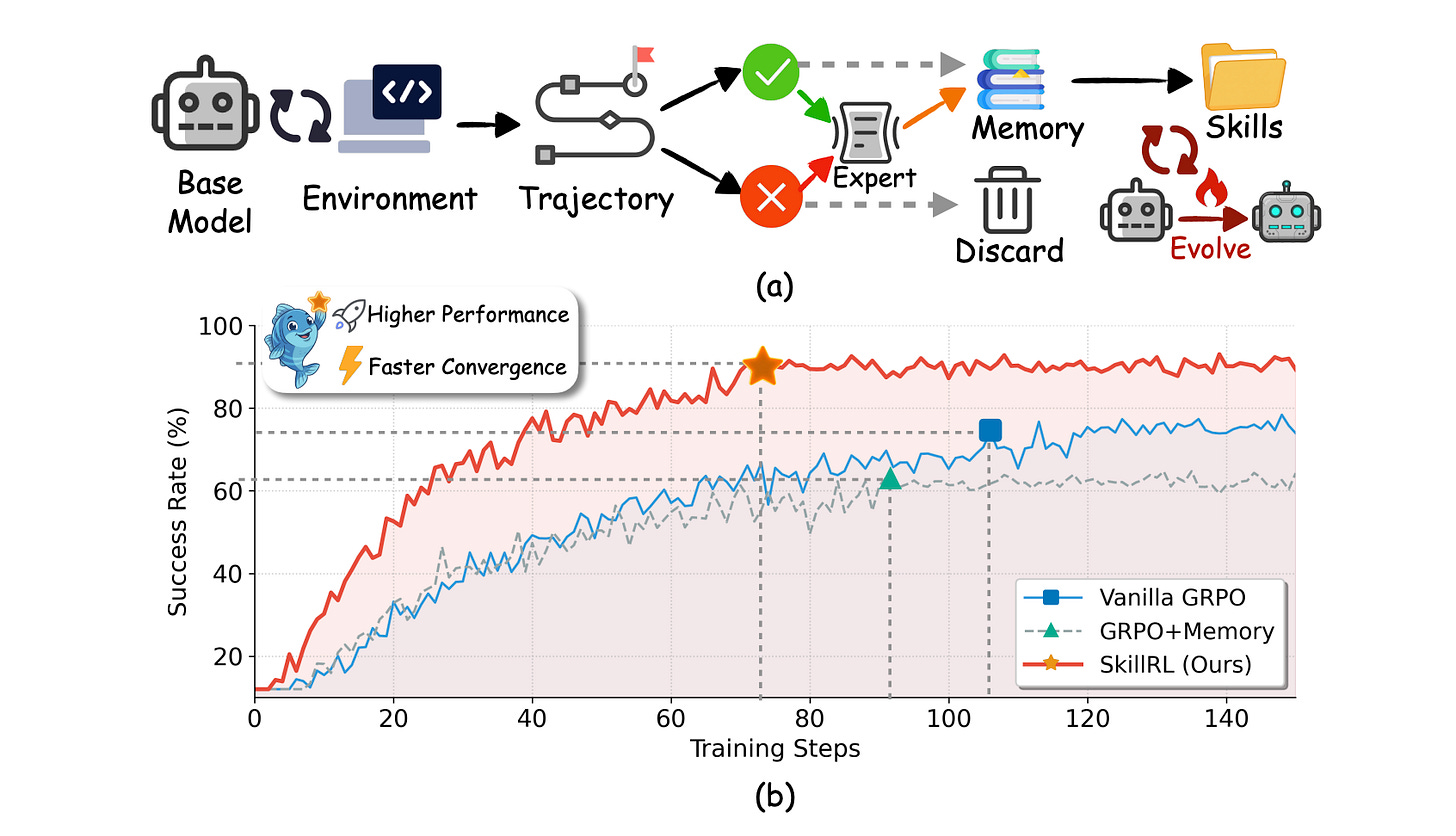

SkillRL introduces a recursive skill-augmented RL framework that bridges the gap between raw experience and policy improvement through automatic skill discovery. Instead of storing noisy raw trajectories, SkillRL distills experience into reusable high-level behavioral patterns and evolves them alongside the agent policy during training.

Hierarchical skill library (SkillBank): An experience-based distillation mechanism extracts reusable behavioral patterns from raw trajectories and organizes them into a hierarchical skill library. This dramatically reduces the token footprint while preserving the reasoning utility needed for complex multi-step tasks.

Adaptive skill retrieval: A dual retrieval strategy combines general heuristics with task-specific skills, selecting the most relevant behavioral patterns based on the current task context. This enables the agent to leverage accumulated knowledge without being overwhelmed by irrelevant experience.

Recursive co-evolution: The skill library and agent policy evolve together during RL training. As the agent encounters harder tasks, new skills are extracted, and existing ones are refined, creating a virtuous cycle where better skills enable better performance, which generates better training data for skill extraction.

Strong empirical results: SkillRL achieves state-of-the-art performance with an 89.9% success rate on ALFWorld, 72.7% on WebShop, and an average of 47.1% on search-augmented QA tasks, outperforming strong baselines by more than 15.3% while remaining robust as task complexity increases.
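
The dual retrieval idea can be sketched in a few lines. This is an illustrative assumption rather than SkillRL's actual retriever: general heuristics are always included, and task-specific skills are ranked by a simple token-overlap score (`jaccard`), which stands in for whatever similarity measure the paper uses. The skill strings are invented for the example.

```python
def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity between two strings (a hypothetical proxy)."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def retrieve(task, general_skills, task_skills, k=2):
    """Return all general heuristics plus the k most relevant task skills."""
    ranked = sorted(task_skills, key=lambda s: jaccard(task, s), reverse=True)
    return general_skills + ranked[:k]


general = ["verify the goal before acting"]
specific = [
    "heat food in the microwave",
    "find a mug in the kitchen cabinet",
    "compare prices before buying shoes",
]

selected = retrieve("heat the mug in the microwave", general, specific, k=2)
for skill in selected:
    print(skill)
```

The unrelated shopping skill is dropped, so the prompt carries only the heuristics and the two skills that overlap with the task.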

4. InftyThink+

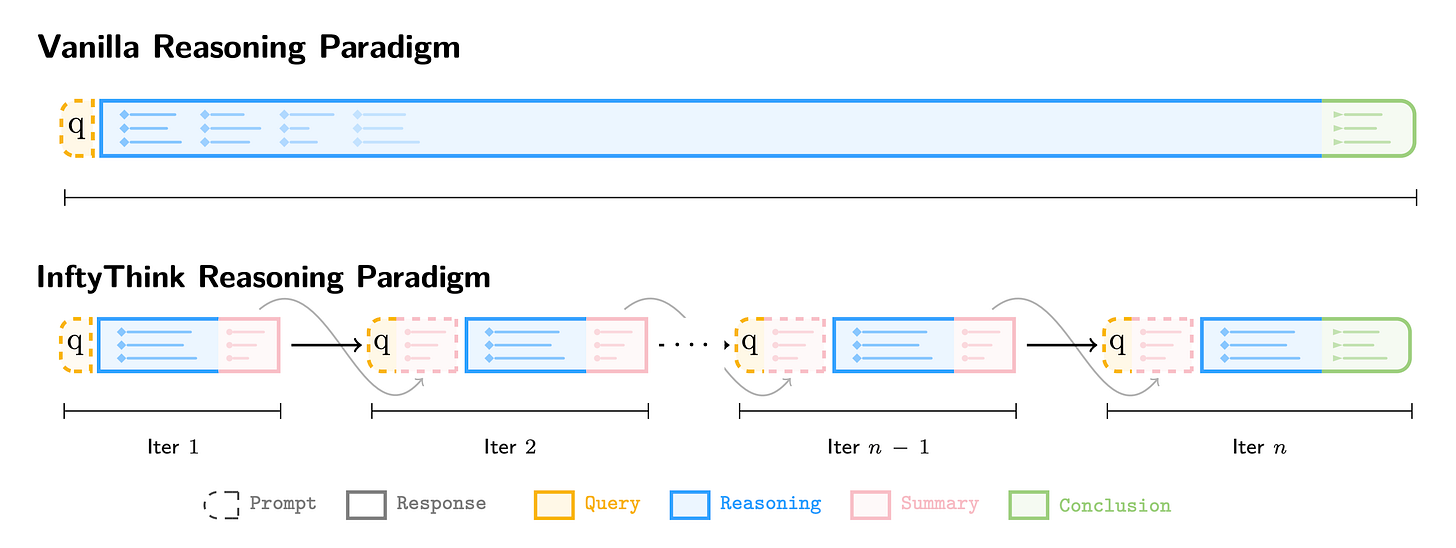

InftyThink+ is an end-to-end RL framework for infinite-horizon reasoning that optimizes the entire iterative reasoning trajectory. Standard long chain-of-thought suffers from quadratic cost, context length limits, and lost-in-the-middle degradation. InftyThink+ addresses all three by letting models autonomously decide when to summarize, what to preserve, and how to resume, trained through trajectory-level reinforcement learning.

Iterative reasoning with learned boundaries: Instead of generating one continuous chain-of-thought, InftyThink+ decomposes reasoning into multiple iterations connected by self-generated summaries. The model learns to control iteration boundaries, deciding when to compress and continue rather than following fixed heuristics or chunk sizes.

Two-stage training recipe: A supervised cold-start teaches the InftyThink format (special tokens for summary and history), then trajectory-level GRPO optimizes the full multi-iteration rollout. Advantages are shared across all iterations within a trajectory, so early high-quality summaries that enable correct later reasoning receive a positive gradient signal.

21-point accuracy gain on AIME24: On DeepSeek-R1-Distill-Qwen-1.5B, InftyThink+ with RL improves accuracy from 29.5% to 50.9% on AIME24, a gain of roughly 21 percentage points that substantially outperforms vanilla long-CoT RL (38.8%). Results generalize to out-of-distribution benchmarks, including GPQA Diamond and AIME25.

Faster inference, faster training: By bounding context length per iteration, InftyThink+ reduces inference latency compared to vanilla reasoning while achieving higher accuracy. Adding an efficiency reward further cuts token usage by 50% with only a modest accuracy trade-off, demonstrating a controllable speed-accuracy knob.
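
The trajectory-level credit assignment in the training recipe can be sketched as follows. This is a minimal illustration, not the paper's code: it assumes a GRPO-style group-normalized advantage (reward minus group mean, divided by group standard deviation) and copies that single value to every iteration of a trajectory. The function name, the use of the population standard deviation, and the `eps` constant are assumptions.

```python
from statistics import mean, pstdev


def trajectory_advantages(rewards, iterations_per_trajectory, eps=1e-6):
    """Group-normalized advantage, shared by all iterations of a trajectory.

    rewards[i] is the final reward of trajectory i in the sampled group;
    iterations_per_trajectory[i] is how many reasoning iterations it used.
    """
    mu, sigma = mean(rewards), pstdev(rewards)
    per_trajectory = [(r - mu) / (sigma + eps) for r in rewards]
    # Every iteration (including early summaries) gets the same advantage
    # as the trajectory it belongs to.
    return [[a] * n for a, n in zip(per_trajectory, iterations_per_trajectory)]


rewards = [1.0, 0.0, 1.0, 0.0]  # e.g. final answer correct or not
iterations = [3, 2, 1, 4]       # reasoning iterations per rollout
advantages = trajectory_advantages(rewards, iterations)
```

Here the first rollout's three iterations all receive the same positive advantage, so an early summary is rewarded when it leads to a correct final answer.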

5. Agyn

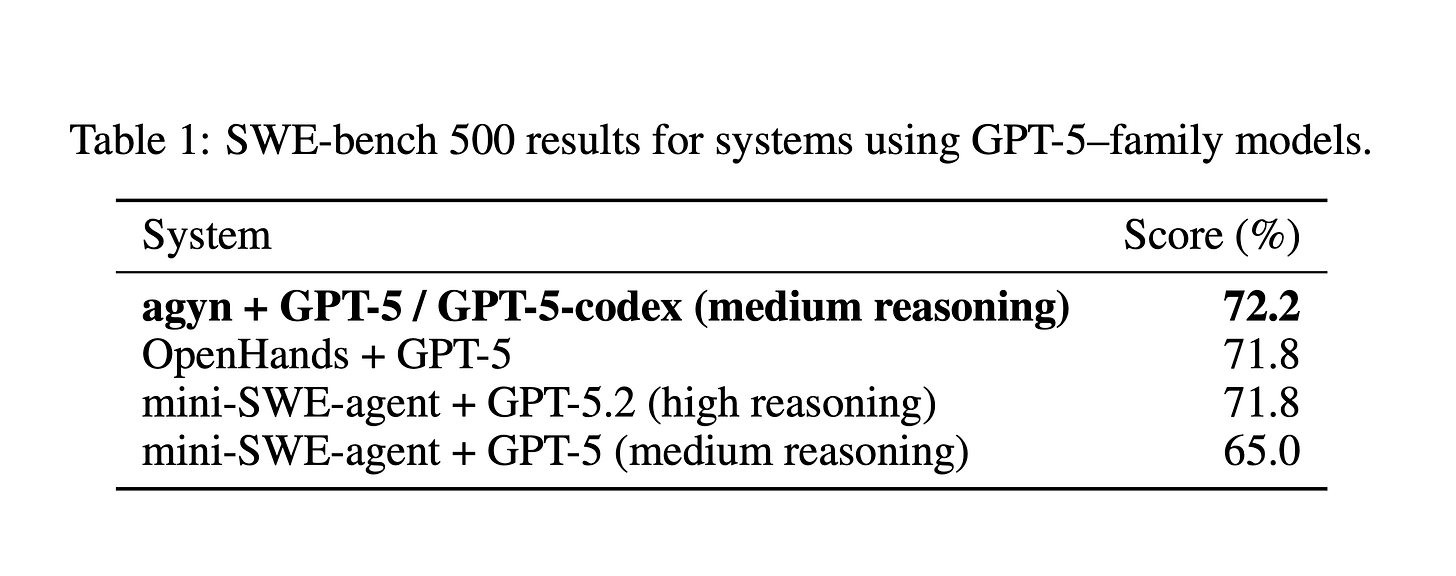

Agyn is a fully automated multi-agent system that models software engineering as an organizational process rather than a monolithic code generation task. Built on an open-source platform for configuring agent teams, the system assigns specialized agents to distinct roles and follows a structured development methodology, all without human intervention. Notably, Agyn was designed for real production use and was not tuned for SWE-bench.

Team-based architecture: Four specialized agents (manager, researcher, engineer, reviewer) operate with distinct responsibilities, tools, and model configurations. The manager coordinates using a high-level methodology inspired by real development practice, while the engineer and reviewer work through GitHub-native pull requests and inline code reviews.

Role-specific model routing: Reasoning-heavy agents like the manager and researcher use larger general-purpose models, while implementation agents use smaller, code-specialized models. This mirrors real team structure, where different roles need different capabilities, and reduces overall cost without sacrificing quality.

Dynamic workflow, not a fixed pipeline: Unlike prior multi-agent SWE systems that encode a predetermined number of stages, Agyn’s coordination evolves dynamically. The manager decides when additional research, specification refinement, implementation, or review cycles are needed based on intermediate outcomes, enabling flexible iteration.

Strong benchmark performance without tuning: Agyn resolves 72.2% of tasks on SWE-bench 500, outperforming single-agent baselines by 7.4% under comparable model configurations. The key insight is that organizational design and agent infrastructure may matter as much as model improvements for autonomous software engineering.
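
The difference between a fixed pipeline and a dynamic workflow can be shown with a toy manager loop. This sketch is purely illustrative and makes no claim about Agyn's code: the state fields, step names, and the `run` helper are hypothetical, and review outcomes are supplied as a list instead of coming from a reviewer agent.

```python
def next_step(state):
    """Manager policy: choose the next step from intermediate outcomes."""
    if not state["researched"]:
        return "research"
    if not state["implemented"]:
        return "implement"
    if not state["approved"]:
        return "review"
    return "done"


def run(review_outcomes):
    """Simulate a task; review_outcomes lists successive reviewer verdicts."""
    state = {"researched": False, "implemented": False, "approved": False}
    reviews = iter(review_outcomes)
    log = []
    while (step := next_step(state)) != "done":
        log.append(step)
        if step == "research":
            state["researched"] = True
        elif step == "implement":
            state["implemented"] = True
        else:
            # Approve by default once the supplied verdicts run out.
            state["approved"] = next(reviews, True)
            if not state["approved"]:
                # A rejected review sends the work back to the engineer.
                state["implemented"] = False
    return log


print(run([False, True]))
# ['research', 'implement', 'review', 'implement', 'review']
```

The number of implement and review cycles is not fixed in advance; it depends on how many times the review step rejects the change.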

6. EchoJEPA

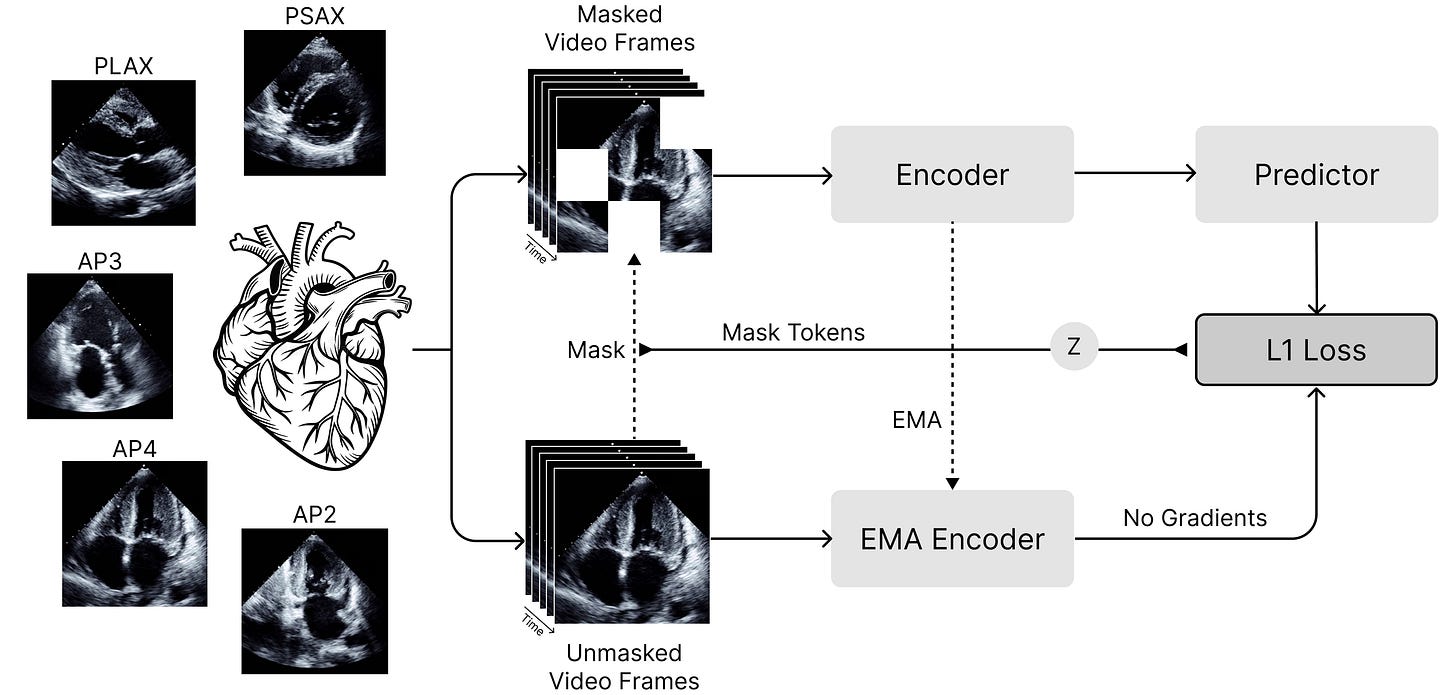

EchoJEPA is a latent predictive foundation model for echocardiography trained on 18 million echocardiograms from 300,000 patients. By learning to predict in latent space rather than pixel space, the model separates clinically meaningful anatomical signals from ultrasound noise and artifacts, producing representations that dramatically outperform existing approaches on cardiac assessment tasks.

Massive scale and latent prediction: Trained on 18 million echocardiograms using a JEPA-style objective that predicts masked spatiotemporal regions in latent space. This approach learns to ignore speckle noise and acoustic artifacts that plague pixel-level methods, producing representations focused on anatomically meaningful features.

Strong improvements on clinical tasks: EchoJEPA improves left ventricular ejection fraction estimation by approximately 20% and right ventricular systolic pressure estimation by approximately 17% over leading baselines. For view classification, it reaches 79% accuracy using only 1% of labeled data, while the best baseline achieves just 42% with the full labeled dataset.

Exceptional robustness: Under acoustic perturbations that degrade competitor models by 17%, EchoJEPA degrades only 2%. This robustness extends to population shift: zero-shot performance on pediatric patients exceeds fully fine-tuned baseline models, demonstrating genuine generalization rather than memorization.

Clinical foundation model potential: The combination of scale, label efficiency, and robustness across patient populations positions EchoJEPA as a practical foundation for clinical echocardiography applications where labeled data is scarce and acoustic conditions vary widely.
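
For readers unfamiliar with JEPA-style training, the sketch below shows the two pieces that distinguish it from pixel reconstruction: a loss computed between predicted and target latent vectors, and a target encoder updated as a moving average of the online encoder. These are assumptions drawn from the general JEPA recipe, not details confirmed for EchoJEPA; the L1 distance, the momentum value, and both function names are hypothetical.

```python
def l1_latent_loss(predicted, target):
    """Mean absolute error between predicted and target latent vectors.

    Both arguments are lists of equal-length vectors, one per masked region.
    """
    diffs = [
        abs(p - t)
        for pred_vec, tgt_vec in zip(predicted, target)
        for p, t in zip(pred_vec, tgt_vec)
    ]
    return sum(diffs) / len(diffs)


def ema_update(target_weights, online_weights, momentum=0.99):
    """Move target-encoder weights slowly toward the online encoder."""
    return [
        momentum * t + (1.0 - momentum) * o
        for t, o in zip(target_weights, online_weights)
    ]


# Latents for one masked region: the loss never touches raw pixels.
loss = l1_latent_loss(predicted=[[1.0, 2.0]], target=[[1.0, 4.0]])
new_target = ema_update([1.0, 0.0], [0.0, 0.0], momentum=0.9)
```

Because the loss compares embeddings, pixel-level detail that the encoder does not represent, such as speckle, does not have to be reconstructed.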

7. AdaptEvolve

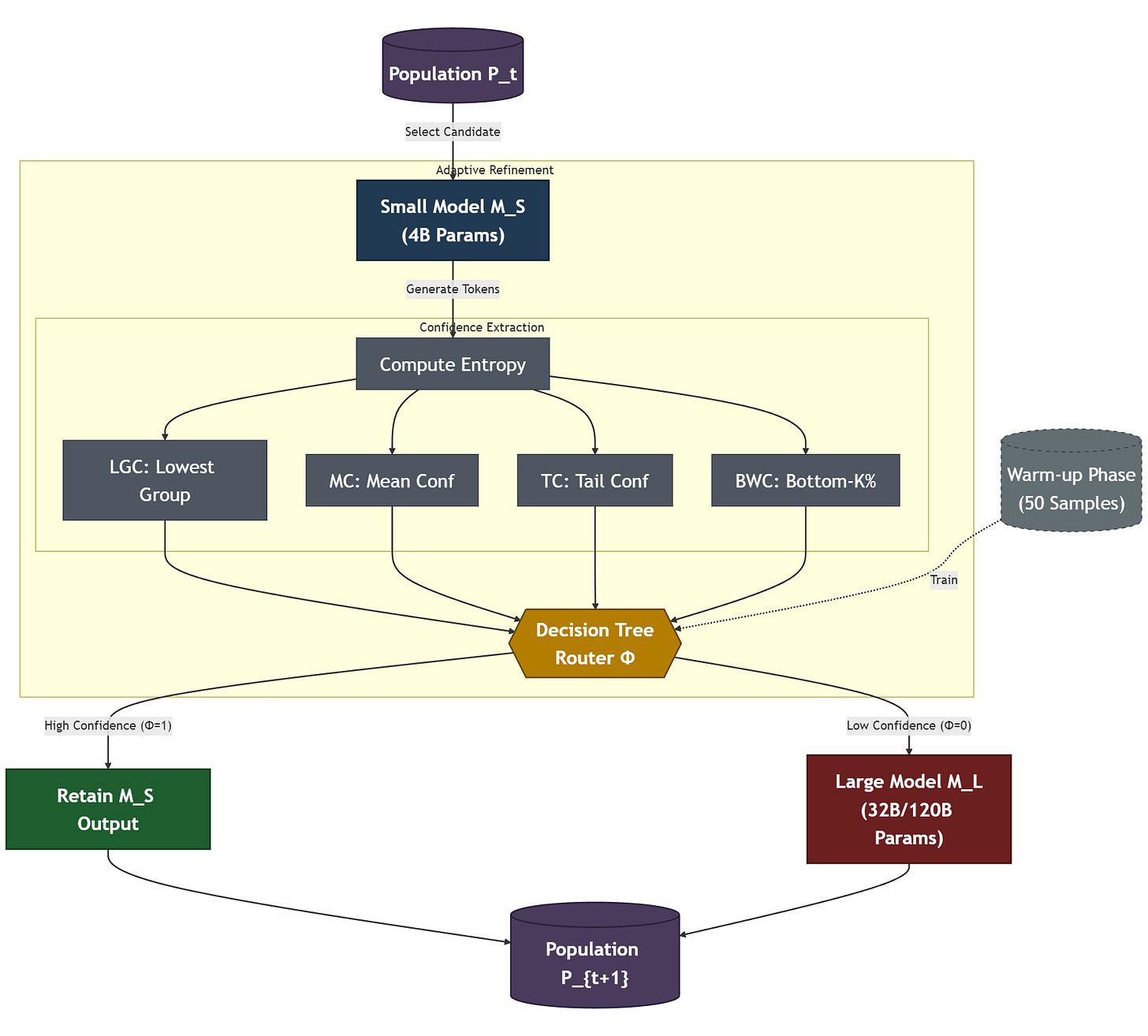

AdaptEvolve tackles a key efficiency bottleneck in evolutionary agentic systems: the repeated invocation of large LLMs during iterative refinement loops. The method uses intrinsic generation confidence to dynamically select which model to invoke at each step, routing easy sub-problems to smaller models and reserving expensive frontier models for genuinely hard decisions.

Confidence-driven model routing: Instead of static heuristics or external controllers, AdaptEvolve monitors real-time generation confidence scores to estimate task solvability at each evolutionary step. When the smaller model is confident, it proceeds without escalation; when uncertainty is high, the system routes to a larger, more capable model.

Favorable cost-accuracy trade-off: Across benchmarks, AdaptEvolve cuts inference costs by approximately 38% while retaining roughly 97.5% of the upper-bound accuracy achieved by always using the largest model. This creates a Pareto-optimal frontier that static single-model or naive cascade approaches cannot match.

Practical for deployed agent loops: Evolutionary and iterative refinement workflows often require dozens of LLM calls per task. Reducing per-call cost by nearly 40% without meaningful accuracy loss makes these workflows viable for production deployment, where cost compounds rapidly.

Generalizable routing signal: The confidence-based selection mechanism is model-agnostic and does not require task-specific tuning, making it applicable across different evolutionary agent architectures and domain-specific refinement pipelines.
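
A confidence-gated cascade of this kind can be sketched as follows. This is an illustration under stated assumptions, not AdaptEvolve's implementation: confidence is taken to be the geometric mean of token probabilities, the threshold of 0.8 is arbitrary, and the model callables are stubs that return a `(text, token_logprobs)` pair.

```python
import math


def confidence(token_logprobs):
    """Geometric-mean token probability (one possible confidence signal)."""
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def route(small_model, large_model, prompt, threshold=0.8):
    """Use the small model unless its own confidence falls below threshold."""
    text, logprobs = small_model(prompt)
    if confidence(logprobs) >= threshold:
        return text, "small"
    # Escalate only when the small model is uncertain.
    text, _ = large_model(prompt)
    return text, "large"


# Stub models for illustration only.
def confident_small(prompt):
    return "small draft", [-0.05, -0.10]


def unsure_small(prompt):
    return "small guess", [-1.0, -2.0]


def large(prompt):
    return "large answer", [-0.01]


easy = route(confident_small, large, "refine candidate A")
hard = route(unsure_small, large, "refine candidate B")
print(easy)  # ('small draft', 'small')
print(hard)  # ('large answer', 'large')
```

The large model is called only on the second prompt, which is where the cost saving comes from when most steps are easy.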

8. Gaia2

Meta FAIR introduces Gaia2, a next-generation agent benchmark where environments change independently of agent actions, forcing agents to handle temporal pressure, uncertainty, and multi-agent coordination. GPT-5 leads at 42% pass@1 but struggles with time-constrained tasks, while Kimi-K2 leads open-source models at 21%. Built on the open-source Agents Research Environments (ARE) platform with action-level verifiers, Gaia2 represents a paradigm shift from static benchmarks to dynamic evaluation of agentic capabilities.

9. AgentArk

AgentArk distills multi-agent debate dynamics into a single LLM, transferring the reasoning and self-correction abilities of multi-agent systems into one model at training time. Three hierarchical distillation strategies (reasoning-enhanced SFT, trajectory-based augmentation, and process-aware distillation with a process reward model) yield an average 4.8% improvement over single-agent baselines across math and reasoning benchmarks, approaching full multi-agent performance at a fraction of the inference cost. Cross-family distillation (e.g., Qwen3-32B to LLaMA-3-8B) produces the largest gains, suggesting heterogeneous architectures benefit most from transferred reasoning signals.

10. AgentSkiller

AgentSkiller scales generalist agent intelligence through semantically integrated cross-domain data synthesis, producing 11K high-quality synthetic trajectories across diverse tool-use scenarios. The resulting 14B model beats GPT-o3 on tau2-bench (79.1% vs 68.4%), and even the 4B variant outperforms 70B and 235B models, demonstrating that data quality and semantic integration matter more than parameter count for building strong tool-use agents.