LLMs Get Lost in Multi-turn Conversation

Main reasons LLMs get "lost" in multi-turn conversations and mitigation strategies

The cat is out of the bag: LLMs get lost in multi-turn conversations.

Pay attention, AI developers.

This is one of the most common issues when building with LLMs today.

Glad there is now a paper to share insights.

Here are my notes from the paper:

What the Paper Presents

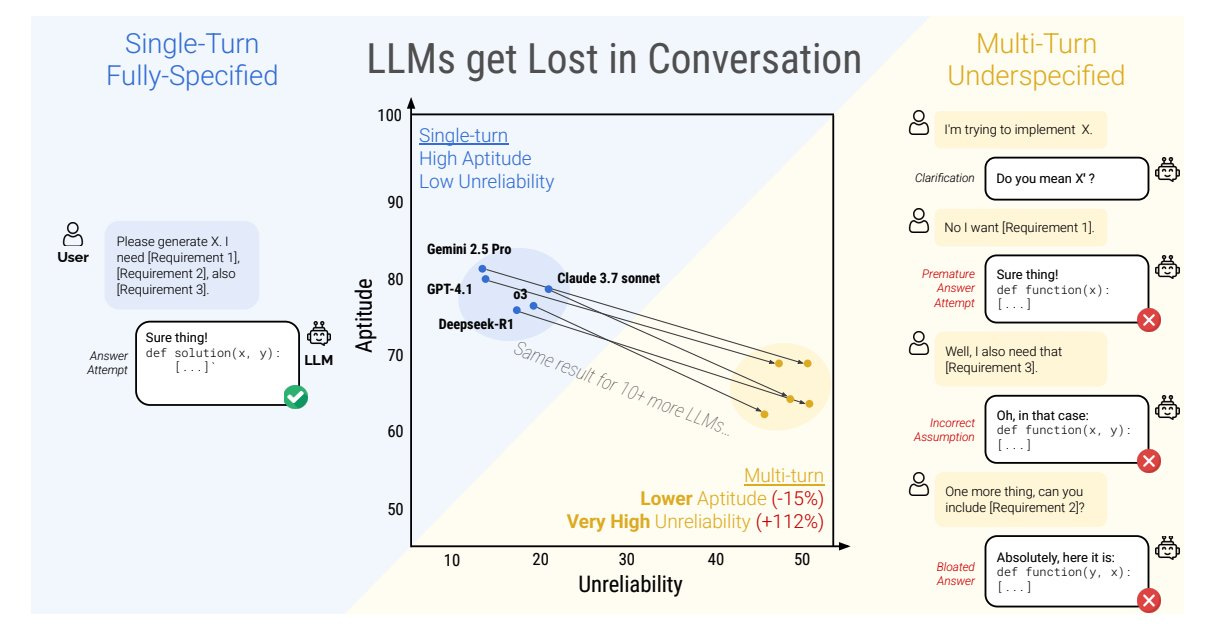

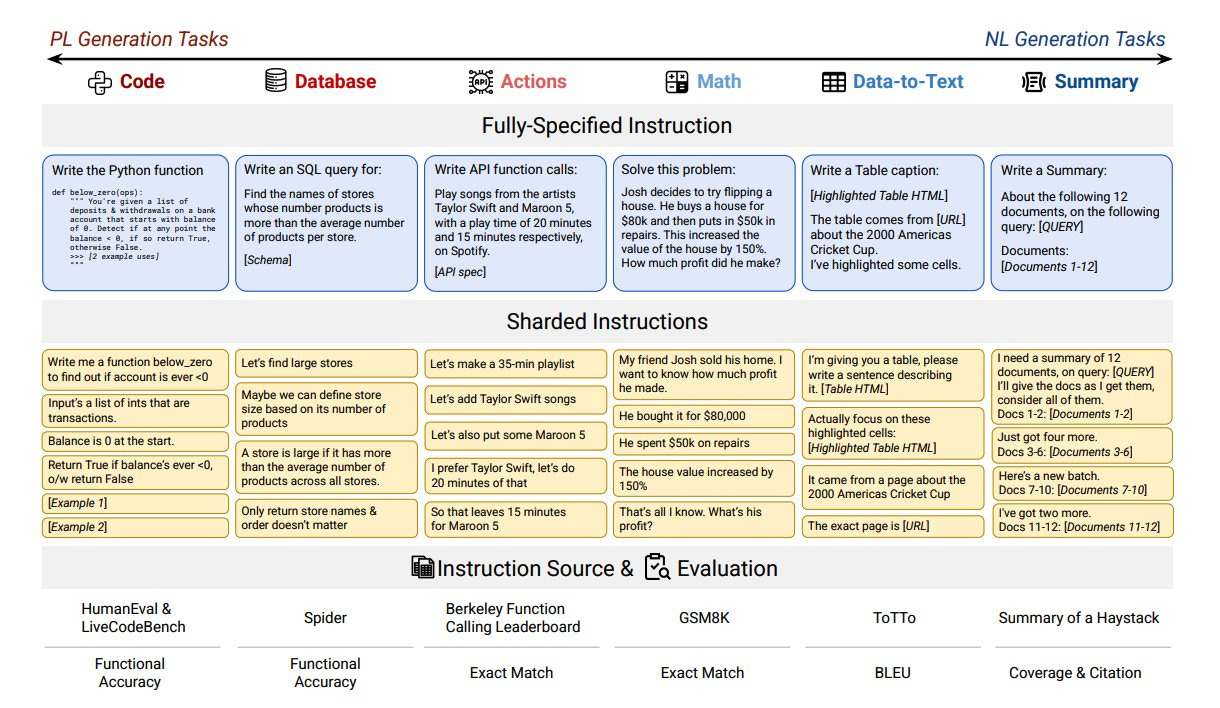

The paper investigates how LLMs perform in realistic, multi-turn conversational settings where user instructions are often underspecified and clarified over several turns. I keep telling devs to spend time preparing those initial instructions. Prompt engineering is important.

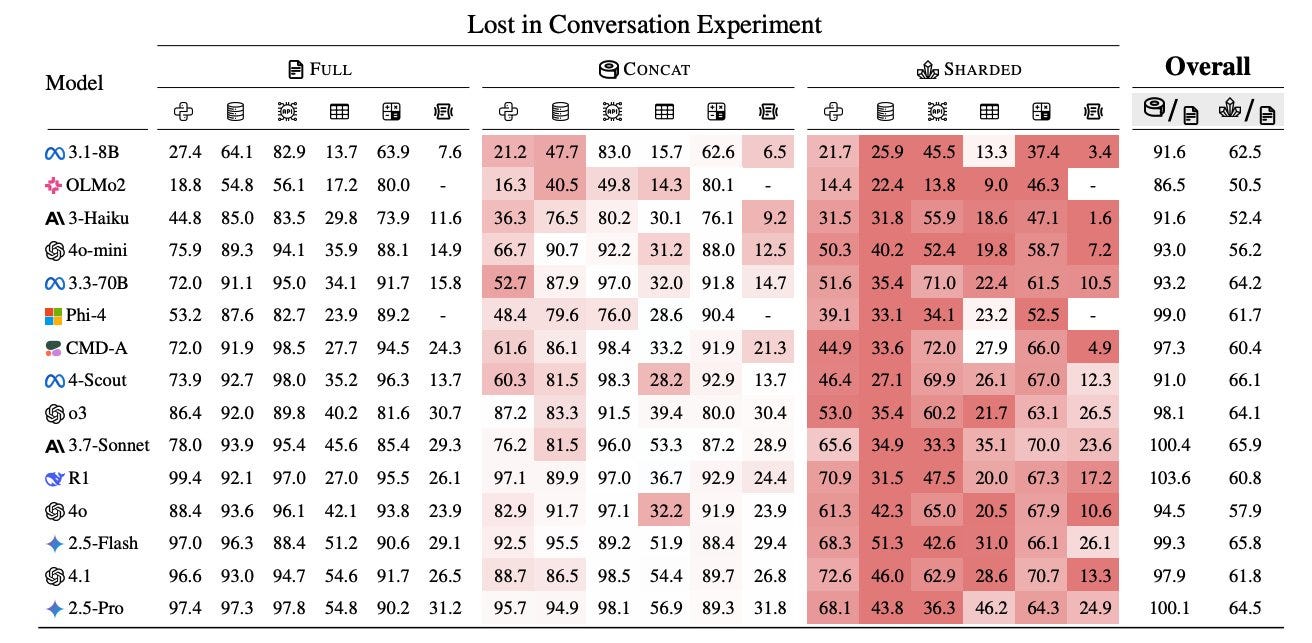

The authors conduct large-scale simulations across 15 top LLMs (including GPT-4.1, Gemini 2.5 Pro, Claude 3.7 Sonnet, DeepSeek-R1, and others) over six generation tasks (code, math, SQL, API calls, data-to-text, and document summarization).

Severe Performance Drop in Multi-Turn Settings

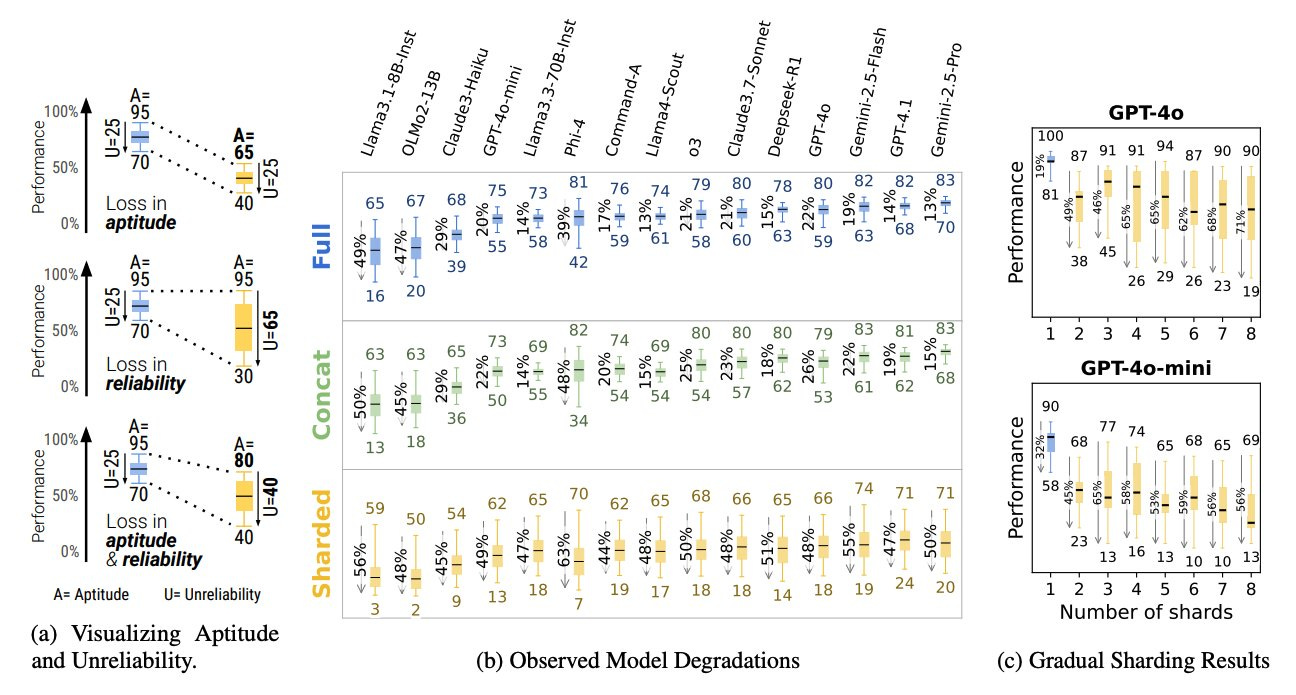

All tested LLMs show significantly worse performance in multi-turn, underspecified conversations compared to single-turn, fully-specified instructions. The average performance drop is 39% across six tasks, even for SoTA models.

For example, models with >90% accuracy in single-turn settings often drop to ~60% in multi-turn settings.

Degradation Is Due to Unreliability, Not Just Aptitude

The performance loss decomposes into a modest decrease in best-case capability (aptitude, -15%) and a dramatic increase in unreliability (+112%).

In multi-turn settings, the gap between the best and worst response widens substantially, meaning LLMs become much less consistent and predictable.
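To make the aptitude/unreliability split concrete, here is a minimal Python sketch. It assumes the definitions as I read them in the paper: run the same instruction many times, take aptitude as the 90th-percentile score and unreliability as the gap between the 90th- and 10th-percentile scores. The function names are mine, not the paper's, and the scores in the demo are made up. The -15% and +112% figures above read as relative changes between settings; `relative_change` shows that arithmetic (a fall from 90 to 60 is a 33% drop).

```python
def percentile(scores: list[float], q: float) -> float:
    """Percentile with linear interpolation between ranks (q in [0, 100])."""
    if not scores:
        raise ValueError("need at least one score")
    ordered = sorted(scores)
    pos = (len(ordered) - 1) * q / 100
    lower = int(pos)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (pos - lower)


def decompose(scores: list[float]) -> dict[str, float]:
    """Summarize repeated-run scores (0-100) for one instruction."""
    p90 = percentile(scores, 90)
    p10 = percentile(scores, 10)
    return {
        "average": sum(scores) / len(scores),
        "aptitude": p90,             # best-case capability
        "unreliability": p90 - p10,  # spread between good and bad runs
    }


def relative_change(before: float, after: float) -> float:
    """Relative change in percent between two settings."""
    return (after - before) / before * 100


if __name__ == "__main__":
    # Made-up scores from 10 runs of one task, for illustration only (not from the paper).
    single = [95, 92, 94, 90, 96, 93, 91, 95, 94, 92]
    multi = [90, 40, 85, 20, 70, 95, 30, 60, 88, 50]
    for name, runs in [("single-turn", single), ("multi-turn", multi)]:
        print(name, decompose(runs))
    print(round(relative_change(90, 60), 1))  # -33.3
```

A high average with a small unreliability means the model is consistently good; a similar aptitude with a large unreliability is the multi-turn failure mode described above.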

High-performing models in single-turn settings are just as unreliable as smaller models in multi-turn dialogues. Don't ignore testing and evaluating in multi-turn settings.
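One way to act on this in your own test suite is to run each task twice: once as a single fully-specified prompt, once revealed piece by piece over several turns, repeating each setting several times. A minimal sketch follows, assuming a hypothetical `model(messages) -> str` wrapper around your LLM client and a task-specific `score(answer) -> float` grader. It is a simplified version of the idea, not the paper's exact simulation setup.

```python
from typing import Callable

Message = dict[str, str]  # {"role": ..., "content": ...}, a common chat-API shape
Model = Callable[[list[Message]], str]  # hypothetical wrapper around your LLM client


def run_single_turn(model: Model, shards: list[str]) -> str:
    """Fully specified: every piece of the instruction arrives in one message."""
    return model([{"role": "user", "content": "\n".join(shards)}])


def run_multi_turn(model: Model, shards: list[str]) -> str:
    """Underspecified at first: one piece per turn, model replies kept in history."""
    history: list[Message] = []
    answer = ""
    for shard in shards:
        history.append({"role": "user", "content": shard})
        answer = model(history)
        history.append({"role": "assistant", "content": answer})
    return answer  # only the final answer is scored


def compare(model: Model, shards: list[str], score: Callable[[str], float],
            runs: int = 10) -> dict[str, list[float]]:
    """Repeat both settings so you see the spread across runs, not one lucky sample."""
    return {
        "single_turn": [score(run_single_turn(model, shards)) for _ in range(runs)],
        "multi_turn": [score(run_multi_turn(model, shards)) for _ in range(runs)],
    }


if __name__ == "__main__":
    # Toy stand-in for a real LLM: it only "sees" the latest user message.
    def forgetful_model(messages: list[Message]) -> str:
        return messages[-1]["content"]

    # Toy grader: full marks only if both requirements show up in the answer.
    def score(answer: str) -> float:
        return float("orders table" in answer and "2024" in answer)

    shards = ["Write a SQL query over the orders table.", "Only include 2024 orders."]
    print(compare(forgetful_model, shards, score, runs=3))
    # prints {'single_turn': [1.0, 1.0, 1.0], 'multi_turn': [0.0, 0.0, 0.0]}
```

Feeding the per-setting score lists into an aptitude/unreliability summary shows whether a model that looks great single-turn holds up once instructions arrive in pieces.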

Main reasons LLMs get "lost"

Make premature and often incorrect assumptions early in the conversation.

Attempt full solutions before having all necessary information, leading to “bloated” or off-target answers.

Over-rely on their previous (possibly incorrect) answers, compounding errors as the conversation progresses.

Produce overly verbose outputs, which can further muddle context and confuse subsequent turns.

Pay disproportionate attention to the first and last turns, neglecting information revealed in the middle turns (“loss-in-the-middle” effect).

Task and Model Agnostic

The effect is robust across model size, provider, and task type (except for truly episodic tasks, like translation, where multi-turn does not introduce ambiguity).

Even extra test-time reasoning (as in "reasoning models" like o3 and DeepSeek-R1) does not mitigate the degradation. I've seen this.

I was talking about this in our office hour today, based on observations using Deep Research, which is built with reasoning models.

Agentic and System-Level Fixes Are Only Partially Effective

Recap and “snowball” strategies partially reduce the performance drop, but don’t fully restore single-turn reliability. Recap adds a final turn that restates all previous user instructions; snowball repeats all previous user instructions in each turn.
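Both strategies are easy to apply on the client side. A minimal sketch, assuming the user's instruction arrives as a list of pieces (`shards`, my name) and a chat-style `{"role", "content"}` message format: `snowball_turn` repeats everything said so far before the new piece, and `recap_turn` builds one extra closing turn that restates every instruction (my reading of the paper's recap setup). Neither function comes from the paper's code, and the wording of the injected text is an arbitrary choice.

```python
Message = dict[str, str]  # assumed chat-style message shape


def snowball_turn(shards: list[str], turn: int) -> Message:
    """User message for `turn` (0-based): all earlier pieces repeated, then the new one."""
    earlier = shards[:turn]
    parts = []
    if earlier:
        parts.append("So far you have been told:\n" + "\n".join(f"- {s}" for s in earlier))
    parts.append(shards[turn])
    return {"role": "user", "content": "\n\n".join(parts)}


def recap_turn(shards: list[str]) -> Message:
    """Extra final user message restating every instruction in one place."""
    bullets = "\n".join(f"- {s}" for s in shards)
    return {"role": "user", "content": "To recap, here is everything I need:\n" + bullets}


if __name__ == "__main__":
    shards = [
        "Write a Python function that parses dates.",
        "Only accept ISO 8601 strings.",
        "Return None on invalid input.",
    ]
    print(snowball_turn(shards, 2)["content"])
    print()
    print(recap_turn(shards)["content"])
```

Snowball keeps every turn self-contained at the cost of longer prompts; recap is cheaper but only helps at the end, after the model may already have committed to early assumptions.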

Lowering generation randomness (temperature) also has a limited effect; unreliability persists even at T=0.

Good context management and memory solutions play an important role here.

Practical Recommendations

Users are better off consolidating all requirements into a single prompt rather than clarifying over multiple turns.

If a conversation goes off-track, starting a new session with a consolidated summary leads to better outcomes.
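Here is a minimal sketch of the "start over with a consolidated summary" advice, assuming an OpenAI-style message list. The helper `restart_with_summary` is hypothetical: it keeps only what the user asked for, drops the assistant's earlier (possibly wrong) attempts, and folds everything into one fresh single-turn prompt. A real system might ask an LLM to write the summary instead; plain concatenation is the simplest stand-in.

```python
from typing import Optional

Message = dict[str, str]  # assumed OpenAI-style {"role": ..., "content": ...} message


def restart_with_summary(history: list[Message],
                         system_prompt: Optional[str] = None) -> list[Message]:
    """Start a fresh session whose single user turn lists every requirement so far.

    Assistant turns are dropped on purpose: they may contain the early, wrong
    assumptions that sent the conversation off-track.
    """
    requirements = [m["content"].strip() for m in history if m["role"] == "user"]
    requirements = [r for r in requirements if r]  # skip empty user turns
    numbered = "\n".join(f"{i}. {req}" for i, req in enumerate(requirements, start=1))
    fresh: list[Message] = []
    if system_prompt:
        fresh.append({"role": "system", "content": system_prompt})
    fresh.append({"role": "user",
                  "content": "Please complete the task below. All requirements:\n" + numbered})
    return fresh


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Write a SQL query for monthly revenue."},
        {"role": "assistant", "content": "Here is a query based on the orders table."},
        {"role": "user", "content": "Use the payments table instead."},
    ]
    for message in restart_with_summary(history):
        print(message["content"])
```

The new session sees the requirements exactly once, in order, without the earlier off-target answers competing for attention.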

System builders and model developers are urged to prioritize reliability in multi-turn contexts, not just raw capability. This is especially true if you are building complex agentic systems, where these issues have an even larger impact.

LLMs are really weird. And all this weirdness is creeping into the latest models too, just in more subtle ways. Be careful out there, devs.

More insights and paper here: https://arxiv.org/abs/2505.06120

I am going to go over this paper and what it means for devs building with LLMs and agentic systems. It will be available to our academy members here: https://dair-ai.thinkific.com

Your AI Coding Assistant Has Amnesia. Here's How to Fix It.

The goldfish memory problem is costing developers hours every week.

You've been there. You spent 30 minutes explaining your project architecture to Claude. You walked it through your authentication flow, your database schema, your coding conventions. It gave you perfect code.

The next day, you start a new session.

"Can you help me add a new endpoint?"

"I'd be happy to help! Could you tell me about your project structure and what frameworks you're using?"

Gone. All of it. Every decision, every pattern, every preference, wiped clean.

The Hidden Cost of AI Amnesia

I started tracking how much time I spent re-explaining context to AI tools:

Monday: 12 minutes explaining we use Prisma, not Drizzle

Tuesday: 8 minutes re-describing the error handling pattern

Wednesday: 15 minutes walking through the auth flow again

Thursday: 10 minutes explaining why we chose that folder structure

45 minutes in one week. Just on context that the AI already "knew" — and forgot. Multiply that across a team of 5 developers. That's nearly 4 hours per week of pure waste.

What If Your AI Actually Remembered?

This is why I built VasperaMemory — a persistent memory layer for AI coding assistants. Here's how it works:

npx vasperamemory connect

That's it. One command. VasperaMemory automatically:

Indexes your codebase — functions, classes, relationships

Captures decisions — every architectural choice, every pattern

Learns your preferences — code style, naming conventions, what you reject

Syncs across tools — Claude, Cursor, Windsurf, Copilot all share the same memory

The next time you ask your AI about authentication, it already knows:

"Based on your project's auth patterns, you're using JWT with refresh tokens stored in httpOnly cookies. Your auth middleware is in src/middleware/auth.ts. Last week you decided against using Passport.js because of the overhead. Here's code that follows your conventions..."

The Technical Magic

Under the hood, VasperaMemory uses:

Graph-augmented retrieval — not just keyword matching, but understanding relationships between code entities

Temporal scoring — recent decisions weighted higher than old ones

Entity extraction — automatically maps functions, classes, and their dependencies

Cross-tool sync — memories captured in Cursor are available in Claude Code

It's not just a vector database. It's a knowledge graph that evolves with your codebase.
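Temporal scoring of the kind listed above is often done with exponential decay. The sketch below is a generic illustration, not VasperaMemory's implementation (which the post does not describe): each memory's query similarity is multiplied by a weight that halves every `half_life_days`, an assumed parameter. The `Memory` class and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    similarity: float  # relevance to the current query, e.g. from embeddings (0..1)
    age_days: float    # how long ago the decision was recorded


def temporal_score(m: Memory, half_life_days: float = 30.0) -> float:
    """Similarity scaled by exponential decay: the weight halves every half_life_days."""
    decay = 0.5 ** (m.age_days / half_life_days)
    return m.similarity * decay


def rank(memories: list[Memory], half_life_days: float = 30.0) -> list[Memory]:
    """Most relevant-and-recent memories first."""
    return sorted(memories, key=lambda m: temporal_score(m, half_life_days), reverse=True)


if __name__ == "__main__":
    memories = [
        Memory("Use Passport.js for auth", similarity=0.9, age_days=90),
        Memory("Dropped Passport.js; auth is custom JWT middleware", similarity=0.8, age_days=7),
    ]
    for m in rank(memories):
        print(f"{temporal_score(m):.3f}  {m.text}")
```

With a 30-day half-life, a decision from three months ago keeps one eighth of its weight, so a fresher but slightly less similar memory can outrank it.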

What Developers Are Saying

"I onboarded a new dev last week. Instead of 3 days of context dumping, I pointed them to VasperaMemory. Their AI already knew everything about the project."

"Finally, Claude remembers that I hate semicolons."

"The error fix memory alone has saved me hours. It remembers how we fixed that weird Prisma connection issue 2 months ago."

Free to Start

VasperaMemory is free for individual developers. No credit card. No trial period. Just connect and start building. Team features (shared memories, role-based access, onboarding mode) are coming soon. → https://vasperamemory.com/

Your AI will never forget again.

Great post, and spot on. Except, then it's not really conversational, is it?