Deep Agents

On the future of AI Agents.

Most agents today are shallow.

They easily fall apart on long, multi-step problems (e.g., deep research or agentic coding).

That’s changing fast!

We’re entering the era of “Deep Agents”: AI systems that plan strategically, remember, and delegate intelligently to solve very complex problems.

We at the DAIR.AI Academy, along with individuals from LangChain, Claude Code, and, more recently, Philipp Schmid, have been documenting this idea.

Here’s roughly the core idea behind Deep Agents (based on my own thoughts and notes that I’ve gathered from others):

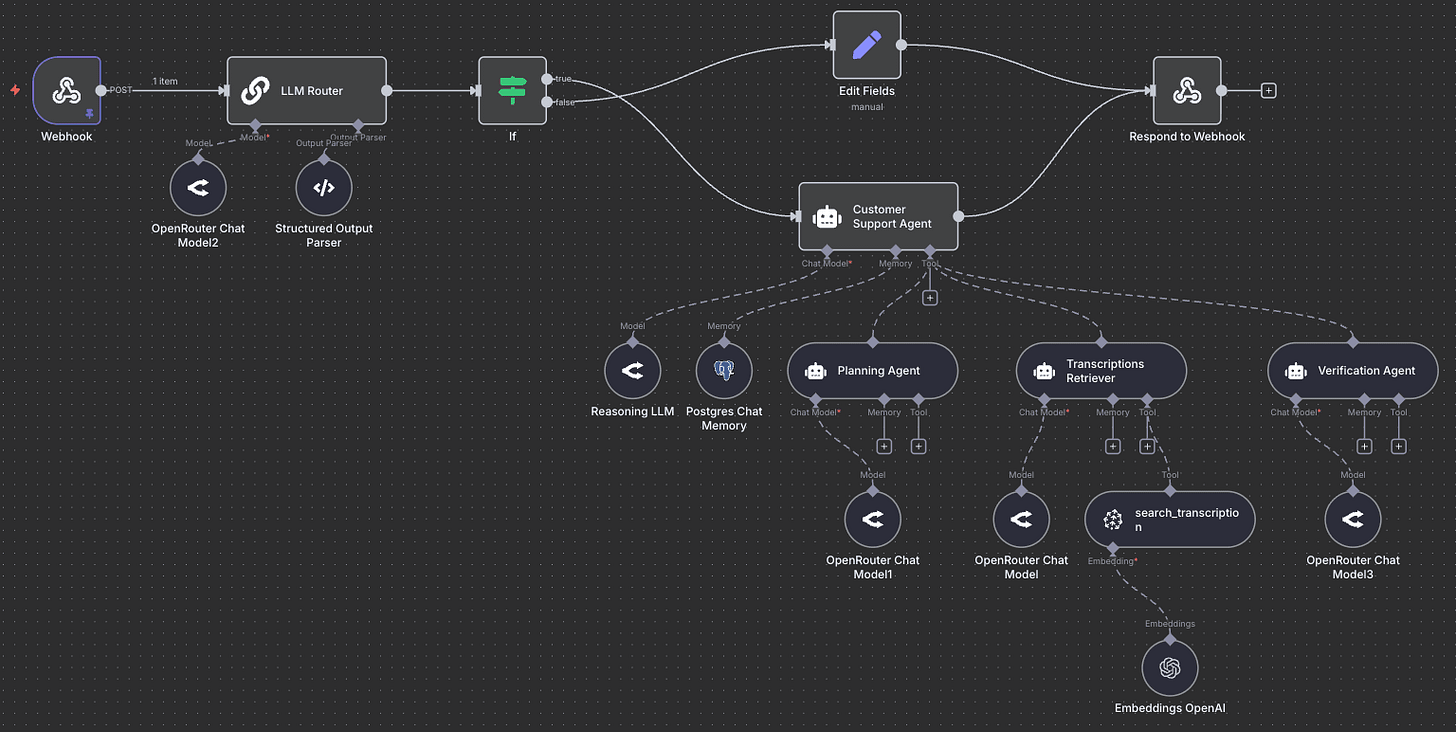

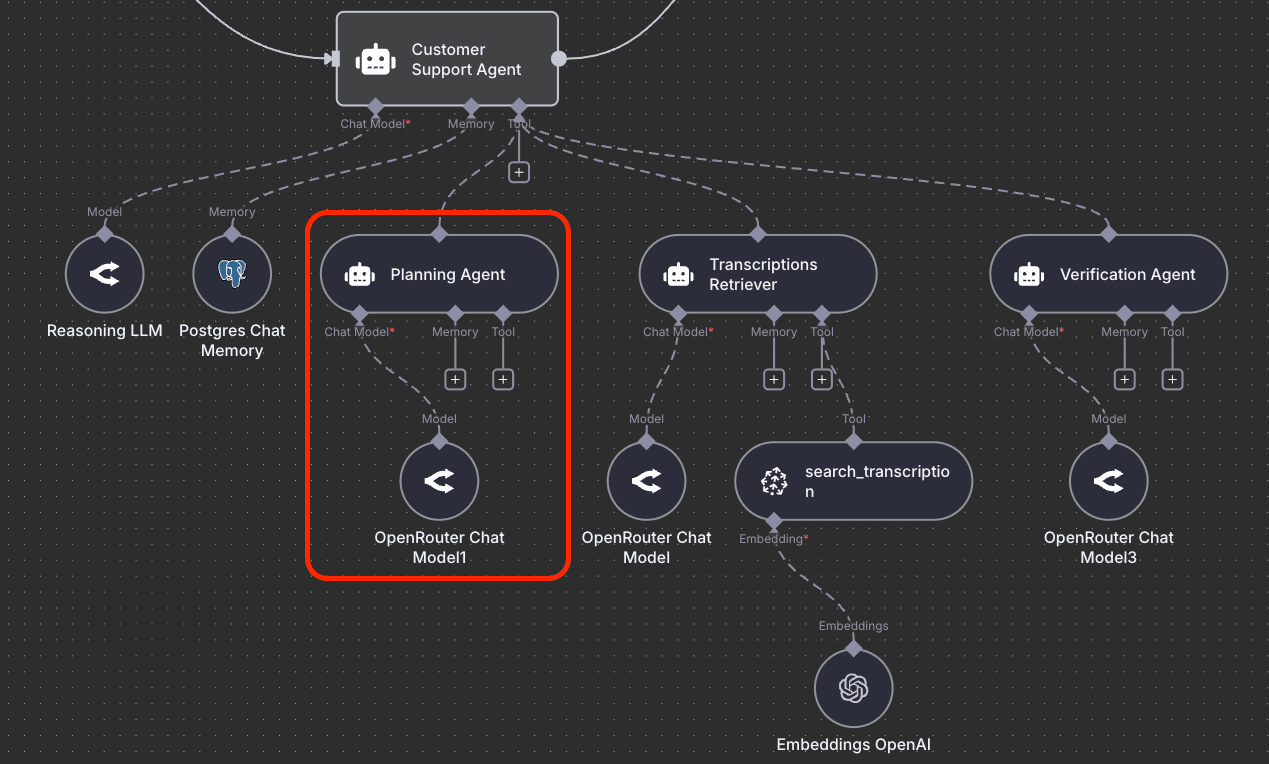

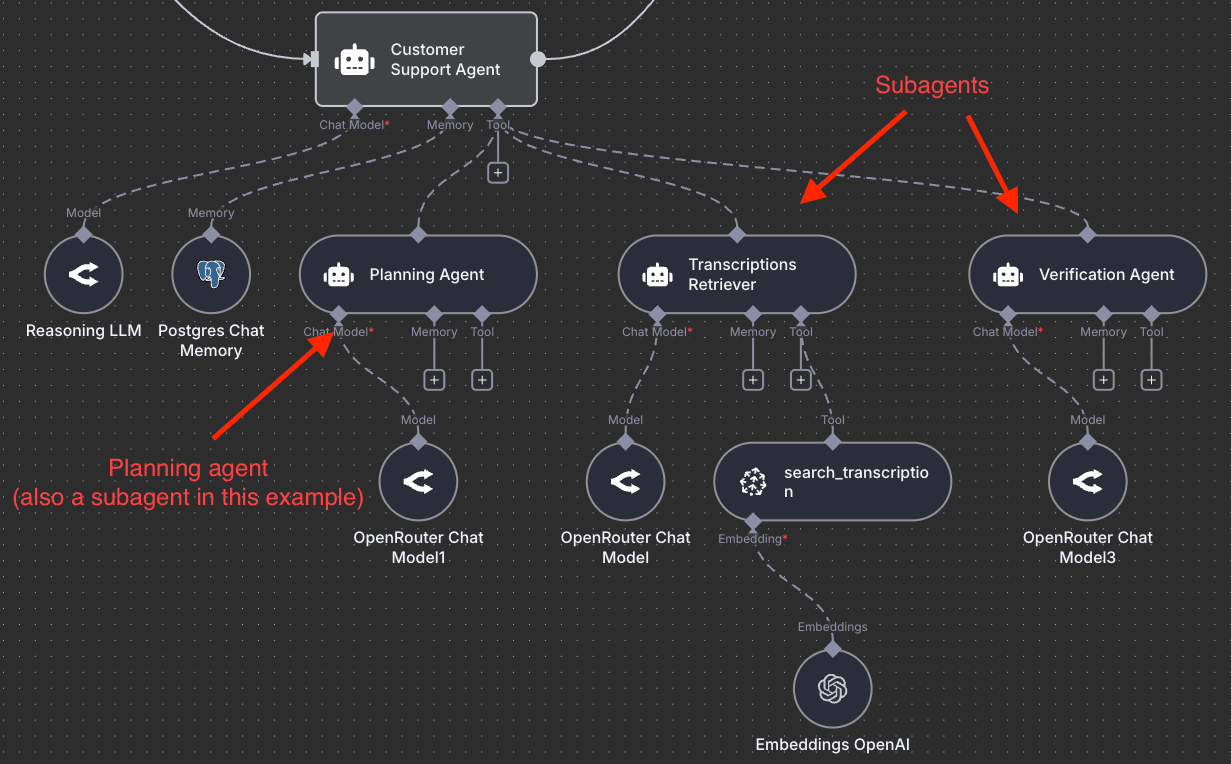

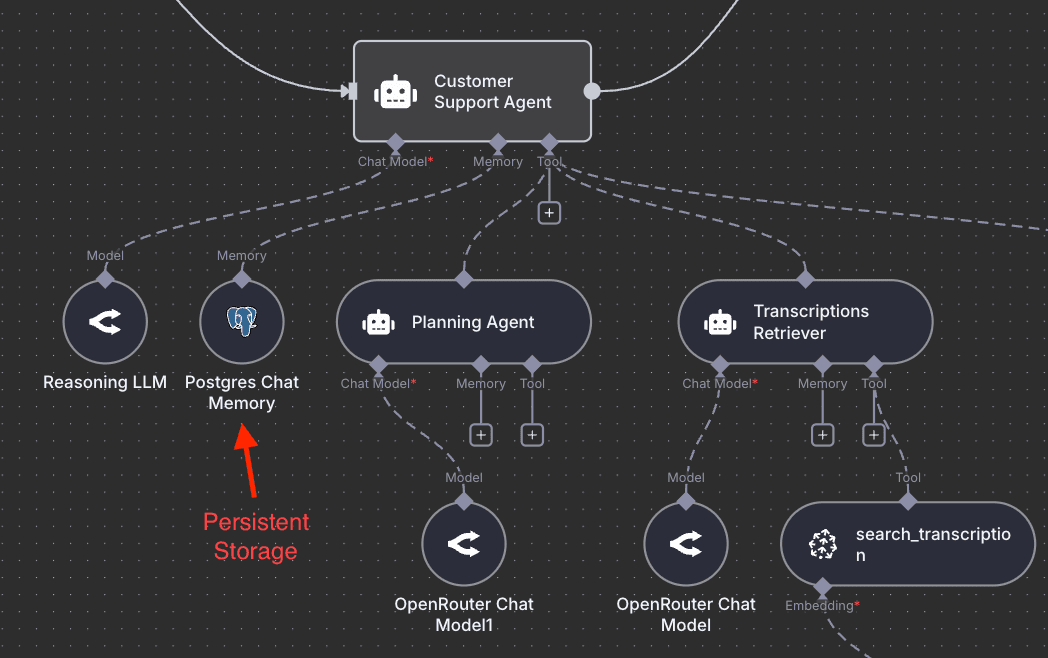

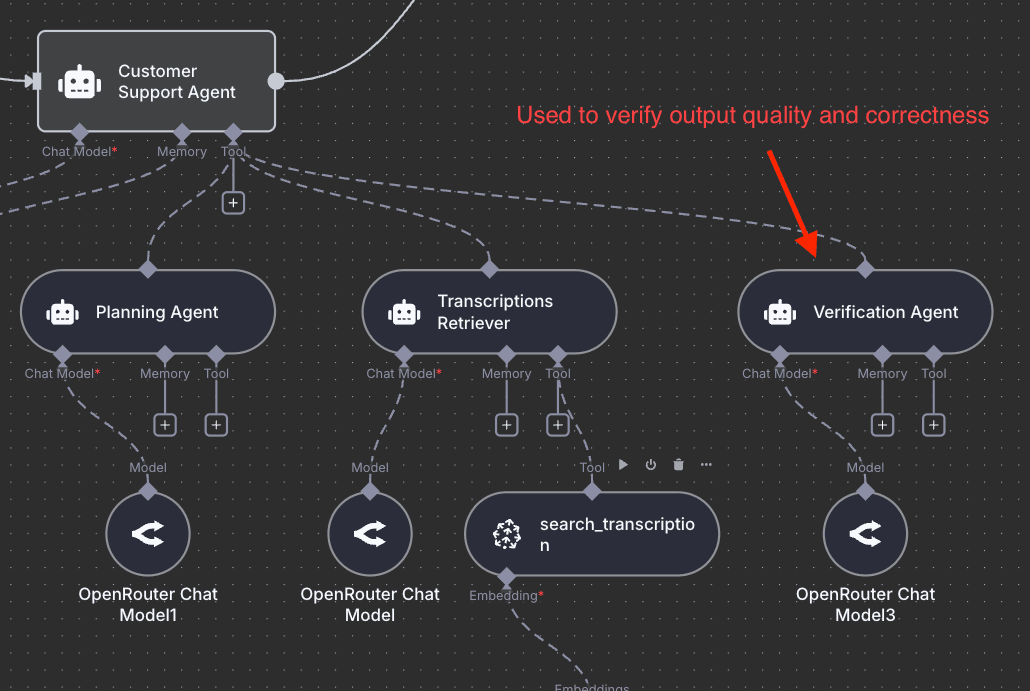

To better illustrate the idea of a deep agent, here is an example of a minimal deep agent built to power the DAIR.AI Academy’s customer support system, intended for students to ask questions regarding our courses:

Planning

Instead of reasoning ad hoc inside a single context window, Deep Agents maintain structured task plans they can update, retry, and recover from. Think of it as a living to-do list that guides the agent toward its long-term goal. To experience this, try planning with Claude Code or Codex; the results are significantly better when you have the agent plan before it executes any task.

We also wrote recently about brainstorming for longer with Claude Code, which shows the power of planning, expert context, and keeping a human in the loop (your expertise gives you an important edge when working with deep agents). Planning will also be critical for long-horizon problems (think agents for scientific discovery, which comes next).
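To make the “living to-do list” idea concrete, here is a minimal sketch of a plan an agent could update, retry, and recover from. It is only an illustration under my own assumptions: the names `Plan`, `Step`, `Status`, `run`, and `flaky_execute` are hypothetical, and the executor is a stub standing in for a real LLM or tool call.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    DONE = "done"
    FAILED = "failed"


@dataclass
class Step:
    description: str
    status: Status = Status.PENDING
    attempts: int = 0


@dataclass
class Plan:
    goal: str
    steps: list[Step] = field(default_factory=list)


def run(plan, execute, max_attempts=2):
    """Work through the plan in order, retrying each step up to max_attempts."""
    for step in plan.steps:
        while step.status is not Status.DONE and step.attempts < max_attempts:
            step.attempts += 1
            step.status = Status.DONE if execute(step) else Status.FAILED
        if step.status is not Status.DONE:
            # Stop here so a human or a replanning step can revise the plan.
            break
    return plan


# Hypothetical executor: fails the first time it sees a step, then succeeds.
seen = set()


def flaky_execute(step):
    first_time = step.description not in seen
    seen.add(step.description)
    return not first_time


plan = run(
    Plan(
        "answer a student question",
        [Step("search the knowledge base"), Step("draft a reply")],
    ),
    flaky_execute,
)
for step in plan.steps:
    print(step.description, step.status.value, step.attempts)
```

Because the plan is plain data rather than something buried in the conversation, it can be saved, inspected, or edited between attempts, which is what lets the agent recover instead of starting over.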

Orchestrator & Sub-agent Architecture

One big agent (typically with a very long context) is no longer enough. I’ve seen arguments against multi-agent systems and in favor of monolithic systems, but I’m skeptical about this.

The orchestrator-sub-agent architecture is one of the most powerful LLM-based agentic architectures you can leverage today for any domain you can imagine. An orchestrator manages specialized sub-agents such as search agents, coders, KB retrievers, analysts, verifiers, and writers, each with its own clean context and domain focus.

The orchestrator delegates intelligently, sub-agents execute efficiently, and the orchestrator integrates their outputs into a coherent result. Claude Code popularized this approach for coding. Sub-agents, it turns out, are particularly useful for managing context efficiently (through separation of concerns).
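The delegation pattern described above can be sketched in a few lines. This is a toy under stated assumptions, not how Claude Code or any framework implements it: `Orchestrator`, `search_agent`, and `writer_agent` are hypothetical names, the sub-agents are plain functions instead of LLM calls, and the routing is hard-coded where a real orchestrator would let the model decide.

```python
from typing import Callable

# A sub-agent is modeled as a function from a task string to a result string.
# In a real system each one would wrap its own LLM call, prompt, and tools.
SubAgent = Callable[[str], str]


def search_agent(task: str) -> str:
    return f"[search] results for: {task}"


def writer_agent(task: str) -> str:
    return f"[writer] draft based on: {task}"


class Orchestrator:
    def __init__(self, agents: dict[str, SubAgent]):
        self.agents = agents

    def delegate(self, name: str, task: str) -> str:
        # Only the task text is passed along, so the sub-agent starts from a
        # clean context instead of the orchestrator's full history.
        return self.agents[name](task)

    def run(self, question: str) -> str:
        # Hard-coded two-step route for illustration only.
        findings = self.delegate("search", question)
        return self.delegate("writer", findings)


orchestrator = Orchestrator({"search": search_agent, "writer": writer_agent})
print(orchestrator.run("refund policy"))
```

The point of the sketch is the boundary: each sub-agent sees only what it is handed, and only its final output flows back to the orchestrator.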

I wrote a few notes on the power of using orchestrator and subagents here and here.

Context Retrieval and Agentic Search

Deep Agents don’t rely on conversation history alone. They store intermediate work in external memory like files, notes, vectors, or databases, letting them reference what matters without overloading the model’s context. High-quality structured memory is a thing of beauty.

Take a look at recent works like ReasoningBank and Agentic Context Engineering for some really cool ideas on how to better optimize memory building and retrieval. Building with the orchestrator-subagents architecture means that you can also leverage hybrid memory techniques (e.g., agentic search + semantic search), and you can let the agent decide what strategy to use.
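As a rough illustration of external memory, here is a sketch of a file-backed note store with a simple keyword search. Everything in it is an assumption made for the example: the `FileMemory` class, the JSON-lines layout, and the sample notes are hypothetical, and the keyword overlap score is only a stand-in for the agentic or semantic search a real system would use.

```python
import json
import tempfile
from pathlib import Path


class FileMemory:
    """Append-only notes stored as JSON lines, outside the model's context."""

    def __init__(self, path: Path):
        self.path = path

    def write(self, topic: str, text: str) -> None:
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps({"topic": topic, "text": text}) + "\n")

    def search(self, query: str, limit: int = 3) -> list[dict]:
        """Return up to `limit` notes sharing at least one word with the query."""
        if not self.path.exists():
            return []
        terms = set(query.lower().split())
        scored = []
        with self.path.open(encoding="utf-8") as f:
            for line in f:
                note = json.loads(line)
                words = set(f"{note['topic']} {note['text']}".lower().split())
                score = len(terms & words)
                if score:
                    scored.append((score, note))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [note for _, note in scored[:limit]]


with tempfile.TemporaryDirectory() as tmp:
    memory = FileMemory(Path(tmp) / "notes.jsonl")
    memory.write("refunds", "refund window is listed on the course page")
    memory.write("schedule", "cohort dates are posted monthly")
    hits = memory.search("refund window")
    print([note["topic"] for note in hits])
```

Only the handful of notes returned by `search` needs to enter the prompt, which is how the agent references what matters without carrying the whole history in context.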

Context Engineering

One of the worst things you can do when interacting with these types of agents is to give underspecified instructions/prompts. Prompt engineering was and is important, but we will use the new term context engineering to emphasize the importance of building context for agents.

The instructions need to be more explicit, detailed, and intentional to define when to plan, when to use a sub-agent, how to name files, and how to collaborate with humans. Part of context engineering also involves efforts around structured outputs, system prompt optimization, compacting context, evaluating context effectiveness, and optimizing tool definitions.
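One way to keep those instructions explicit is to write them down as structured data and render the system prompt from it. The sketch below assumes a hypothetical `AgentPolicy` class with made-up rule text; the section titles simply mirror the four areas mentioned above and are not a standard of any kind.

```python
from dataclasses import dataclass


@dataclass
class AgentPolicy:
    when_to_plan: str
    when_to_delegate: str
    file_naming: str
    human_collaboration: str

    def to_system_prompt(self) -> str:
        """Render each rule under its own heading so none is left implicit."""
        sections = {
            "Planning": self.when_to_plan,
            "Sub-agents": self.when_to_delegate,
            "Files": self.file_naming,
            "Working with humans": self.human_collaboration,
        }
        return "\n\n".join(
            f"## {title}\n{rule}" for title, rule in sections.items()
        )


# Example rules are placeholders, not recommendations.
policy = AgentPolicy(
    when_to_plan="Write a plan before any task with more than two steps.",
    when_to_delegate="Send web lookups to the search sub-agent.",
    file_naming="Save notes as notes/<topic>.md using lowercase names.",
    human_collaboration="Ask for confirmation before sending any reply.",
)
print(policy.to_system_prompt())
```

Keeping the rules in one structure also makes it easier to review them, version them, and test how changes affect the agent.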

Read our previous guide on context engineering to learn more: Context Engineering Deep Dive

Verification

Next to context engineering, verification is one of the most important components of an agentic system (though less often discussed). Verification boils down to checking outputs, which can be automated (LLM-as-a-Judge) or done by a human. Because modern LLMs are so effective at generating text (in domains like math and coding), it’s easy to forget that they still suffer from hallucination, sycophancy, prompt injection, and a number of other issues. Verification helps make your agents more reliable and more production-ready. You can build good verifiers by leveraging systematic evaluation pipelines.
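A verification step can be as simple as a generate, check, and retry loop. The sketch below is illustrative only: `verified_answer`, `generate`, and `judge` are hypothetical names, and the judge is a trivial string check standing in for an LLM-as-a-Judge call or a human reviewer.

```python
from typing import Callable, Optional, Tuple


def verified_answer(
    generate: Callable[[str], str],
    verify: Callable[[str], Tuple[bool, str]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Return the first draft that passes verification, or None if none does."""
    feedback = ""
    for _ in range(max_rounds):
        draft = generate(feedback)
        ok, feedback = verify(draft)
        if ok:
            return draft
    return None  # the caller can escalate to a human at this point


# Stub generator: adds a citation only after it has received feedback.
def generate(feedback: str) -> str:
    return "answer [source: kb]" if feedback else "answer"


# Stub judge: accepts a draft only if it cites a source.
def judge(draft: str) -> Tuple[bool, str]:
    ok = "[source:" in draft
    return ok, ("" if ok else "cite a source")


print(verified_answer(generate, judge))
```

Returning `None` instead of the last failed draft is a deliberate choice in this sketch: an unverified answer never reaches the user silently.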

Final Words

This is a huge shift in how we build with AI agents. Deep agents also feel like an important building block for what comes next: personalized proactive agents that can act on our behalf. I will write more on proactive agents in a future post.

If you want to catch upcoming posts on how to effectively build AI Agents, consider upgrading to our paid tier.

I’ve also been teaching these ideas to agent builders over the past couple of months. If you are interested in more hands-on experience building deep agents, check out the new course in our academy: https://dair-ai.thinkific.com/courses/agents-with-n8n

The figures you see in the post describe an agentic RAG system that students need to build for the course final project.

This post is based on our new course “Building Effective AI Agents with n8n”, which provides comprehensive insights, downloadable templates, prompts, and advanced tips for designing and implementing deep agents.

So I subscribed to the paid version, but I don't see anything different with it. Thanks for the article, of course, but it seems paid is the same as free.

Great breakdown. In my structure, I argue for the Verification agent to be split into multiple subagents (I refer to these as Antagonists) and have seen a spike in quality, with only a small drag on cycle times per task.

The shift from shallow to deep agents represents a real opportunity in terms of quality of output and the future of AI utility. Thanks for sharing your thoughts on this!