🤖AI Agents Weekly: Project Genie, Kimi K2.5, Interactive Tools in Claude, Qwen3-Max-Thinking, Mistral Vibe 2.0, Agentic Vision

In today’s issue:

DeepMind launches Project Genie world model

Kimi K2.5 introduces Agent Swarm technology

Claude gets interactive tools via MCP Apps

Alibaba releases Qwen3-Max-Thinking

Mistral launches Vibe 2.0 coding agent

Google introduces Agentic Vision in Gemini 3 Flash

Anthropic study on AI assistance and coding skills

Dario Amodei on the adolescence of technology

AGENTS.md outperforms skills in agent evals

Cursor details secure codebase indexing

And all the top AI dev news, papers, and tools.

Top Stories

Project Genie: Google DeepMind’s AI World Model

Google DeepMind launched Project Genie, a prototype built on its Genie 3 world model, which generates dynamic, navigable environments in real time. Project Genie is now available to AI Ultra subscribers ($250/month) in the US. It marks a major step toward commercializing world model research, which aims to train AI agents in rich simulated environments.

Real-time generation - Given a text prompt, Genie 3 generates interactive worlds at 24 fps and 720p resolution, simulating physics and interactions as users navigate. Generations are currently limited to 60 seconds.

Scene consistency - The model remembers the state of previously generated scenes. A user who returns to the same spot a minute later finds it as they left it, which enables coherent exploration across the environment.

World simulation - Unlike static 3D snapshots, Genie 3 learns environmental dynamics from observed action-consequence sequences rather than relying on traditional game engines or pre-programmed rules.

Path to AGI - DeepMind positions world models as a key stepping stone toward AGI. They allow AI agents to be trained in an unlimited variety of simulated environments, spanning robotics, animation, fiction, real-world locations, and historical settings.
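The real-time figures quoted above imply a concrete per-frame budget. The sketch below only does that arithmetic. It assumes "720p" means 1280x720 and says nothing about how Genie 3 itself is implemented.

```python
# Back-of-the-envelope arithmetic for the quoted Genie 3 figures:
# 24 frames per second, 720p (assumed here to be 1280x720), 60-second cap.
FPS = 24
WIDTH, HEIGHT = 1280, 720
MAX_SECONDS = 60


def frame_budget_ms(fps: int) -> float:
    """Time available to generate each frame, in milliseconds."""
    return 1000.0 / fps


def frames_per_session(fps: int, seconds: int) -> int:
    """Total frames produced in one maximum-length generation."""
    return fps * seconds


def pixels_per_second(fps: int, width: int, height: int) -> int:
    """Raw pixel throughput the generator must sustain."""
    return fps * width * height


if __name__ == "__main__":
    print(f"per-frame budget: {frame_budget_ms(FPS):.1f} ms")
    print(f"frames per session: {frames_per_session(FPS, MAX_SECONDS)}")
    print(f"pixels per second: {pixels_per_second(FPS, WIDTH, HEIGHT):,}")
```

At 24 fps, each frame must be produced in about 41.7 ms, and a full 60-second session comes to 1,440 frames.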

Kimi K2.5

Moonshot AI released Kimi K2.5, a native multimodal model built on Kimi K2 through continued pretraining on approximately 15 trillion mixed visual and text tokens. The model introduces Agent Swarm technology and strong visual coding capabilities, which position it among the most capable open-source models available.

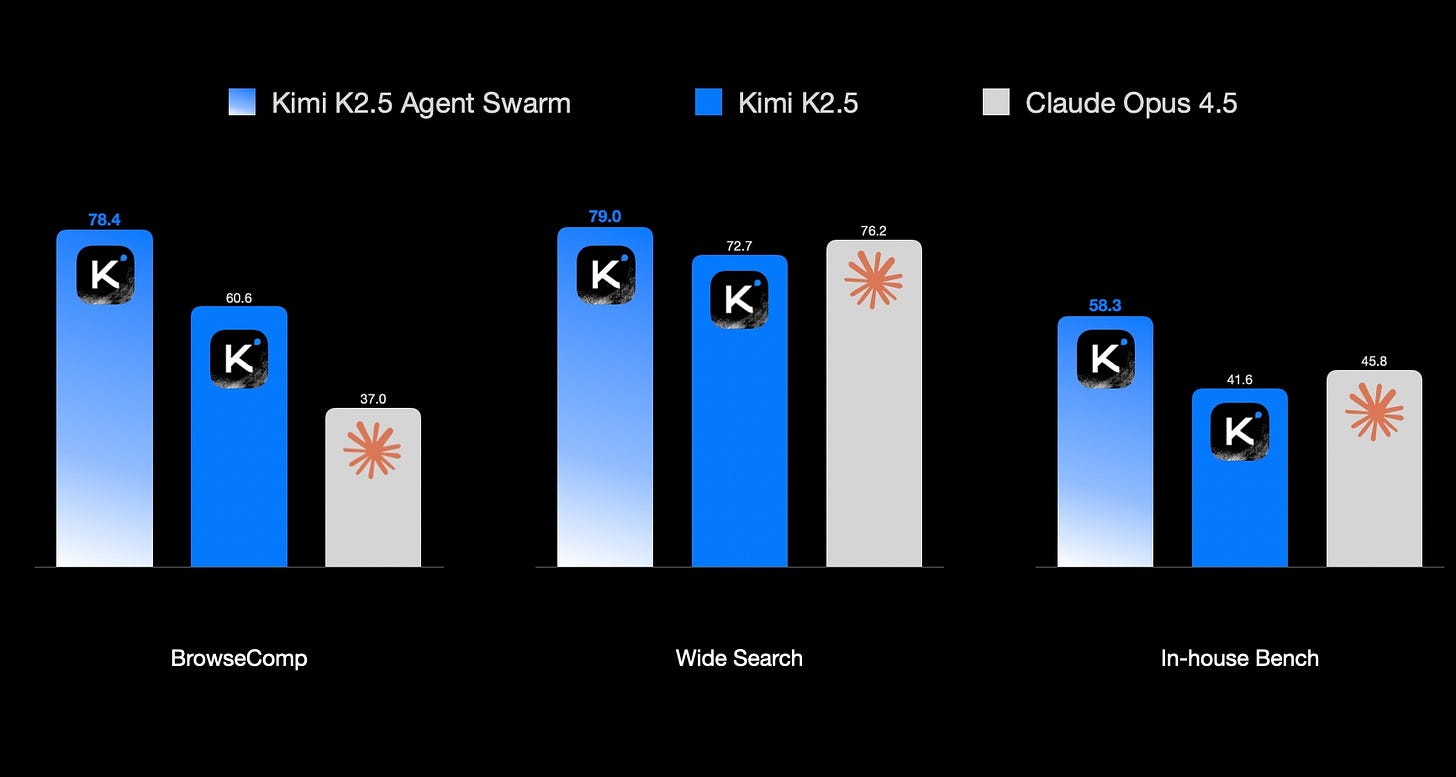

Agent Swarm technology - Without predefined sub-agents or workflows, K2.5 can self-direct up to 100 sub-agents running parallel workflows across up to 1,500 tool calls. Moonshot reports this cuts execution time by up to 4.5x compared with a single-agent setup.

Visual coding - Excels at converting conversations into complete front-end interfaces with interactive layouts and animations, with particular strength in image/video-to-code generation and visual debugging.

Office productivity - Handles high-density, large-scale office work end to end, producing documents, spreadsheets, PDFs, and presentations. It improves on K2 Thinking by 59% and 24% on two internal benchmarks.

Broad access - Available through Kimi.com, the Kimi App, the API platform, and Kimi Code, a terminal-based coding tool compatible with VS Code, Cursor, and Zed.
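The speedup described under Agent Swarm comes from running independent subtasks concurrently instead of one after another. The sketch below shows that generic fan-out pattern with a concurrency cap. It is not Moonshot's implementation; `run_subagent` is a hypothetical stand-in whose sleep mimics model and tool-call latency. The cap of 100 simply mirrors the quoted sub-agent limit.

```python
import asyncio
import time

# Concurrency cap chosen to mirror the "up to 100 sub-agents" figure;
# this is an illustrative assumption, not Kimi K2.5's actual orchestrator.
MAX_SUBAGENTS = 100


class PeakTracker:
    """Records how many sub-agents were running at the same time."""

    def __init__(self) -> None:
        self.active = 0
        self.peak = 0


async def run_subagent(task_id: int, sem: asyncio.Semaphore, tracker: PeakTracker) -> str:
    """Hypothetical sub-agent: the sleep stands in for model and tool-call latency."""
    async with sem:
        tracker.active += 1
        tracker.peak = max(tracker.peak, tracker.active)
        try:
            await asyncio.sleep(0.01)
            return f"result-{task_id}"
        finally:
            tracker.active -= 1


async def orchestrate(num_tasks: int, max_parallel: int = MAX_SUBAGENTS) -> tuple[list[str], int]:
    """Fan out independent subtasks, never exceeding max_parallel at once."""
    sem = asyncio.Semaphore(max_parallel)
    tracker = PeakTracker()
    results = await asyncio.gather(
        *(run_subagent(i, sem, tracker) for i in range(num_tasks))
    )
    # gather preserves input order, so results line up with task ids.
    return list(results), tracker.peak


def timed(num_tasks: int, max_parallel: int) -> float:
    """Wall-clock seconds to finish num_tasks with the given cap."""
    start = time.perf_counter()
    asyncio.run(orchestrate(num_tasks, max_parallel))
    return time.perf_counter() - start


if __name__ == "__main__":
    serial = timed(50, max_parallel=1)  # single-agent baseline: one at a time
    parallel = timed(50, max_parallel=MAX_SUBAGENTS)
    print(f"serial: {serial:.2f}s, parallel: {parallel:.2f}s")
```

In practice, real speedups stay well below the ideal in this toy. Subtasks often depend on one another, and the orchestrator must still merge their results. That is one reason a reported figure such as "up to 4.5x" can sit far below the sub-agent count.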