🤖 AI Agents Weekly: Omnilingual ASR, GPT-5.1, SIMA 2, Context Engineering Whitepaper, Mini-Agent, Marble World Model


In today’s issue:

Microsoft’s AsyncThink enables concurrent AI agent reasoning

Google releases whitepaper on context engineering for agents

Fei-Fei Li explores spatial intelligence as AI’s next frontier

OpenAI launches GPT-5.1 with adaptive reasoning capabilities

DeepMind unveils SIMA 2 gaming agent with self-learning

LangChain DeepAgents 0.2 introduces pluggable backends

Meta’s Omnilingual ASR supports 1,600+ languages

Research shows AI agents boost developer productivity by 39%

ElevenLabs Scribe v2 achieves sub-150ms speech transcription

Top AI dev news, product updates, and more.

Top Stories

The Era of Agentic Organization

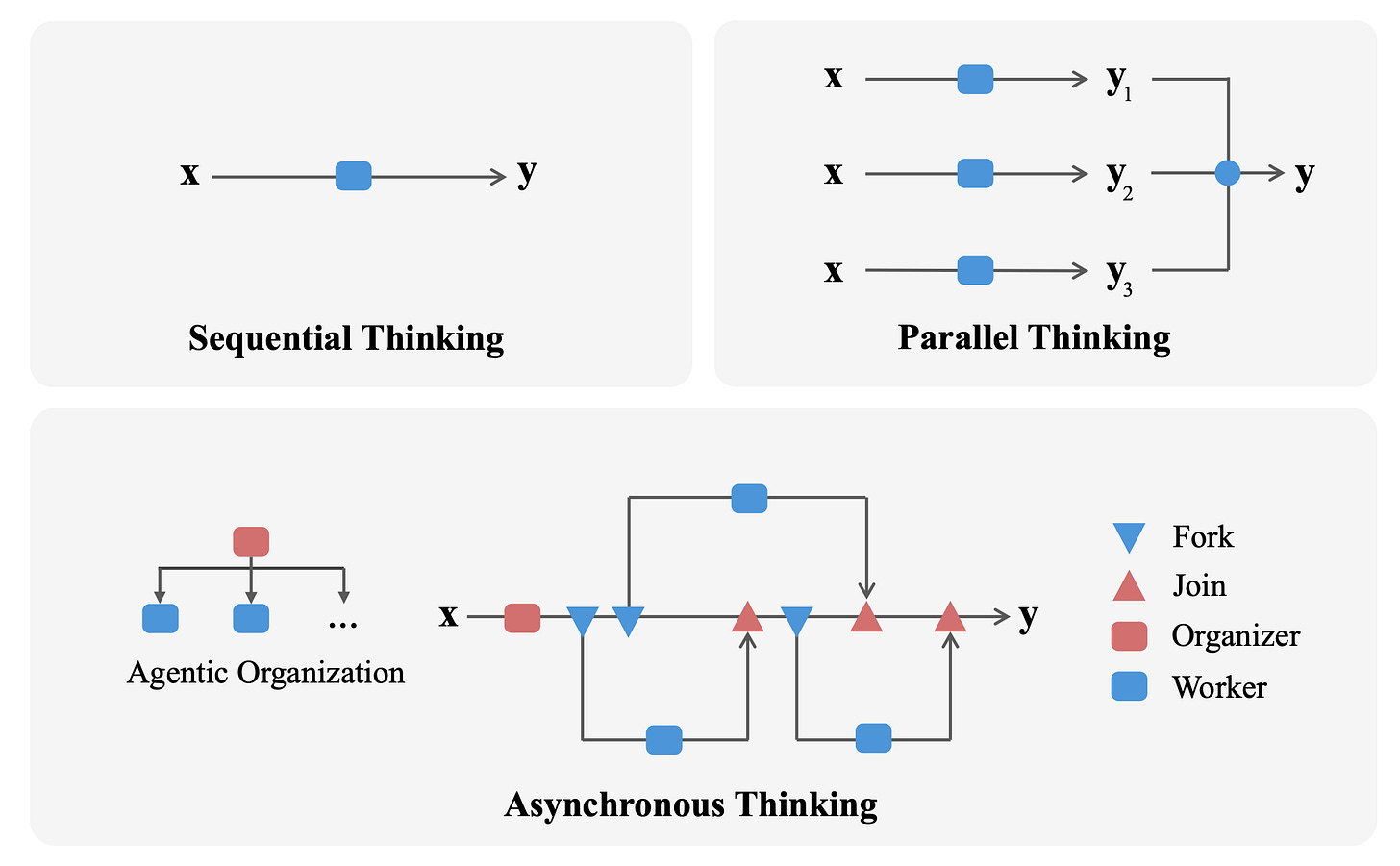

Microsoft Research introduces AsyncThink (Asynchronous Thinking), a reasoning paradigm in which LLMs learn to organize their internal thinking into concurrently executable structures through an organizer-worker protocol. Moving beyond sequential and parallel thinking, AsyncThink lets agents solve complex problems collaboratively by dynamically decomposing tasks and executing sub-queries concurrently.

Organizer-worker thinking protocol: An organizer dynamically assigns sub-queries to workers using Fork and Join actions. The entire protocol operates through pure text generation, making it compatible with existing LLMs without architectural changes.

Learning to organize through RL: Training runs in two stages, cold-start format fine-tuning followed by reinforcement learning with Group Relative Policy Optimization (GRPO). Rewards encourage correctness, format compliance, and thinking concurrency.

Superior accuracy-latency frontier: AsyncThink achieves 28% lower inference latency than parallel thinking while improving accuracy on mathematical reasoning. On AIME-24, it matches parallel thinking’s 38.7% accuracy at a latency of 1,468, versus 2,048 for parallel thinking.

Remarkable generalization: Models trained solely on multi-solution countdown tasks demonstrate zero-shot asynchronous thinking on previously unseen domains, including Sudoku, graph theory, and genetics problems.

Scalability pathways: Future directions include massive agent pools with heterogeneous expert workers, recursive agentic organization where workers can become sub-organizers, and human-AI collaborative frameworks.
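
To make the Fork and Join idea concrete, here is a minimal sketch in Python. It is only an analogy: AsyncThink itself runs the protocol through text generation, whereas this sketch uses `asyncio` tasks to stand in for workers. The names `worker`, `organizer`, and `run_demo` are hypothetical and do not come from the paper, and the worker simply echoes its sub-query instead of calling a model.

```python
import asyncio


async def worker(sub_query: str) -> str:
    """Hypothetical worker: a stand-in for an LLM answering one sub-query."""
    await asyncio.sleep(0.01)  # simulate time spent thinking
    return f"answer({sub_query})"


async def organizer(query: str, sub_queries: list[str]) -> str:
    """Assumed organizer loop: Fork sub-queries, then Join their results."""
    # Fork: start every sub-query as its own task without waiting for it.
    pending = {sq: asyncio.create_task(worker(sq)) for sq in sub_queries}

    # The organizer could keep reasoning here while the workers run.

    # Join: collect each worker's result, in the order the forks were issued.
    results = [await pending[sq] for sq in sub_queries]
    return f"{query} -> " + "; ".join(results)


def run_demo() -> str:
    """Run the organizer on a toy query with two sub-queries."""
    return asyncio.run(organizer("Q", ["a", "b"]))


if __name__ == "__main__":
    print(run_demo())
```

Because both workers run concurrently, the wall-clock time of this toy run is roughly that of the slowest worker and not the sum of the two, which is the intuition behind the latency gains reported above.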