🤖 AI Agents Weekly: GPT-5.3-Codex-Spark, GLM-5, MiniMax M2.5, Recursive Language Models, Harness Engineering, Agentica, and More

In today’s issue:

OpenAI releases GPT-5.3-Codex-Spark

Zhipu AI launches GLM-5 with Agent Mode

MiniMax drops the M2.5 open-source model

Recursive Language Models replace context stuffing

OpenAI ships 1M lines with zero manual code

Agentica pushes ARC-AGI-2 with recursive agents

Chrome launches WebMCP early preview

Anthropic raises $30B at $380B valuation

Excalidraw launches official MCP server

Hive agent framework evolves at runtime

Waymo begins 6th-gen autonomous operations

Gemini 3 Deep Think solves 18 open problems

And all the top AI dev news, papers, and tools.

Top Stories

GPT-5.3-Codex-Spark

OpenAI released GPT-5.3-Codex-Spark, its most capable agentic coding model. It combines frontier coding performance with reasoning and professional knowledge capabilities, and runs 25% faster than its predecessor.

Self-developing model: The Codex team used early versions of GPT-5.3 to debug its own training, manage deployment, and diagnose test results and evaluations, making it OpenAI’s first model that was instrumental in its own development.

Beyond coding: Handles professional knowledge-work outputs like presentations, spreadsheets, and documentation. On GDPval, a knowledge-work benchmark, it wins or ties in 70.9% of evaluations.

Cybersecurity concerns: This is the first model OpenAI has rated “high” for cybersecurity capability under its Preparedness Framework, meaning it could meaningfully enable real-world cyber harm if automated. In response, OpenAI announced a $10M API credits program for cyber defense research.

GLM-5

Zhipu AI launched GLM-5, a 744B-parameter MoE model with 40B active parameters, engineered from the ground up for agentic intelligence and multi-step reasoning. Trained entirely on Huawei Ascend chips using the MindSpore framework, it represents full independence from US-manufactured semiconductor hardware.

Agent Mode: Native capability for autonomous task decomposition, breaking high-level objectives into subtasks with minimal human intervention. Can transform raw prompts into professional documents in .docx, .pdf, and .xlsx formats.

Training scale: Ingested 28.5 trillion tokens during pre-training, a 23.9% increase over GLM-4.7. Uses a novel RL technique that achieves record-low hallucination rates.

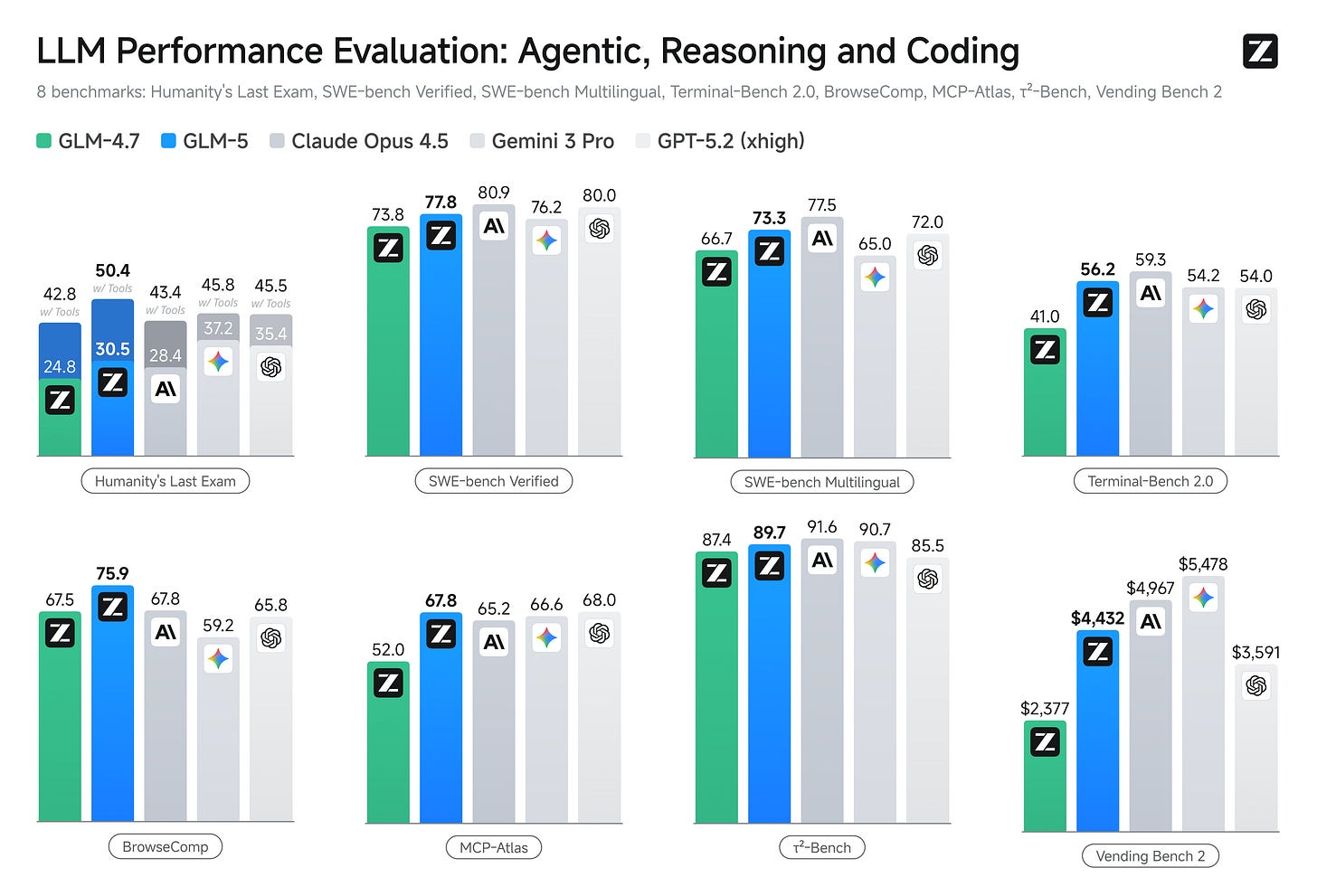

Results: Competitive with frontier models across coding, creative writing, and complex problem-solving tasks.

Open source and affordable: Released under MIT license with open weights. Available on OpenRouter at approximately $0.80 per million input tokens and $2.56 per million output tokens, roughly six times cheaper than comparable proprietary models.
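For a rough sense of what the GLM-5 figures above imply, here is a minimal Python sketch. It uses only the numbers quoted in this issue. The function names and the example workload (200,000 input tokens, 20,000 output tokens) are hypothetical, and the GLM-4.7 token count is back-calculated from the stated 23.9% increase rather than taken from an official source. The OpenRouter prices are approximate and may change.

```python
# Back-of-the-envelope arithmetic from the GLM-5 figures quoted above.
# All constants come from this issue; function names are illustrative only.

TOTAL_PARAMS_B = 744        # total MoE parameters, in billions
ACTIVE_PARAMS_B = 40        # parameters active per token, in billions
PRETRAIN_TOKENS_T = 28.5    # pre-training tokens, in trillions
INCREASE_OVER_PREV = 0.239  # stated increase over GLM-4.7
INPUT_USD_PER_M = 0.80      # approximate price per million input tokens
OUTPUT_USD_PER_M = 2.56     # approximate price per million output tokens


def active_fraction() -> float:
    """Share of the model's parameters that are active for each token."""
    return ACTIVE_PARAMS_B / TOTAL_PARAMS_B


def implied_previous_tokens_t() -> float:
    """GLM-4.7 pre-training tokens (trillions) implied by the stated increase."""
    return PRETRAIN_TOKENS_T / (1 + INCREASE_OVER_PREV)


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost of one workload at the quoted per-million-token prices."""
    cost = input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M
    return cost / 1_000_000


if __name__ == "__main__":
    print(f"Active parameters: {active_fraction():.1%} of total")
    print(f"Implied GLM-4.7 tokens: {implied_previous_tokens_t():.1f}T")
    # Hypothetical agent run: 200k tokens in, 20k tokens out.
    print(f"Example run cost: ${request_cost_usd(200_000, 20_000):.4f}")
```

With these inputs, about 5.4% of the parameters are active per token, the implied GLM-4.7 corpus is about 23.0 trillion tokens, and the example run costs roughly $0.21.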