🤖 AI Agents Weekly: Gemini 3, Nano Banana Pro, Antigravity, Agent-R1 RL Framework, Meta's SAM 3, OLMo 3

In today’s issue:

Google announces Gemini 3 with state-of-the-art reasoning and multimodal AI

Meta launches SAM 3 with promptable concept segmentation

AI2 releases OLMo 3: Fully open-source language models

xAI launches Grok 4.1

OpenAI releases GPT-5.1 Codex Max for long-running coding tasks

Google introduces Nano Banana Pro

mgrep: Semantic search for code repositories (2x token efficiency)

Google launches CodeWiki for AI-powered documentation

OpenAI’s evals framework: Measuring AI system reliability and business impact

Google launches Antigravity agentic development platform

Top AI dev news, tools, and research papers

Top Stories

Google Announces Gemini 3

Google launched Gemini 3, its most intelligent AI model to date, combining state-of-the-art reasoning capabilities with advanced multimodal understanding and agentic functionality. The release marks the first time Google has launched a Gemini model across multiple products on day one, with immediate availability in the Gemini app, Google AI Studio, Vertex AI, and Google Search.

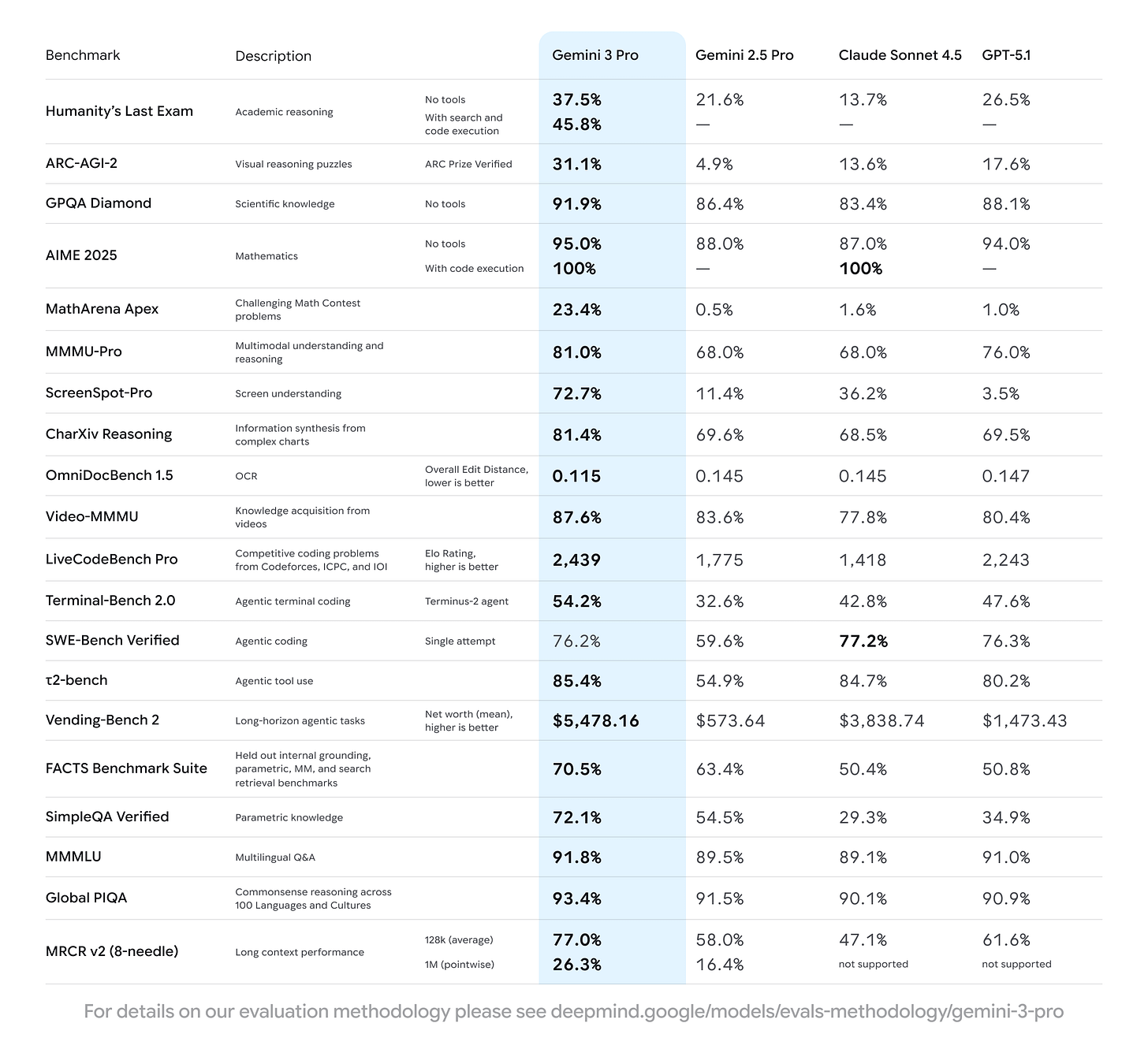

Record-breaking benchmark performance: Achieved 95% on AIME 2025 (100% with code execution), 37.5% on Humanity’s Last Exam without tools, 91.9% on GPQA Diamond for PhD-level reasoning, and 23.4% on MathArena Apex. The model scored 1501 Elo on LMArena, surpassing Gemini 2.5 Pro’s 1451, with breakthrough multimodal scores of 81% on MMMU-Pro and 87.6% on Video-MMMU.

Deep Think mode for complex reasoning: Introduces an enhanced reasoning mode that scores 41% on Humanity’s Last Exam (vs. 37.5% in standard mode), 93.8% on GPQA Diamond, and 45.1% on ARC-AGI-2 with code execution, letting the model tackle problems that require extended deliberation.

Generative UI capabilities: Lets the model generate not just content but entire user experiences, including web pages, games, tools, and applications that are designed and customized on the fly in response to a prompt. The model also natively processes text, images, video, audio, and code within a 1 million-token context window.