🤖 AI Agents Weekly: DINOv3, Claude Sonnet-1M, GLM-4.5V, Benchmarking AI Agent Memory, Deep Agents, Claude Code Output Styles

DINOv3, Claude Sonnet-1M, GLM-4.5V, Benchmarking AI Agent Memory, Deep Agents, Claude Code Output Styles

In today’s issue:

Claude Sonnet expands to 1M-token context window

Z.AI introduces GLM-4.5V

Meta introduces DINOv3

Benchmarking AI Agent Memory

Mistral Medium 3.1

Claude Code Output Styles

Qodo Command scores 71.2% on SWE-bench Verified

Evaluating LLM Agents on long-horizon tasks

Designing memory-augmented AR agents

Top AI devs news, research, and product updates.

Top Stories

Claude Sonnet 4 Expands to 1M-token Context

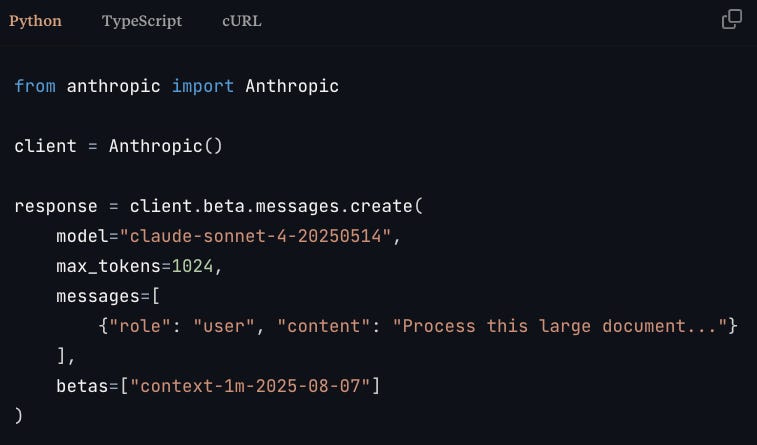

Anthropic has increased Claude Sonnet 4’s context window to 1 million tokens, a 5× jump, now in public beta on the Anthropic API and Amazon Bedrock, with Google Cloud’s Vertex AI coming soon. This enables processing entire codebases, large document sets, or multi-step agent workflows in one request.

Expanded capabilities – Handle over 75K lines of code or dozens of research papers with full cross-reference capability.

New use cases – Large-scale code analysis, comprehensive document synthesis, and persistent context for multi-step, tool-using agents.

Pricing – Prompts ≤200K tokens: $3/MTok input, $15/MTok output; >200K tokens: $6/MTok input, $22.50/MTok output. Prompt caching and batch processing can cut costs up to 50%.

Customer adoption – Bolt.new reports improved large-project coding accuracy; iGent AI says the upgrade enables multi-day, production-scale engineering sessions for their autonomous software agent.

Availability – Currently for Tier 4/custom API users, with broader rollout planned; also on Amazon Bedrock and soon on Vertex AI.

💡 Here are our thoughts on why this matters:

Full-system visibility – You can feed entire codebases (including tests, configs, and docs) into the model without chunking or elaborate retrieval pipelines. This makes it possible to run whole-architecture reasoning, detect cross-file dependency issues, and refactor with full global awareness.

Richer agent memory – Multi-step, tool-using agents can now carry the entire history of a session, API specs, execution logs, and prior tool outputs, inside the model without losing coherence, reducing error cascades from truncated context.

Better alignment and consistency – In research workflows, you can load all relevant papers, experiments, and notes at once. The model can maintain a consistent analytical thread instead of stitching together partial results from smaller contexts.

Simplified pipeline design – With fewer retrieval and chunking steps, devs can cut complexity, latency, and failure points in AI applications, which is especially valuable for latency-sensitive or mission-critical systems.

New frontier for model evaluation – This scale opens doors for testing long-horizon reasoning, code synthesis across massive repos, and multi-day agentic workflows, use cases that were previously impractical without extensive orchestration.