🤖 AI Agents Weekly: Code World Model, Gemini Robotics-ER 1.5, Figma MCP server, Overhearing LLM Agents, Qwen3-Max, Gamma API

In today’s issue:

OpenAI introduces ChatGPT Pulse

Google releases Gemini Robotics-ER 1.5

On overhearing LLM Agents

Figma introduces the Figma MCP server

Meta releases Code World Model

Qwen releases Qwen3-Max

Coral Protocol just launched Coral v1

Gamma launched its API to generate presentations

Alibaba released Qwen3-Omni

Top AI news, papers, and product updates.

Top Stories

Overhearing LLM Agents

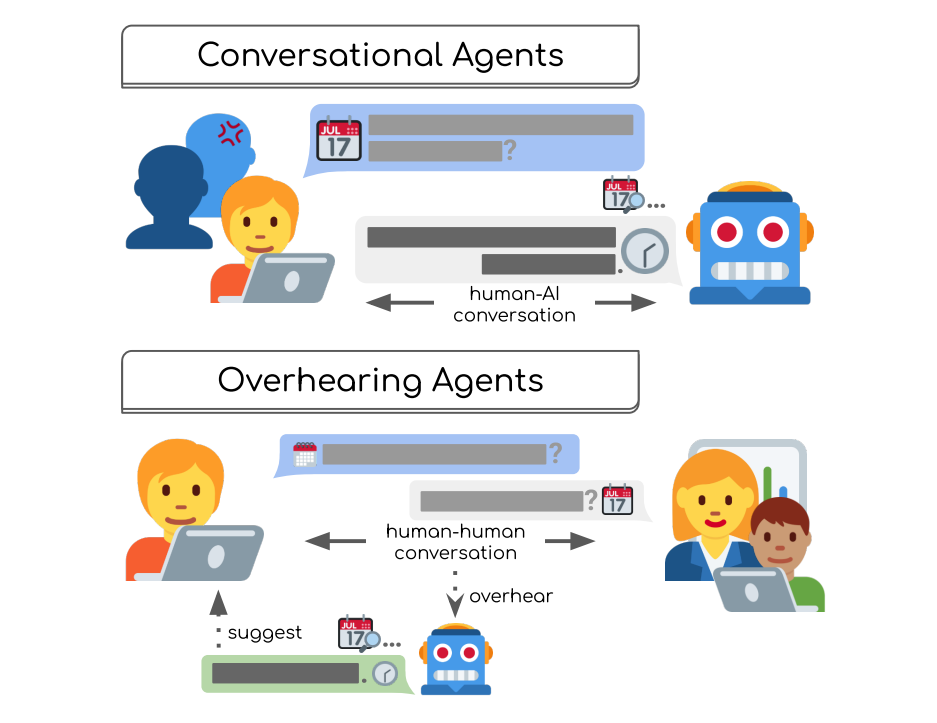

A first survey of overhearing agents that quietly monitor ambient human activity and assist only when useful, contrasting them with standard chat-style conversational agents. The paper defines a taxonomy, design principles, and open problems to guide research and system building.

Paradigm and scope. Overhearing agents listen to multi-party activity (audio, text, video) and act without direct dialogue. They infer goals, time interventions, and route actions through tools while keeping language output mostly for internal reasoning rather than user-facing chat.

Taxonomy of interactions. Three user-facing axes:

• Initiative: always-active, user-initiated, post-hoc analysis, and rule-based activation.

• Input modality: audio, text, video (often combined).

• Interfaces: web/desktop, wearables, and smart-home surfaces for discreet, timely surfacing.

System design dimensions.

• State: read-only retrieval vs read-write actions that change the environment.

• Timeliness: real-time support vs asynchronous tasks queued for later review.

• Interactivity: foreground suggestions vs background tool use to quietly update internal world models.

Design principles and tooling. The paper emphasizes privacy and consent, on-device options, PII redaction, and encrypted storage. Its UX guidance covers glanceable and verifiable suggestions, easy dismissal, reversible and editable actions, and smart queues that prioritize time-sensitive items. It recommends modular, LLM-agnostic, asynchronous-first tool interfaces that developers can compose in plain Python, with audio/video I/O and mobile affordances.
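To make the design dimensions and the "asynchronous-first, plain Python" recommendation more concrete, here is a minimal sketch. It is not the paper's code: every name in it (`AgentProfile`, `Suggestion`, `SuggestionQueue`, `lookup_tool`, `handle_utterance`) is hypothetical, and the "smart queue" is simplified to a heap ordered by an assumed deadline in seconds.

```python
import asyncio
import heapq
from dataclasses import dataclass, field
from enum import Enum


class Initiative(Enum):
    ALWAYS_ACTIVE = "always-active"
    USER_INITIATED = "user-initiated"
    POST_HOC = "post-hoc analysis"
    RULE_BASED = "rule-based activation"


class State(Enum):
    READ_ONLY = "read-only"    # retrieval only
    READ_WRITE = "read-write"  # actions that change the environment


class Timeliness(Enum):
    REAL_TIME = "real-time"
    ASYNCHRONOUS = "asynchronous"


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical record placing one agent on the axes described above."""
    initiative: Initiative
    modalities: frozenset  # e.g. {"audio", "text"}
    state: State
    timeliness: Timeliness


@dataclass(order=True)
class Suggestion:
    deadline_s: float                 # only sort key: sooner deadline pops first
    text: str = field(compare=False)  # payload, ignored when ordering


class SuggestionQueue:
    """Assumed 'smart queue': a min-heap keyed on deadline."""

    def __init__(self) -> None:
        self._heap: list = []

    def push(self, suggestion: Suggestion) -> None:
        heapq.heappush(self._heap, suggestion)

    def pop(self) -> Suggestion:
        return heapq.heappop(self._heap)

    def __len__(self) -> int:
        return len(self._heap)


async def lookup_tool(query: str) -> str:
    """Hypothetical read-only tool standing in for a real retrieval call."""
    await asyncio.sleep(0)  # yield to the event loop, as real I/O would
    return f"note about {query}"


async def handle_utterance(utterance: str, queue: SuggestionQueue,
                           deadline_s: float) -> None:
    """Run the tool in the background and queue the result for later surfacing."""
    result = await lookup_tool(utterance)
    queue.push(Suggestion(deadline_s, result))


async def demo() -> list:
    queue = SuggestionQueue()
    # Both overheard utterances are processed concurrently.
    await asyncio.gather(
        handle_utterance("quarterly budget", queue, deadline_s=300.0),
        handle_utterance("meeting room change", queue, deadline_s=30.0),
    )
    return [queue.pop().text for _ in range(len(queue))]
```

Calling `asyncio.run(demo())` surfaces the room-change note before the budget note, because its assumed deadline is sooner. A real system would likely weigh more than a deadline (relevance, user context, dismissal history), so treat the ordering rule as a placeholder.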

Open research challenges. Five directions: detect optimal intervention points in continuous streams; measure real utility and reduce suggestion fatigue; raise multimodal throughput with information-aware tokenization; build libraries that natively support audio/video, async, and mobile; negotiate consent in multi-party settings with selective processing.
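The first two challenges (timing interventions and limiting suggestion fatigue) are open problems, so the following is only a toy baseline, not a method from the paper. It assumes some upstream model already produces a usefulness score between 0 and 1; the `InterventionGate` class, its threshold, and its cooldown are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class InterventionGate:
    """Toy gate: surface a suggestion only if it scores high enough and the
    user has not been interrupted too recently."""
    threshold: float = 0.7    # hypothetical minimum usefulness score
    cooldown_s: float = 60.0  # hypothetical minimum gap between suggestions
    _last_fired_s: float = field(default=float("-inf"), init=False, repr=False)

    def should_intervene(self, score: float, now_s: float) -> bool:
        if score < self.threshold:
            return False  # not useful enough to interrupt
        if now_s - self._last_fired_s < self.cooldown_s:
            return False  # still cooling down from the last suggestion
        self._last_fired_s = now_s
        return True
```

With the defaults, a score of 0.9 at t=0 fires, a score of 0.95 at t=10 is suppressed by the cooldown, and a score of 0.8 at t=61 fires again. A fixed threshold and cooldown ignore context entirely, which is part of why the survey lists intervention timing as unsolved.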