🤖AI Agents Weekly: Agentic Reasoning Survey, Claude's New Constitution, Devin Review, Codex Agent Loop, The Assistant Axis, D4RT, Skills.sh


In today’s issue:

Comprehensive survey on agentic reasoning for LLMs

Anthropic releases Claude’s new constitution

Cognition launches Devin Review for AI-powered code review

OpenAI unrolls how the Codex agent loop works

Anthropic research reveals the “Assistant Axis” in LLMs

Google DeepMind introduces D4RT for 4D scene reconstruction

World Labs launches API for generating navigable 3D environments

Palantir details securing agents in production with Agentic Runtime

And all the top AI dev news, papers, and tools.

Top Stories

Agentic Reasoning for LLMs

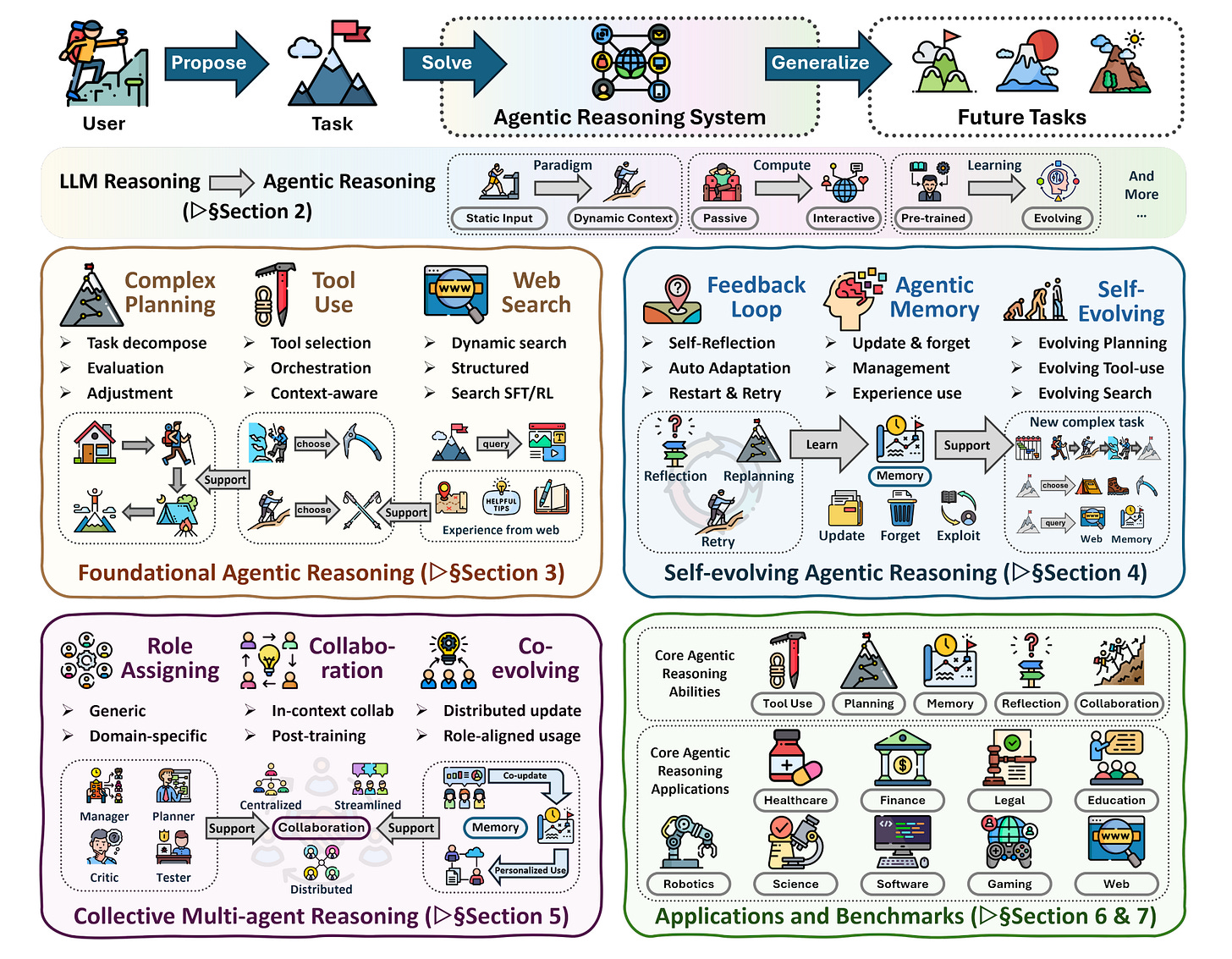

A team of 30+ researchers published a comprehensive survey on agentic reasoning, providing a unified framework for understanding how LLMs function as autonomous agents. The paper structures the field across three complementary layers that bridge cognitive processes with actionable behaviors.

Three-layer framework – The survey organizes agentic reasoning into three layers: foundational (single-agent planning, tool use, search), self-evolving (feedback, memory, adaptation), and collective (multi-agent coordination and knowledge sharing).

Reasoning approaches – Distinguishes between in-context reasoning, which scales test-time interaction, and post-training reasoning, which relies on reinforcement learning and fine-tuning.

Applied domains – Reviews frameworks across science, robotics, healthcare, autonomous research, and mathematics, demonstrating the breadth of agentic applications.

Open challenges – Identifies key research directions, including personalization, extended interaction horizons, world modeling, scalable multi-agent training, and governance considerations for deploying autonomous agents.

Unrolling the Codex Agent Loop

OpenAI published a deep dive into how the Codex agent operates, revealing the iterative process that powers its coding assistant. The architecture follows a straightforward loop: take the prompt, call the model, execute any tool calls it requests, append the results to the context, and repeat until the model returns a text response.
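The loop described above can be pictured with a minimal Python sketch. This is an illustration only, not OpenAI's implementation: `run_agent_loop`, `ModelOutput`, `ToolCall`, the `max_steps` cap, and the stand-in `fake_model` are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ToolCall:
    name: str       # which tool the model wants to run
    argument: str   # a single string argument, for simplicity


@dataclass
class ModelOutput:
    text: Optional[str] = None
    tool_calls: list = field(default_factory=list)


def run_agent_loop(prompt: str,
                   model: Callable[[list], ModelOutput],
                   tools: dict,
                   max_steps: int = 10):
    """Call the model, run requested tools, append results, repeat."""
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        output = model(history)
        if not output.tool_calls:
            # A plain text response ends the loop.
            return output.text or "", history
        for call in output.tool_calls:
            result = tools[call.name](call.argument)
            history.append({"role": "tool", "name": call.name, "content": result})
    raise RuntimeError("agent loop did not finish within max_steps")


def fake_model(history: list) -> ModelOutput:
    """Hypothetical stand-in: requests one tool call, then answers."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return ModelOutput(tool_calls=[ToolCall("read_file", "README.md")])
    return ModelOutput(text=f"Done: {tool_results[-1]['content']}")


tools = {"read_file": lambda path: f"contents of {path}"}
answer, history = run_agent_loop("Summarize the README", fake_model, tools)
print(answer)  # Done: contents of README.md
```

The `max_steps` cap is a design choice of this sketch, added so a misbehaving model cannot loop forever; the source does not say how Codex bounds its loop.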

Reasoning token persistence – Reasoning tokens persist throughout the tool-calling loop but are discarded after each user turn, so context is preserved within a task but information may be lost between interactions.

Workaround strategies – Developers have adopted writing progress updates to markdown files as persistent snapshots across context windows, effectively bridging gaps between separate user interactions.

Lightweight architecture – The Codex CLI has received praise for its performance, memory efficiency, and open-source codebase compared to alternatives, though users note limitations in visibility into model reasoning during execution.

Missing features – Current limitations include the inability to interrupt mid-process, a lack of hook support (which developers claim could reduce token consumption by 30%), and changes that are applied automatically without clear diff previews.
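To make the reasoning-token behavior and the markdown workaround above more concrete, here is a rough sketch. It assumes a simplified history of role-tagged items; `end_user_turn`, `save_progress`, `load_progress`, and the `PROGRESS.md` filename are hypothetical and do not come from the Codex codebase.

```python
import tempfile
from pathlib import Path


def end_user_turn(history: list) -> list:
    """Drop reasoning items once a user turn completes (assumed behavior)."""
    return [item for item in history if item["role"] != "reasoning"]


def save_progress(path: Path, notes: list) -> None:
    """Write a markdown snapshot that a later session can read back."""
    lines = ["# Progress", ""] + [f"- {note}" for note in notes]
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")


def load_progress(path: Path) -> str:
    """Return the saved snapshot, or an empty string if none exists."""
    return path.read_text(encoding="utf-8") if path.exists() else ""


# Within one turn, reasoning sits alongside tool results in the context.
turn_history = [
    {"role": "user", "content": "Fix the failing test"},
    {"role": "reasoning", "content": "The fixture path looks wrong"},
    {"role": "tool", "content": "pytest: 1 failed"},
    {"role": "assistant", "content": "Updated the fixture path"},
]

# After the turn, only the non-reasoning items carry over.
carried_over = end_user_turn(turn_history)

with tempfile.TemporaryDirectory() as tmp:
    progress_file = Path(tmp) / "PROGRESS.md"
    save_progress(progress_file, ["Fixture path corrected", "Next: rerun full suite"])
    restored = load_progress(progress_file)

print(len(carried_over))
print(restored)
```

The snapshot file stands in for what the discarded reasoning would otherwise have carried: the next turn, or a fresh context window, can reload it instead of rediscovering the state of the task.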